AI is changing how software gets built every day. But the real question is whether it improves delivery speed, quality, and costs in measurable ways. So, in this article, you’ll see how AI tools affect daily engineering work, career paths, and business outcomes.

First, let’s look at practical uses in development.

How AI Is Used in Software Development

Many teams use AI to manage projects, write code, and keep systems stable. These are the most common areas where you can see a direct impact:

Coding

With AI-assisted development, your integrated development environment (IDE) becomes more than a text editor. Tools like GitHub Copilot and Cursor suggest entire functions, enforce coding styles, and even write documentation alongside your code snippets. This reduces the repetitive work of coding, though it also calls for more oversight, since these tools don’t guarantee complete accuracy.

Planning & Project Management

AI helps you estimate delivery timelines, prioritize backlogs, and flag risks earlier. This reshapes how you allocate resources and manage stakeholder expectations. In fact, organizations that apply AI to backlog prioritization report up to 30% faster time-to-market and higher stakeholder satisfaction.

Design & Prototyping

AI tools can now generate wireframes, adjust designs for accessibility, and create multiple prototypes at scale. Instead of spending days refining layouts, you can test variants within hours. Research from Adrenalin shows that 62% of UX designers use AI in their routines. This boosts user engagement by up to 30% through hyper-personalization.

Testing & QA

AI supports automated test generation, predictive bug detection, and regression testing powered by machine learning. That means faster test coverage and earlier feedback loops. This directly reduces the cost of defects found late in your pipeline.

Deployment & Monitoring

AI-driven monitoring can detect anomalies, forecast system failures, and fine-tune CI/CD pipelines. This allows you to prevent downtime instead of reacting after the fact, which has obvious financial and customer trust implications.
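To make the idea of anomaly detection concrete, here is a minimal Python sketch. It does not show how any particular AI monitoring product works: it swaps in a plain rolling z-score as a stand-in for a learned model, and the function name, window size, threshold, and latency samples are all hypothetical.

```python
from statistics import mean, stdev


def find_anomalies(values, window=5, threshold=3.0):
    """Return indexes whose value deviates strongly from the preceding window."""
    anomalies = []
    for i in range(window, len(values)):
        history = values[i - window:i]
        mu = mean(history)
        sigma = stdev(history)
        if sigma == 0:
            # Flat history: flag any value that differs from it at all.
            if values[i] != mu:
                anomalies.append(i)
            continue
        # z-score: distance from the recent mean, in standard deviations.
        if abs(values[i] - mu) / sigma > threshold:
            anomalies.append(i)
    return anomalies


# Hypothetical p95 latency samples in milliseconds, one per minute.
latency_ms = [120, 118, 125, 121, 119, 122, 480, 123, 120]
print(find_anomalies(latency_ms))  # prints [6], the index of the 480 ms spike
```

A production system would likely use seasonality-aware or learned baselines, but the principle is the same: compare each new signal to recent history and alert before the deviation becomes downtime.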

Maintenance & Security

From dependency updates to vulnerability scanning, AI helps you manage technical debt and reduce exposure to risks. According to SQ Magazine, 61% of cybersecurity teams have adopted AI-powered threat detection.

This shift has reduced response times by 44% and let teams identify insider threats 32% faster than manual reviews. As a result, they could lower breach risks and strengthen their production stability.

AI in Software Development Examples

Several platforms are already showing these changes. For example:

- GitHub Copilot reduces toil in coding and testing.

- Google DeepMind’s AlphaDev and AlphaEvolve optimize algorithms and infrastructure.

- IBM Watsonx provides real-time code assistance inside IDEs.

At Axify, some of our peers already use tools like JetBrains Junie, Zed AI, Claude Code, and Cursor alongside Copilot.

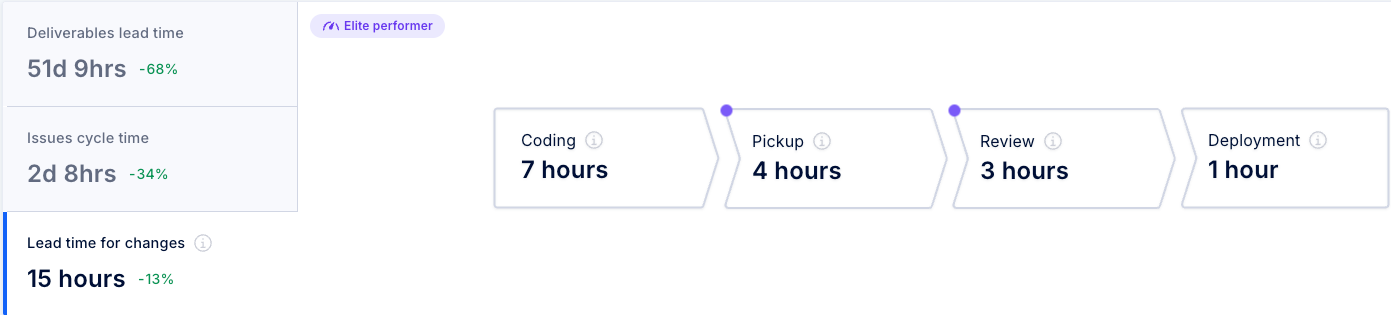

Axify doesn’t have a dedicated AI feature just yet, but our Value Stream Mapping gives you a clear view of the whole delivery pipeline from start to finish. It tracks developer productivity metrics in a way that makes it easy to spot bottlenecks and areas for improvement. That means you can actually measure how AI is affecting your workflow: where it’s speeding delivery and improving quality, and where it might be slowing things down or causing friction.

How AI Impacts Software Engineering

AI has evolved from being a side experiment in engineering to becoming part of everyday reality. According to Google’s 2025 DORA Report, nearly 90% of software development teams now use AI in their workflows. Its impact goes far beyond productivity—reshaping how teams work, which skills are in demand, and how careers evolve. These findings reveal measurable shifts that organizations must start preparing for today.

AI Impact on Daily Workflows

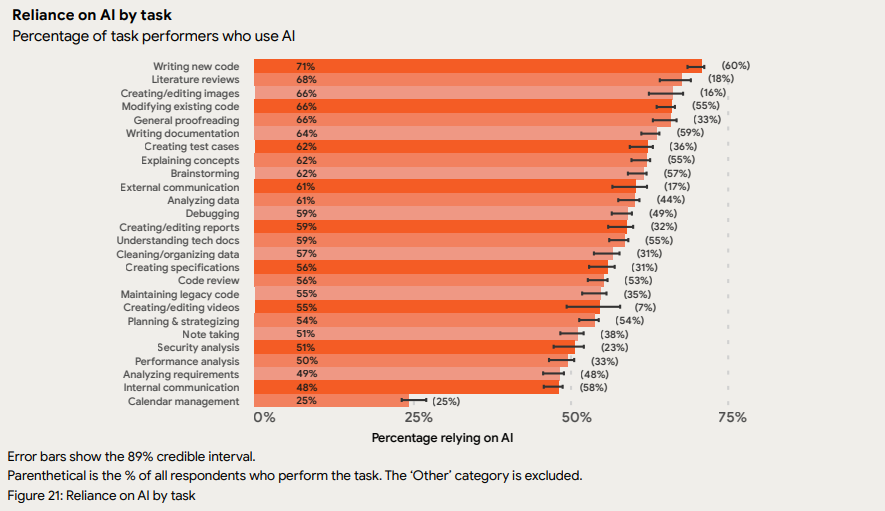

The biggest change you notice is in day-to-day coding. Engineers use AI for writing new code, modifying existing files, summarizing information, generating tests, and debugging issues. According to Google's report, 71% of engineers who write code now rely on AI to support that work. Usage is also high for proofreading (66%), editing images (66%), and reviewing research literature (68%).

The outcome is faster throughput in the development process, but not always better stability. Faster coding means more output, but speed doesn’t always equal quality. AI tends to generate code that’s longer, more repetitive, and sometimes unnecessarily complex.

It can even “hallucinate” functions that don’t exist or over-engineer solutions that a human would’ve kept simple. Without proper validation, these issues can slip through and pile up, making systems harder to maintain down the line.

This means AI doesn’t replace testing or reviews. It reshapes where you apply human judgment. Leaders who set guardrails here protect quality while still reaping efficiency.

AI Impact on Developers’ Skill Sets

The tools themselves are not the long-term differentiator; your team’s skills are. Demand is rising for prompt engineering, data literacy, and systems thinking. In practice, that means your engineers must learn how to frame AI inputs clearly, evaluate outputs critically, and understand how those outputs affect downstream workflows.

The DORA report notes that 59% of developers believe AI improved their code quality, and more than 80% reported higher productivity. Still, over 40% said gains were only slight.

That gap reflects a skill divide. Engineers trained to guide AI and check its results benefit more, while others plateau quickly. That’s your signal to invest in upskilling rather than assuming AI alone will deliver transformation.

AI Impact on Collaboration & Roles

You also see a shift in how engineers interact with product managers and designers. AI has automated many “glue tasks” such as backlog triage, meeting summaries, and drafting documentation. That changes the rhythm of collaboration. Instead of spending hours on updates, engineers can spend more time on coding.

Adidas provides a clear case study in the report.

Teams with fast feedback loops and loosely coupled architectures reported 20-30% productivity gains and a 50% increase in “Happy Time.” This means more coding, less administrative effort. On the other hand, teams with rigid processes or weak practices saw minimal benefit.

The truth is that AI is not a magic fix. It amplifies strengths and exposes weaknesses in how you manage collaboration and architecture.

Will AI Replace Programmers in 10 Years?

The short answer: no. However, AI is likely to change how programmers work.

In software development, the goal has never been to write code for the sake of it. The real objective is to deliver value to users as fast as possible.

Teams that understand this will use AI not as a cost-cutting shortcut, but as an accelerator.

Remember: Those who see AI as a way to eliminate developers will lose to those who see it as an investment. AI is a way to put more value, faster, in the hands of their users.

The data supports this transition.

According to the 2025 DORA report, 90% of today’s engineers already use AI at work. However:

- Only 7% always rely on it when solving problems

- 39% use it occasionally

The gap shows where the opportunity lies: the number of AI users is high, but adoption into daily workflows is still shallow.

In the coming years, the best teams will build around AI—blending human creativity, product intuition, and machine intelligence into a seamless loop of delivery.

AI Impact on Software Developer Salaries

The clearest financial impact is compensation. Salaries for AI-related roles are rising faster than traditional developer roles. According to ZipRecruiter, the average AI developer salary in the U.S. is $129,348 in 2025, with top earners reaching $158,500. Salaries vary widely by location, with places like Cupertino, CA, and Nome, AK paying over 20% above the national average.

At the same time, analysis from Interesting Engineering shows that entry-level AI roles pay 128% more than non-AI roles. Also, overall AI-related jobs command 78% higher salaries than other tech positions. That has widened the tech versus non-tech pay gap by 36%.

The message is clear. Engineers who upskill in generative AI, deep learning, and related areas position themselves for outsized financial returns.

Benefits of AI in Software Development

There are plenty of benefits when AI is used in software development. These findings show you where the value is strongest and what outcomes you can realistically expect.

Higher Productivity

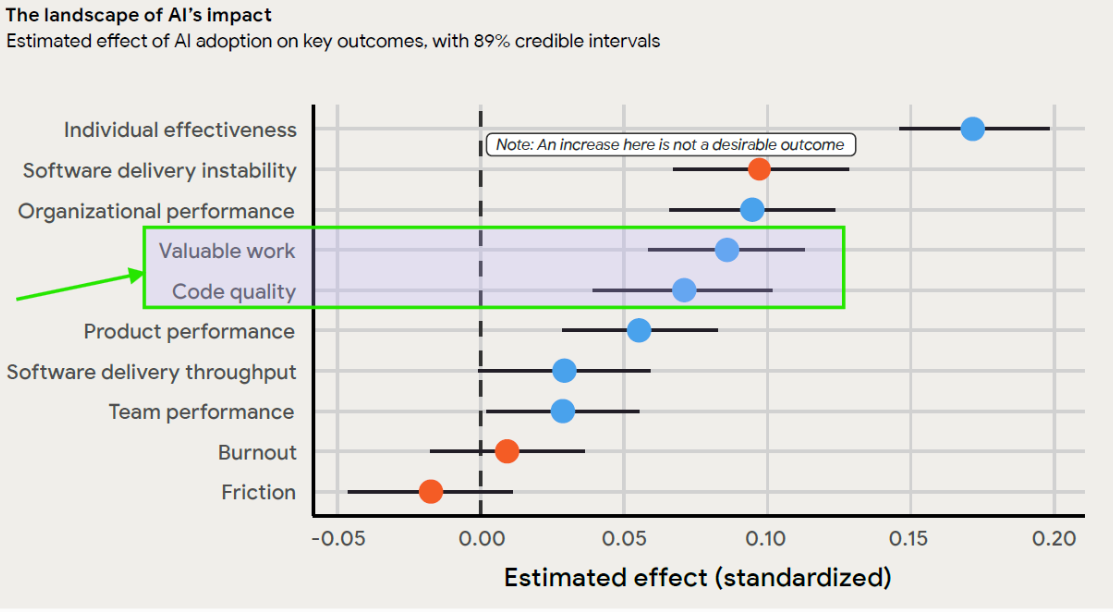

The 2025 State of AI-Assisted Software Development report shows that 90% of teams now use AI in their workflows, with over 80% reporting higher productivity. Individual effectiveness is also increased, though that’s not always the case for teams that work in small batches.

However, the report also cautions that individual effectiveness is not the most important indicator to track:

“More importantly, we argue that individual effectiveness should not necessarily be pursued as a goal in and of itself. Rather, individual effectiveness is a means to realize greater organizational, team, and product performance, and improved developer well-being.”

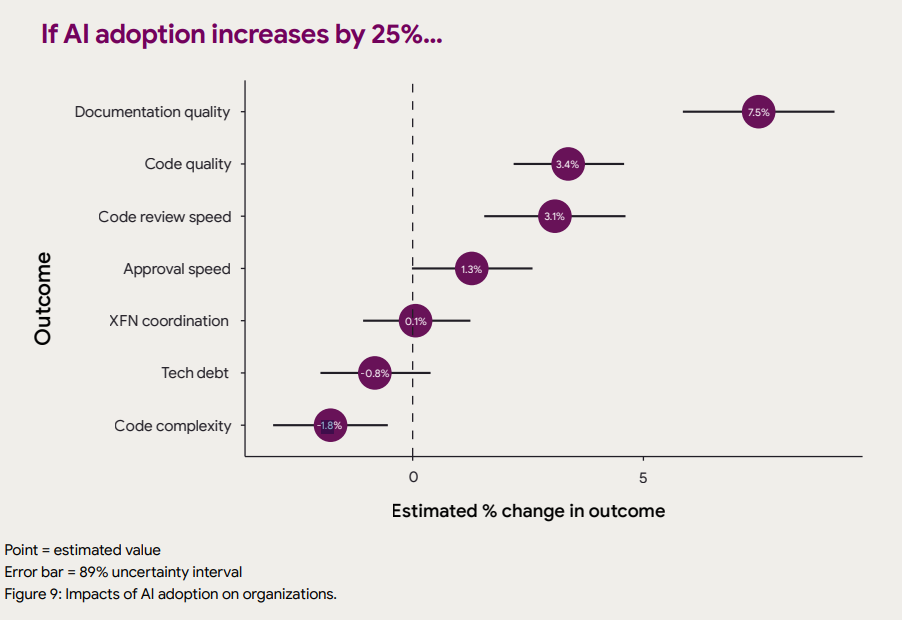

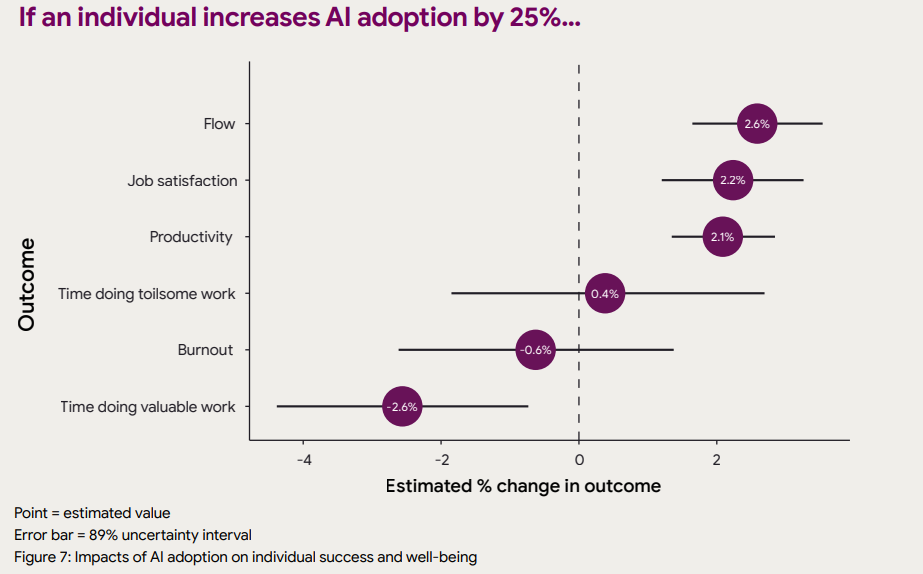

That said, according to the 2024 Accelerate State of DevOps Report, a 25% increase in AI adoption is linked with consistent improvements across engineering performance.

So, how can we make sense of these seemingly contradictory perspectives?

The Axify perspective: AI doesn’t create performance, but it can amplify it. Its real value depends on solid delivery foundations: reliable CI/CD pipelines, healthy internal platforms, and clear visibility across the value stream. When these exist, AI multiplies performance instead of magnifying dysfunction.

Better Documentation Quality

Clear documentation typically makes or breaks handoffs and long-term maintainability. The 2024 report also found a 7.5% increase in documentation quality when teams increased their AI adoption by 25%. This means you spend less time clarifying ambiguous tickets or reworking outdated specs.

That stat remains a useful benchmark.

The 2025 findings don’t replicate a specific documentation metric, though they do show that 64% of task performers use AI to write documentation.

Besides, DORA’s new framing emphasizes that AI tools only work if your team already has solid doc standards, internal style guides, clear naming conventions, and well-structured API design.

For example, GitHub Copilot and IBM Watsonx Code Assistant can generate in-line explanations or draft API references automatically. This allows your engineers to refine rather than start from scratch.

But if documentation is inconsistent or knowledge is fragmented, AI may propagate or magnify those gaps.

Higher Code Quality

The 2024 DORA report showed a 3.4% improvement in code quality correlated with a 25% increase in AI adoption. One reason is that AI-assisted linting, static analysis, and style enforcement can reduce low-level issues before code review.

The 2025 data confirms that AI adoption improves software delivery throughput (teams are shipping faster), but also slightly increases delivery instability. This means the potential for quality variation rises if guardrails are weak.

However, the data shows a slight overall increase in code quality and valuable work produced across respondents.

This shift has a financial impact as well.

Every bug caught during coding instead of in production avoids rework costs and customer-facing incidents. That means fewer fire drills and more predictable delivery.

Faster Code Reviews

AI is now a key player in most review workflows. According to the 2025 DORA report, 56% of developers use AI to assist in code reviews — often relying on tools that flag syntax issues, identify missing tests, or summarize diffs before human approval.

Still, despite these advances, review bottlenecks remain one of the most common sources of delivery delays.

Luckily, the 2024 data indicates a 3.1% increase in code review speed correlated with a 25% increase in AI adoption. This shortens the feedback cycle, which improves learning and reduces context switching for developers. This accelerated loop keeps your team’s momentum high and throughput consistent.

The Axify perspective: Our Value Stream Mapping tool helps you understand how your code review metrics connect to end-to-end delivery performance. In other words, you can see whether faster reviews actually reduce lead time and increase deployment frequency. This visibility helps you avoid local optimizations that hide systemic bottlenecks.

Faster Approvals

Approvals (whether for merges, deployments, or sign-offs) usually sit outside pure coding work.

AI can automate consistency checks or pre-validate compliance rules, which cuts idle time. In fact, the 2024 DORA report noted a 1.3% improvement in approval speed.

Faster approvals mean features reach customers earlier, helping you respond quicker to market needs.

While the 2025 DORA report doesn’t quantify approval speed, its findings show that AI-accelerated development demands faster, more automated validation loops.

DORA’s AI Capabilities Model reinforces this need, highlighting continuous evaluation and clear AI policies as foundations for stable, trustworthy delivery.

Reduced Code Complexity

Simplifying codebases is one of the harder gains to measure, yet AI shows progress here as well. The 2024 DORA report found a 1.8% decrease in complexity, likely due to AI proposing more concise refactoring options and pattern standardization.

This reduces long-term maintenance costs and lowers the barrier for onboarding new team members.

The 2025 DORA report doesn’t quantify code complexity directly.

However, it does explain that AI amplifies your existing system design, good or bad.

Therefore, teams with strong architecture, modular platforms, and well-defined coding standards experience cleaner, more consistent refactoring patterns when using AI. Others see technical debt grow faster as automation compounds poor structure.

Higher Job Satisfaction

The 2024 DORA report found a 2.2% rise in developer satisfaction when AI tools were part of the workflow. While modest, this effect is important. Developers feel less weighed down by repetitive work, which helps reduce attrition risk. For leaders facing rising salary pressures, this is a lever to improve retention without relying solely on compensation.

The 2025 DORA report replaces last year’s small satisfaction gains with a deeper view of developer well-being. Instead of a single metric, it measures burnout and friction as core indicators of team health.

The results show a clear divide: high-performing teams report low burnout and minimal friction, while under-resourced or process-heavy teams experience the opposite. Roughly 40% of surveyed teams operate in sustainable, low-burnout environments, proving that speed and well-being can coexist.

Axify’s perspective: AI’s impact on satisfaction depends on system health: the same technology that lightens workloads in disciplined teams can exhaust developers in unstable ones. Value stream management and strong internal platforms create a buffer against delivery friction. This leads to faster feedback, smoother hand-offs, and a stronger sense of progress for developers.

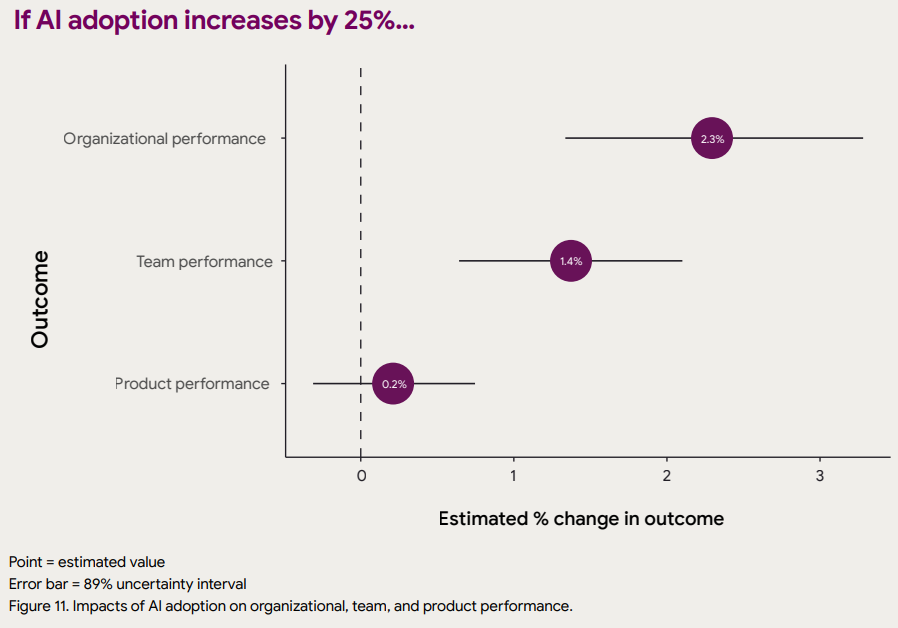

Stronger Team and Organizational Performance

When viewed at a higher level, the 2024 DORA report showed a 1.4% improvement in team performance and a 2.3% improvement in organizational performance.

The 2025 DORA Report shows a clear and measurable link between AI adoption and performance at both the team and organizational levels.

Teams that use AI effectively report higher collaboration scores and stronger delivery performance, while organizations with mature AI integration see tangible improvements in overall business outcomes.

In fact, AI adoption demonstrates one of the strongest positive effects across all DORA metrics—especially in areas of team productivity, product quality, and organizational performance.

These gains compound in larger organizations and are most visible in revenue-driven outcomes such as faster release cycles and reduced incident costs.

The Axify perspective: Many companies implement AI thinking they’ll cut costs, but AI isn’t a cost saver; it’s a value accelerator. Winning organizations treat it as a strategic investment instead of a mere replacement of other resources.

Faster Decision-Making and Planning

The 2025 DORA report shows that AI is increasingly used for higher-level engineering decisions. Developers use AI for:

- Planning & strategizing (54%)

- Analyzing requirements (49%)

- Performance analysis (50%)

- Analyzing data (61%)

After all, good AI forecasting models can help you assess backlog health, delivery risk, and timeline accuracy. When integrated with project management tools, they give you earlier warning of bottlenecks and resource misallocations. This allows you to reallocate effort proactively instead of reacting after slippage.
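As a rough illustration of timeline forecasting, the sketch below uses a Monte Carlo simulation over past weekly throughput. This is a simple statistical stand-in, not an AI model and not a technique taken from the DORA report; the function name, the sample throughput history, and the backlog size are all hypothetical.

```python
import random


def forecast_weeks(backlog_size, weekly_throughput, trials=2000, seed=7):
    """Estimate how many weeks a backlog may take by resampling past throughput."""
    if max(weekly_throughput) <= 0:
        raise ValueError("need at least one week with completed items")
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible
    outcomes = []
    for _ in range(trials):
        remaining, weeks = backlog_size, 0
        while remaining > 0:
            # Each simulated week completes as many items as a random past week.
            remaining -= rng.choice(weekly_throughput)
            weeks += 1
        outcomes.append(weeks)
    outcomes.sort()
    return {
        "p50": outcomes[int(0.50 * (trials - 1))],  # median outcome
        "p85": outcomes[int(0.85 * (trials - 1))],  # more conservative estimate
    }


# Hypothetical history: items completed in each of the last six weeks.
history = [3, 5, 4, 6, 2, 5]
forecast = forecast_weeks(backlog_size=40, weekly_throughput=history)
print(forecast)
```

Reporting a range (for example, the 50th and 85th percentiles) rather than a single date is what gives you the earlier warning: if the conservative estimate slips past a deadline, you can reallocate effort before the slippage happens.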

Better User Experience and Personalization

AI is also shaping the end product. From UX design recommendations to dynamic content personalization, teams can test and adapt user journeys faster.

For example, AI-assisted A/B testing lets you validate new layouts or flows with minimal engineering effort. That means faster response to customer behavior, which strengthens your competitive position.

DORA’s 2025 data underline this as well.

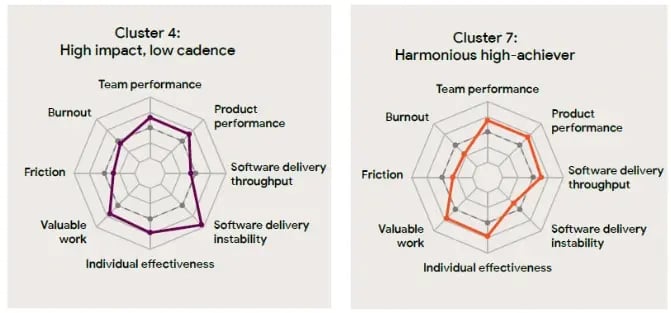

To analyze AI’s impact, DORA 2025 groups engineering teams into seven clusters. These are team archetypes that reflect how different patterns of speed, stability, collaboration, and burnout interact.

Each cluster represents a common operating model rather than a maturity level, so organizations can compare performance drivers across contexts.

The report links higher AI adoption to stronger product performance and user value, particularly in:

- Cluster 4, High Impact, Low Cadence: Teams that deliver fewer but high-value releases. They emphasize rigorous validation, user-centric design, and deliberate delivery pacing that maximizes customer experience.

- Cluster 7, Harmonious High-Achievers: Highly effective, low-burnout teams with strong collaboration, stable systems, and consistent feedback loops. Their balanced workflow lets them integrate AI-driven insights safely and continuously into UX improvements.

These correlations show that AI is likely to improve user experience the most when teams combine clear validation practices, delivery stability, and human-centered design discipline.

Risks of AI in Software Development

While AI adoption brings measurable benefits, the 2024 Accelerate State of DevOps Report also shows risks that you can’t ignore.

These can directly affect predictability, customer experience, and ultimately business outcomes.

Here are the key watchpoints that the data reveals.

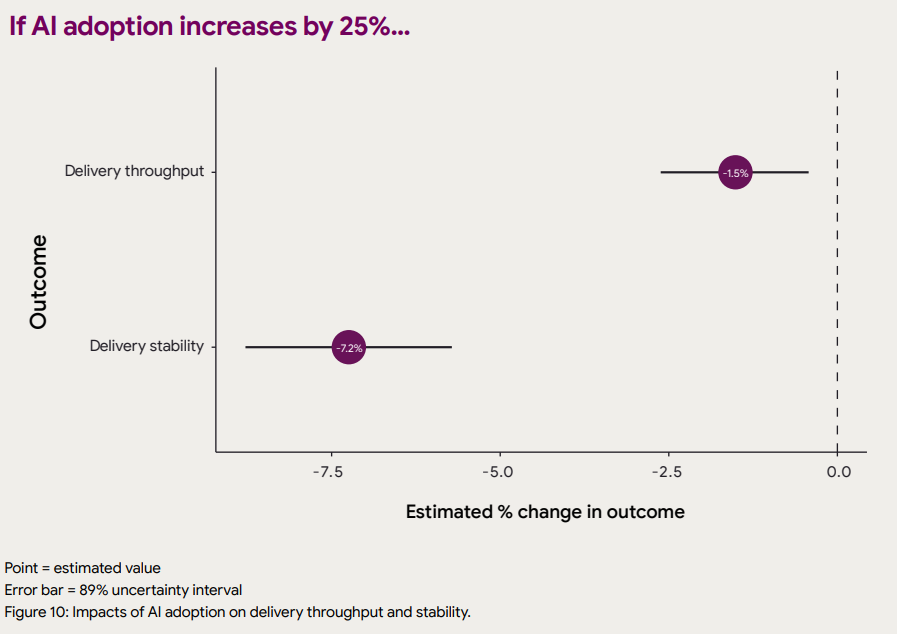

Delivery Stability and Throughput

According to the 2024 DORA report, AI in software development leads to an estimated 1.5% drop in delivery throughput and a 7.2% reduction in delivery stability.

The new 2025 report shows that AI adoption now improves software delivery throughput, a key shift from last year.

However, it still increases delivery instability.

This suggests that while teams are adapting for speed, their underlying systems have not yet evolved to safely manage AI-accelerated development.

Over-Reliance on AI Outputs

AI can accelerate code reviews and approvals, but, as we explained above, speed doesn’t always equal quality.

That’s why, according to the 2025 State of DevOps Report, 30% of respondents declare little to no trust in the code generated by AI.

The Axify perspective: We believe that this skepticism is healthy and a ‘trust but verify’ approach is a sign of mature adoption.

When engineers trust outputs too quickly, reviews risk becoming shallow. If your teams stop questioning suggestions from GitHub Copilot or Watsonx, subtle defects may slip through undetected.

In the short term, this can mean more bugs in production and higher recovery costs. Over time, however, the greater risk is cultural: as developers grow used to delegating validation, your organization’s engineering discipline may gradually weaken.

Code Churn Risk

One pattern flagged in the report is rising code churn after AI adoption. Some studies found churn nearly doubled when teams leaned heavily on AI-generated suggestions. That means more rework, abandoned code, and wasted effort.

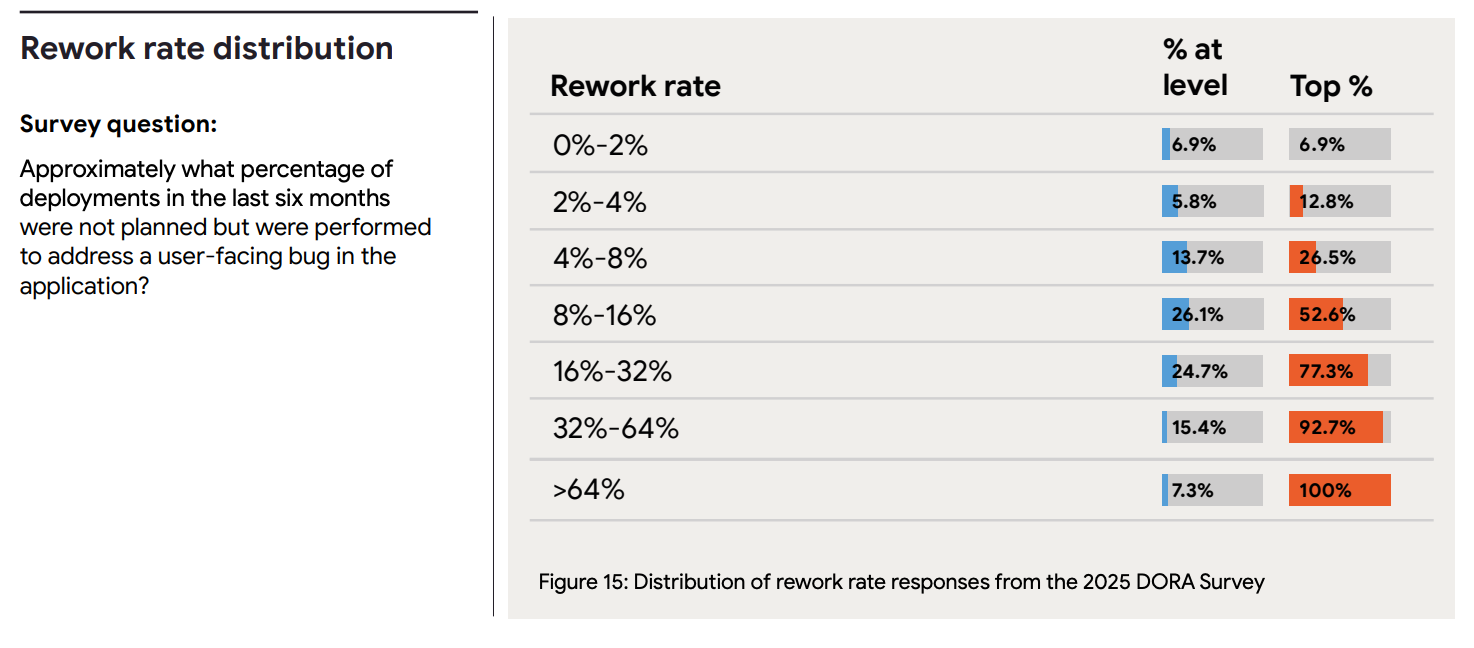

Figure 15 in the 2025 State of DevOps report confirms this.

As you can see, the majority of respondents (roughly 70% of them) have a rework rate of 8-16% and higher. This confirms that unplanned fixes remain common.

The cause usually lies in AI generating plausible but suboptimal solutions that require later fixes. For project managers, high churn inflates cycle time and disrupts velocity forecasting. For executives, it means higher delivery costs without a corresponding rise in value.
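If you want to track rework on your own deployments, a minimal sketch could look like the following. It assumes rework rate means the share of deployments that were unplanned fixes for user-facing bugs, which is our reading of the metric; the `Deployment` record and the sample data are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Deployment:
    id: str
    unplanned_fix: bool  # True if shipped only to fix a user-facing bug


def rework_rate(deployments):
    """Percentage of deployments that were unplanned fixes."""
    if not deployments:
        return 0.0
    unplanned = sum(1 for d in deployments if d.unplanned_fix)
    return 100.0 * unplanned / len(deployments)


# Hypothetical sample: eight deployments, one of them an unplanned fix.
deployments = [
    Deployment("d1", False), Deployment("d2", False),
    Deployment("d3", True), Deployment("d4", False),
    Deployment("d5", False), Deployment("d6", False),
    Deployment("d7", False), Deployment("d8", False),
]
print(f"Rework rate: {rework_rate(deployments):.1f}%")  # prints "Rework rate: 12.5%"
```

Tracking this number before and after an AI rollout shows whether the extra output is turning into extra fixes.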

Reduced “Valuable Work” Time

AI increases throughput, but DORA research surprisingly found that developers usually spend less time on what they perceive as “valuable work.”

This is the vacuum hypothesis. AI clears low-level tasks, but if organizations don’t redirect the freed-up time to strategic work, the gains vanish into unstructured overhead.

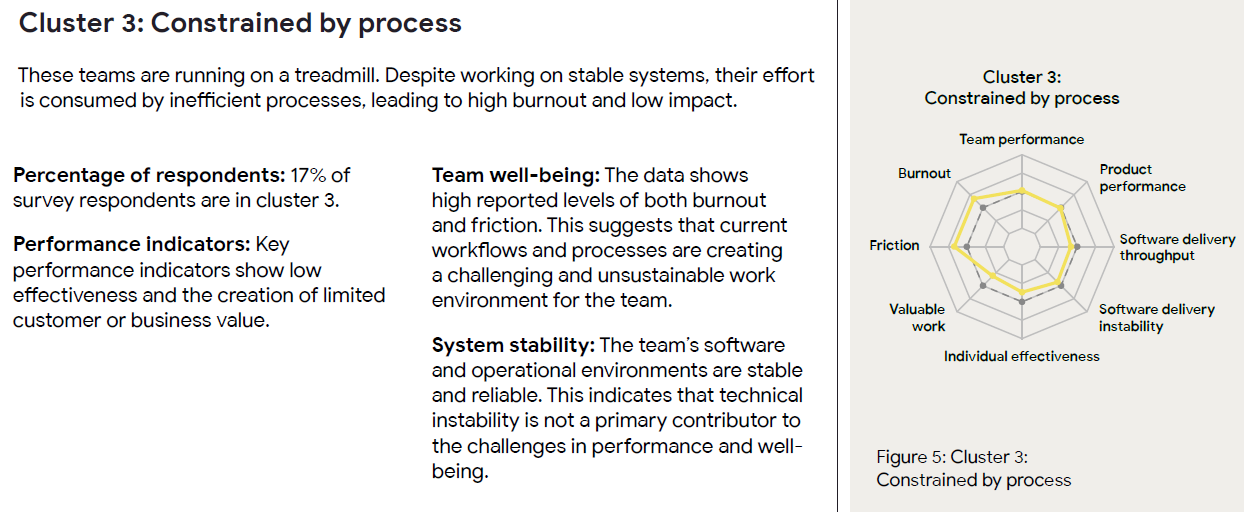

Note: The 2025 State of DevOps data still supports the “vacuum hypothesis,” if we refer back to its definition of clusters. As we mentioned earlier, these clusters represent team archetypes that group organizations by shared performance patterns. Some clusters (e.g., Constrained by Process) show low valuable-work scores despite stable systems, meaning people feel busy but not impactful.

This is a management challenge. Without intentional effort to align AI productivity with roadmap goals, your team risks filling the vacuum with busywork that doesn’t move business metrics.

But there’s another layer to this. More throughput doesn’t automatically mean better output. AI can produce more code, sure, but that code still needs validation. Plus, prompting AI isn’t free: you’re spending mental energy figuring out what to ask, refining inputs, and guiding it toward the right result. So while AI can speed things up, it still takes human effort to steer and clean up after it.

Environmental Impact

Scaling AI also comes with an environmental cost. The DORA 2024 report warns that by 2030, AI could drive a 160% increase in data center power demand. For CIOs and CTOs, this translates into rising infrastructure bills and pressure from sustainability commitments.

Higher energy use from model training and inference can become a reputational liability for companies reporting ESG metrics. Higher productivity per engineer may come at the expense of organizational sustainability targets.

Regulatory and Ethical Uncertainty

Legal frameworks for AI-generated code are still immature. Questions remain about ownership, liability, and compliance. If your AI assistant generates code derived from unlicensed sources, you may face intellectual property risks.

On top of that, security and privacy regulations may tighten around AI-assisted tooling. For executives making investment decisions, this uncertainty means delayed ROI realization and the potential for costly rework when regulations shift.

Bias in Outputs

AI models inherit patterns from their training data, including harmful biases. That can show up in auto-generated recommendations, accessibility features, or prioritization models.

If you rely on biased outputs for design or testing, you risk alienating users or embedding discriminatory practices into products. The reputational and compliance risks are significant.

Security Issues

Attackers are also exploring how to exploit AI systems. For instance, adversarial prompts can manipulate code assistants into suggesting insecure patterns or exposing sensitive data. If teams adopt these outputs without review, they risk introducing vulnerabilities.

The financial and reputational damage from a single breach can easily outweigh the productivity gains of unchecked AI adoption. That’s why establishing guardrails, review workflows, and monitoring mechanisms is essential when deploying AI at scale.

Job Displacements

AI doesn’t replace developers—it redefines their role. As routine coding becomes automated, the emphasis moves toward system design, architecture, and the responsible use of AI within engineering teams.

For engineering leaders, this means rethinking career paths.

The DORA 2025 report also frames AI as requiring new skills and cultural adaptation:

“Your training programs should focus on teaching teams how to critically guide, evaluate, and validate AI-generated work, rather than simply encouraging usage.”

In truth, some roles may shrink, which may lead to morale challenges and higher turnover.

And for executives, unplanned displacement carries reputational risks if employees see AI as a threat rather than a tool.

That’s why upskilling, and even reskilling, is so necessary.

How to Implement AI in Your Software Development Team

When assessing the impact of AI, Axify focuses on two main dimensions: Adoption and Impact.

- Adoption shows how broadly AI is being used. Metrics like the number of active users, usage over time, and acceptance rate reveal the extent to which your teams are integrating AI into daily work.

- Impact validates whether those investments translate into value. Metrics that reflect this are faster delivery, better quality, and more predictable outcomes.
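The adoption side can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not how Axify computes these metrics: the `SuggestionEvent` record, the field names, and the sample events are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class SuggestionEvent:
    user: str       # engineer who was shown an AI suggestion
    accepted: bool  # whether they kept it


def adoption_summary(events, team_size):
    """Summarize how broadly AI is used and how often suggestions are kept."""
    active_users = {e.user for e in events}
    shown = len(events)
    accepted = sum(1 for e in events if e.accepted)
    return {
        "active_users": len(active_users),
        "active_share": len(active_users) / team_size if team_size else 0.0,
        "acceptance_rate": accepted / shown if shown else 0.0,
    }


# Hypothetical week of events for a four-person team.
events = [
    SuggestionEvent("ana", True),
    SuggestionEvent("ana", True),
    SuggestionEvent("ana", False),
    SuggestionEvent("ben", False),
]
summary = adoption_summary(events, team_size=4)
print(summary)
# prints {'active_users': 2, 'active_share': 0.5, 'acceptance_rate': 0.5}
```

Computing the same summary per week gives you the usage-over-time view. On its own it says nothing about impact, which is why it needs to be read next to delivery metrics.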

As you increase adoption, you want to confirm that delivery time shortens and stability remains steady, too. Axify helps you connect those dots through three key capabilities:

- DORA metrics: These industry-standard indicators (deployment frequency, lead time for changes, change failure rate, and failed deployment recovery time) quantify how AI affects both speed and stability. They help you prove whether faster delivery still preserves stability and quality.

- Value Stream Mapping (VSM): Axify’s VSM dashboard maps your end-to-end software flow, showing where time is lost (e.g., in coding, reviews, testing, or deployment). This visibility helps you identify which parts of the workflow benefit most from automation or AI assistance.

- AI Performance Comparison feature: This upcoming feature will measure and compare your team’s performance with and without AI, clearly showing its impact on delivery time, DORA metrics, and other key indicators. You’ll gain a data-driven view of how AI adoption affects real-world outcomes.
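For readers who want to see what the four DORA metrics look like as calculations, here is a minimal sketch. It is not Axify’s implementation; the `Deploy` record, its field names, and the sample data are hypothetical, and medians are used as one reasonable way to summarize durations.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median
from typing import Optional


@dataclass
class Deploy:
    committed_at: datetime                   # first commit of the change
    deployed_at: datetime                    # when it reached production
    failed: bool = False                     # needed remediation after release
    recovered_at: Optional[datetime] = None  # when service was restored


def dora_metrics(deploys, period_days):
    """Compute the four DORA metrics for deployments within a period."""
    lead_times = [d.deployed_at - d.committed_at for d in deploys]
    failures = [d for d in deploys if d.failed]
    recoveries = [d.recovered_at - d.deployed_at for d in failures if d.recovered_at]
    return {
        "deployment_frequency_per_day": len(deploys) / period_days,
        "median_lead_time": median(lead_times) if lead_times else None,
        "change_failure_rate": len(failures) / len(deploys) if deploys else 0.0,
        "median_recovery_time": median(recoveries) if recoveries else None,
    }


# Hypothetical week: four deployments, one of which failed and was fixed in an hour.
t0 = datetime(2025, 1, 6, 9, 0)
deploys = [
    Deploy(t0, t0 + timedelta(hours=4)),
    Deploy(t0 + timedelta(days=1), t0 + timedelta(days=1, hours=10)),
    Deploy(t0 + timedelta(days=2), t0 + timedelta(days=2, hours=6),
           failed=True, recovered_at=t0 + timedelta(days=2, hours=7)),
    Deploy(t0 + timedelta(days=3), t0 + timedelta(days=3, hours=8)),
]
metrics = dora_metrics(deploys, period_days=7)
print(metrics["median_lead_time"])     # prints 7:00:00
print(metrics["change_failure_rate"])  # prints 0.25
```

Comparing these values across periods with different levels of AI adoption is one way to check whether speed gains come at the cost of stability.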

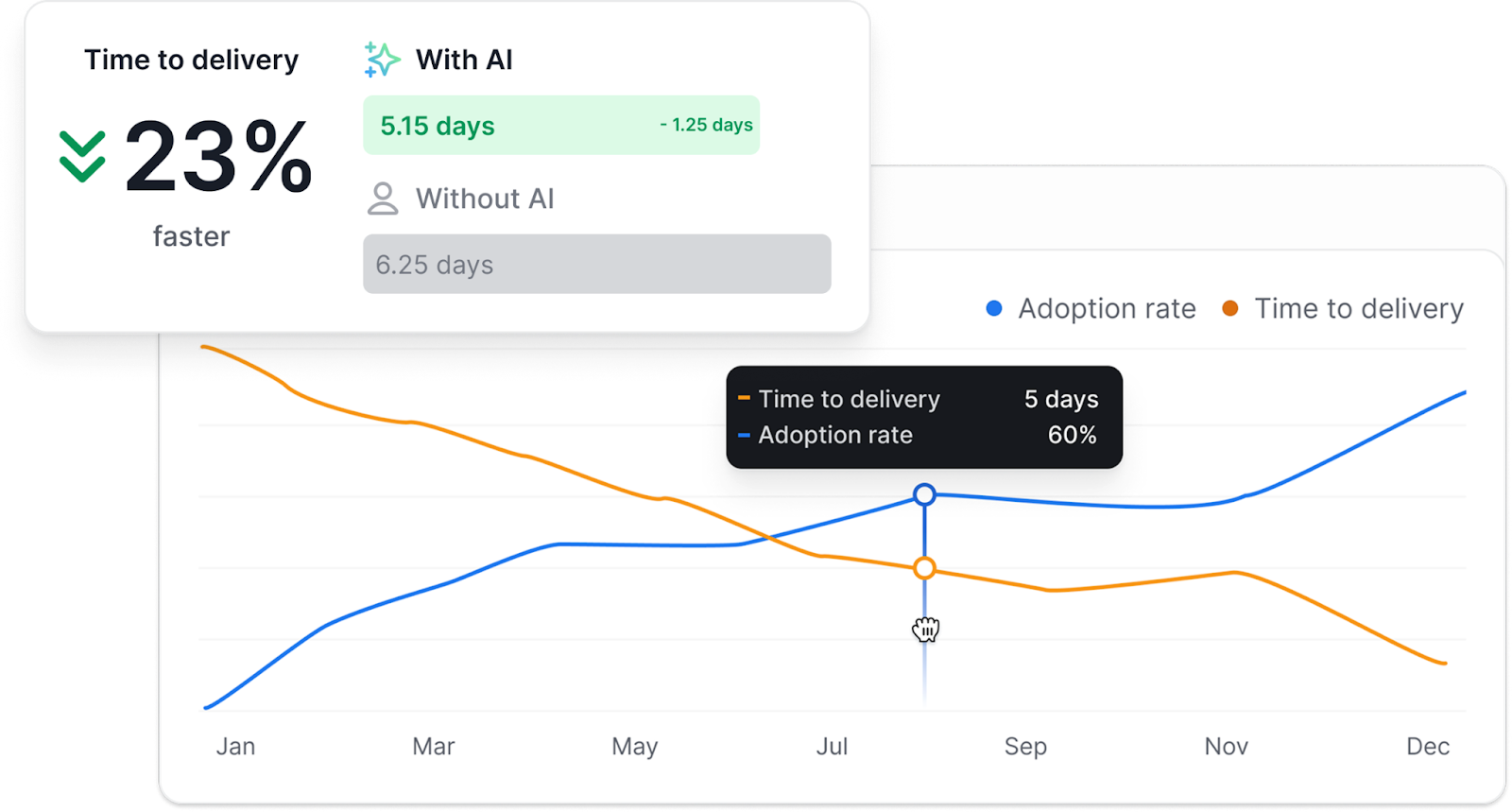

Here’s a sneak peek at our AI Performance Comparison tool:

The chart below shows delivery time that is 23% faster with AI. As the adoption rate (blue line) rises, delivery time (orange line) decreases. That’s visual proof that responsible AI adoption can accelerate delivery without compromising quality.
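A with-and-without comparison of this kind boils down to simple arithmetic. The sketch below is only an illustration, not the upcoming feature itself: the helper name and the delivery times are hypothetical, and a difference between two groups does not by itself prove that AI caused it.

```python
from statistics import median


def percent_change(baseline, current):
    """Relative change from baseline to current, as a percentage."""
    return 100.0 * (current - baseline) / baseline


# Hypothetical delivery times in days for comparable work items.
delivery_days_without_ai = [9, 10, 12, 8, 11]
delivery_days_with_ai = [7, 8, 9, 8, 6]

baseline = median(delivery_days_without_ai)  # 10
current = median(delivery_days_with_ai)      # 8
change = percent_change(baseline, current)
print(f"Median delivery time changed by {change:.1f}%")
# prints "Median delivery time changed by -20.0%"
```

Using the median rather than the mean keeps a single unusually slow item from distorting the comparison.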

Step 1: Identify Your Needs

Start by finding the areas where AI could add the most value. Use Axify’s Value Stream Mapping to see bottlenecks in your delivery pipeline (whether in code reviews, testing, or planning). This way, you’re solving a measurable problem and not just experimenting with new tools.

Step 2: Start with Your Strongest Teams and Measure Impact

Because AI acts as an amplifier, begin with the teams that already demonstrate solid engineering practices.

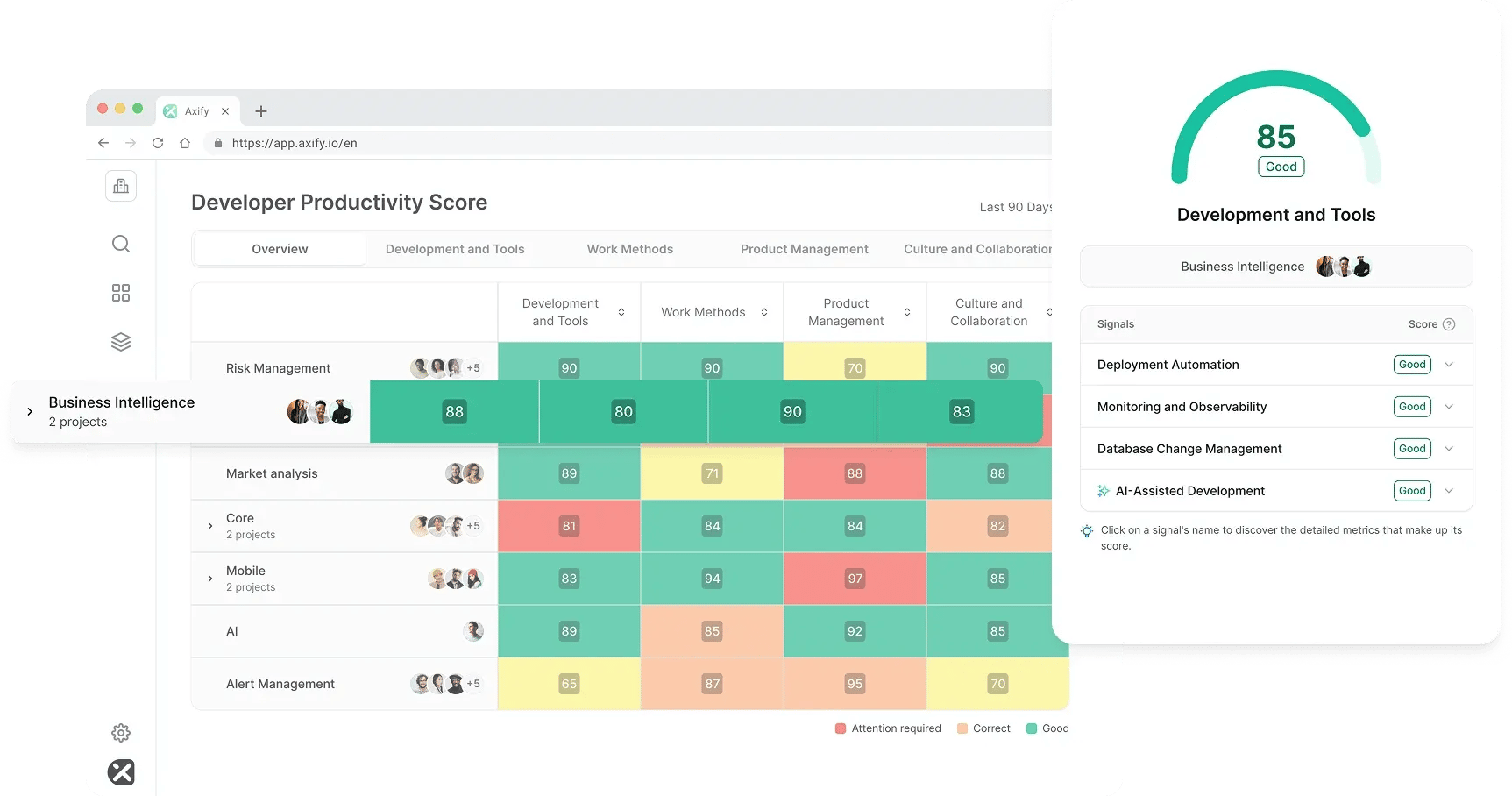

Use Axify’s Developer Productivity Assessment to identify them across the four pillars of performance (Development and tools, Ways of Working, Product management, and Culture & Collaboration).

Once pilots are running, track delivery time, throughput, work-in-progress, and merge speed alongside adoption and acceptance rates. These indicators reveal whether AI is genuinely improving your outcomes.

Axify’s analytics connect these dots automatically, showing how delivery speed and stability evolve as AI usage grows.

For example, the dashboard below gives each team a data-driven productivity score based on key signals such as automation, observability, deployment frequency, and collaboration quality. This helps you see which teams have the foundations to adopt AI effectively.

Step 3: Scale & Sustain

If the pilot shows gains, expand adoption. Establish governance for data use, bias monitoring, and security reviews, but also share success stories from high-performing teams to encourage healthy competition and learning.

Keep measuring long-term performance using DORA and VSM metrics, too. That’s how you make sure that each new wave of adoption continues to improve both speed and quality.

Future of Software Engineering with AI

AI will continue to shape how you work, but the biggest changes won’t come from tools alone. They will come from shifts in roles, skills demand, culture, and how AI becomes seamlessly embedded in your day-to-day workflows. Here are the areas where you’ll see the most impact.

AI and Software Engineering Jobs

More hybrid roles will emerge. You’ll need AI literacy the same way you need Git literacy today. Engineers who can guide and supervise AI will have a competitive edge.

“The future of AI is not about replacing humans, it’s about augmenting human capabilities.”

- Sundar Pichai, CEO of Google

Software Engineer Future Demand

Routine coding tasks will continue to decline in value. Demand will grow for system design, architecture, and AI oversight. If you don’t adapt, you risk being limited to low-value work.

Engineering Culture

Teams will focus on creativity, experimentation, ethical responsibility, and effectiveness. AI removes routine friction, which means culture shifts toward higher-value collaboration and accountability.

Tools Get Invisible

AI will no longer feel like an add-on. It will embed itself into IDEs, CI/CD pipelines, and project management tools. For you, this means less context switching and more seamless integration across the development process.

Turning AI Potential into Measurable Outcomes

AI changes how you code, plan, and collaborate, but the gains can come with tradeoffs. Some studies show clear improvements in productivity and quality, balanced against risks like higher churn and lower stability.

To capture the benefits without losing control, you need visibility across your engineering flow. Axify gives you that baseline and helps you track real impact.

Book a demo today to see how your teams can measure and improve with confidence.
