Are you struggling with inconsistent workflows, buggy releases, or delays in delivering features? These challenges can slow down your team and impact the quality of your software.

The good news is that following the right software engineering best practices can solve these problems and set your team up for success.

If you’re wondering what these practices are and how they can help, you’re in the right place. Let’s dive into the essentials that will streamline your processes and collaboration throughout your software development lifecycle.

How to Measure Your Software Engineering Best Practices

When measuring software engineering best practices, understanding what to track and how to use that information is critical. Let’s break down how you can effectively measure your team’s efforts and refine their practices:

You Need the Right Metrics

Metrics are a window into your team’s performance, offering tangible insights into what’s working and where improvement is needed. Frameworks like DORA (DevOps Research and Assessment) are invaluable for evaluating overall performance.

DORA, flow, and S.P.A.C.E. metrics do more than measure activity or output. Metrics from these frameworks also highlight the quality of your software development processes and the efficiency of your engineering team.

As a result of focusing on outcomes, your engineering teams can:

- Improve software quality

- Streamline development processes

- Deliver consistent value to stakeholders

Focus on the Continuous Delivery Journey

Continuous software delivery is not an overnight transformation but a gradual evolution that requires discipline, strategy, and adherence to best software development practices. It’s a journey of systematically improving deployment techniques to achieve seamless integration with the production environment while ensuring high-quality, reliable software delivery.

Automation, testing, and decreasing the size of the releases are the best steps to start with.

“We are uncovering and mitigating problems in our processes that impact quality while automating manual steps that take time away from development. This in turn gives us more time to focus on the product goals and additional delivery flow improvements. The outcomes of this are dramatic improvements to the team culture, engineering excellence, and business goals.”

- Minimum CD

11 Metrics to Track Your Software Engineering Best Practices

Use the following metrics to track progress, optimize processes, and ensure your software engineering best practices are aligned with your goals:

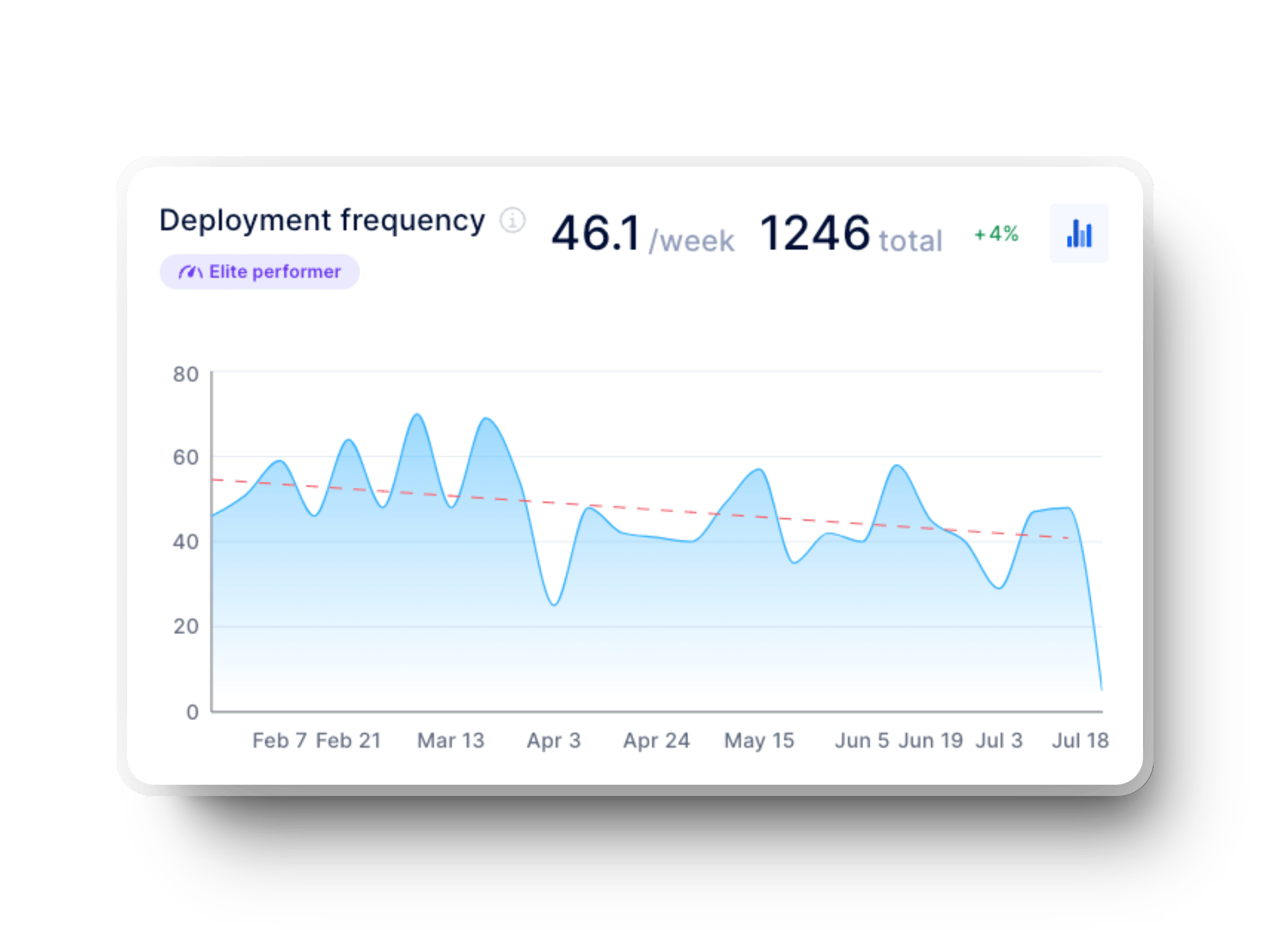

1. Deployment Frequency

Deployment frequency measures how regularly your team deploys updates to production. Think of it as a pulse check for your continuous deployment pipeline. The more frequently your team can deploy, the more agile and responsive they become, which makes it easier to deliver value to your users without unnecessary delays.
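As a rough illustration, deployment frequency can be computed from a list of production deployment timestamps. The sketch below is only one way to do it: the function name, the seven-day window, and the sample dates are assumptions made for the example, not part of any specific tool.

```python
from datetime import datetime, timedelta


def deployment_frequency(deploy_times: list[datetime], window_days: int = 7) -> float:
    """Average number of production deployments per `window_days` window."""
    if not deploy_times:
        return 0.0
    first, last = min(deploy_times), max(deploy_times)
    # Measure at least one full window so a short history is not overstated.
    span_days = max((last - first).days + 1, window_days)
    return len(deploy_times) * window_days / span_days


# Hypothetical data: six deployments spread over two weeks.
start = datetime(2024, 1, 1)
deploys = [start + timedelta(days=d) for d in (0, 2, 4, 7, 9, 13)]
print(deployment_frequency(deploys))  # 3.0 deployments per week
```

Tracking this number week over week matters more than any single value, since the trend shows whether releases are getting smaller and more frequent.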

2. Lead Time for Changes

Lead time for changes tracks how long it takes from when a code change is committed to when it’s live in production. If your team’s lead time for changes feels longer than it should, it might be worth revisiting your approach to QA, bug fixes, or even your coding standards. A shorter lead time for changes means you can adapt to user feedback faster, something every team strives for.
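A minimal sketch of the calculation, assuming you can export a commit timestamp and a production deployment timestamp for each change. The function name and sample data are hypothetical, and the median is used here only because it is less sensitive to a single slow change than the mean.

```python
from datetime import datetime
from statistics import median


def lead_time_hours(changes: list[tuple[datetime, datetime]]) -> float:
    """Median hours between a commit and its production deployment."""
    durations = [
        (deployed - committed).total_seconds() / 3600
        for committed, deployed in changes
    ]
    return median(durations)


# Hypothetical (committed, deployed) pairs.
changes = [
    (datetime(2024, 1, 1, 9), datetime(2024, 1, 1, 15)),   # 6 hours
    (datetime(2024, 1, 2, 10), datetime(2024, 1, 3, 10)),  # 24 hours
    (datetime(2024, 1, 3, 8), datetime(2024, 1, 3, 20)),   # 12 hours
]
print(lead_time_hours(changes))  # 12.0
```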

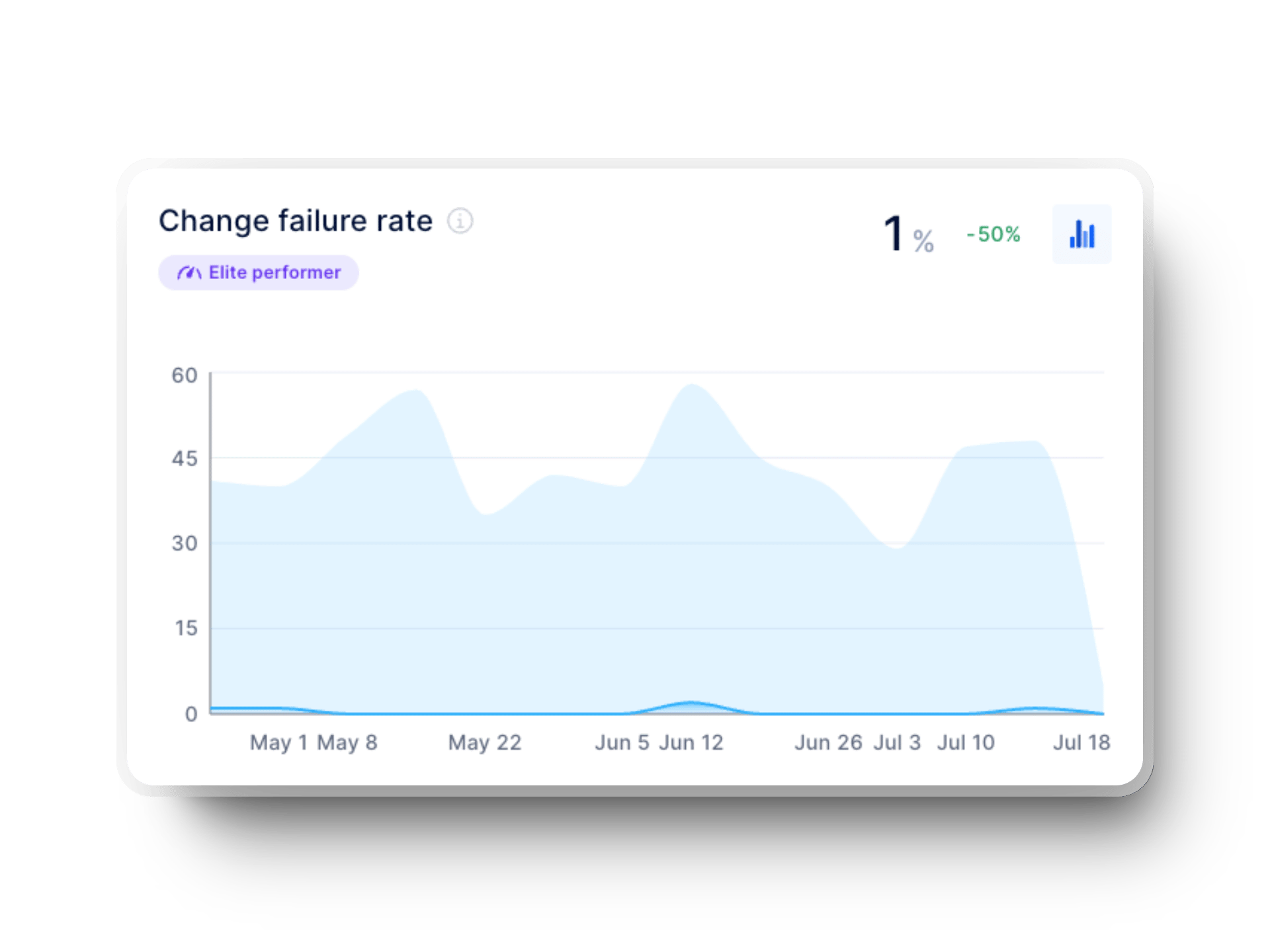

3. Change Failure Rate

Nobody wants deployments to fail, but tracking the percentage that does gives you critical insights into your software quality. A lower change failure rate shows that your team has effective types of testing like unit testing and integration testing in place. It’s also a sign that your focus on creating secure and reliable code is paying off.
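The percentage itself is simple arithmetic. Here is a small sketch, assuming each deployment has already been labeled as having caused a failure or not; how you decide that label (rollback, hotfix, incident) is up to your team and is not defined here.

```python
def change_failure_rate(deployment_failed: list[bool]) -> float:
    """Percentage of deployments that led to a failure in production.

    Each entry is True when that deployment caused a failure.
    """
    if not deployment_failed:
        return 0.0
    return 100 * sum(deployment_failed) / len(deployment_failed)


# Hypothetical history: 20 deployments, 2 of which failed.
history = [False] * 18 + [True] * 2
print(change_failure_rate(history))  # 10.0
```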

4. Failed Deployment Recovery Time (MTTR)

Failed deployment recovery time (formerly MTTR) measures how quickly your team can bounce back from incidents in production. Quick recoveries aren’t just about fixing problems; they minimize user disruption. If your recovery times are consistently short, you’re likely leveraging effective bug-tracking software and keeping your production environment resilient.
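As a sketch, recovery time can be averaged from incident records that store when the failure started and when service was restored. The function name and the two sample incidents below are assumptions for illustration only.

```python
from datetime import datetime, timedelta


def mean_recovery_time(incidents: list[tuple[datetime, datetime]]) -> timedelta:
    """Average time between a failure starting and service being restored."""
    if not incidents:
        raise ValueError("at least one incident is required")
    total = sum((restored - failed for failed, restored in incidents), timedelta())
    return total / len(incidents)


# Hypothetical (failed_at, restored_at) pairs: 30 minutes and 90 minutes.
incidents = [
    (datetime(2024, 2, 1, 10, 0), datetime(2024, 2, 1, 10, 30)),
    (datetime(2024, 2, 9, 14, 0), datetime(2024, 2, 9, 15, 30)),
]
print(mean_recovery_time(incidents))  # 1:00:00
```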


5. Availability or Up-Time

Uptime represents the percentage of time a system is fully operational under normal conditions. It’s a key indicator of the reliability of a system, solution, or infrastructure, and it usually describes a single machine or server. Availability, on the other hand, measures the time a system is accessible and functional, and it accounts for scheduled maintenance as well as uptime. Because it considers active operation alongside planned downtime, availability gives a more comprehensive view of service reliability, which is critical for remote teams.

That said, the availability and uptime of your systems speak volumes about their reliability. These metrics reflect how well your team handles load balancing, security vulnerabilities, and other potential issues. Users might not say it outright, but they always notice when things work.
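To make the distinction concrete, here is a sketch under one possible reading of the definitions above: uptime is measured against scheduled operating time only, while availability is measured against the whole period and treats planned maintenance as time the system was not accessible. Teams define these terms differently, so treat both formulas, the function names, and the sample numbers as assumptions.

```python
def uptime_pct(total_minutes: int, unplanned_down: int, planned_down: int) -> float:
    """Share of scheduled operating time (maintenance excluded) the system was up."""
    scheduled = total_minutes - planned_down
    return 100 * (scheduled - unplanned_down) / scheduled


def availability_pct(total_minutes: int, unplanned_down: int, planned_down: int) -> float:
    """Share of the whole period the system was accessible to users."""
    return 100 * (total_minutes - unplanned_down - planned_down) / total_minutes


# Hypothetical period: 10,000 minutes, 98 of unplanned outage, 200 of maintenance.
print(uptime_pct(10_000, 98, 200))        # 99.0
print(availability_pct(10_000, 98, 200))  # 97.02
```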

6. Cycle Time

Cycle time shows how long a task takes from “In Progress” to “Done.” If this number is high, it might signal bottlenecks in your development process. Shorter cycle times mean your team stays focused, manages priorities well, and moves features or fixes across the finish line efficiently.
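A small sketch of how cycle time might be derived from task records, assuming each task stores the date it entered “In Progress” and the date it reached “Done.” The task keys and dates are made up for the example.

```python
from datetime import date


def cycle_times(items: dict[str, tuple[date, date]]) -> dict[str, int]:
    """Days each item spent between "In Progress" and "Done"."""
    return {key: (done - started).days for key, (started, done) in items.items()}


# Hypothetical tasks mapped to (started, done) dates.
items = {
    "TASK-1": (date(2024, 3, 1), date(2024, 3, 4)),
    "TASK-2": (date(2024, 3, 2), date(2024, 3, 3)),
    "TASK-3": (date(2024, 3, 4), date(2024, 3, 12)),
}
times = cycle_times(items)
average = sum(times.values()) / len(times)
slowest = max(times, key=times.get)
print(average, slowest)  # 4.0 TASK-3
```

Looking at the slowest items alongside the average is a quick way to find where a bottleneck might be hiding.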

7. Time to Market

Time to market, also known as time to delivery in Axify, shows how quickly your team can turn ideas into working software products. Delivering new features or improving existing ones faster helps you stay ahead in the competitive world of software development. It’s all about taking those business goals and turning them into tangible solutions that your users can interact with and value.

8. Throughput

Throughput measures how much your team gets done in a sprint. In Axify, we measure throughput by looking at completed story items, not story points.

So, if you’ve ever wondered, “How much can we realistically accomplish in this cycle?” this is the metric to watch. Planning based on throughput helps avoid overcommitting and ensures you’re making steady, predictable progress.

Throughput also depends on cycle time: with the same amount of work in progress, a shorter cycle time leads to higher throughput.
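The link between throughput and cycle time can be sketched with Little’s Law, which states that average work in progress equals throughput multiplied by average cycle time. The law holds for long-run averages in a reasonably stable workflow, so the figures below are an estimate rather than a forecast, and the team numbers are hypothetical.

```python
def expected_throughput(avg_wip: float, avg_cycle_time_days: float) -> float:
    """Little's Law rearranged: throughput = WIP / cycle time (items per day)."""
    if avg_cycle_time_days <= 0:
        raise ValueError("cycle time must be positive")
    return avg_wip / avg_cycle_time_days


# Hypothetical team holding 6 items in progress on average.
before = expected_throughput(avg_wip=6, avg_cycle_time_days=4)  # 1.5 items per day
after = expected_throughput(avg_wip=6, avg_cycle_time_days=3)   # 2.0 items per day
print(before * 10, after * 10)  # 15.0 20.0 items in a 10-working-day sprint
```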

9. Pull Request (PR) Review Time

PR review time highlights how long it takes for the submitted code to be reviewed and merged. If reviews are dragging on, it can slow everything down. Thus, it’s crucial to streamline this process, boosting collaboration among dev teams and keeping your software projects moving forward.


A Medium article emphasizes maintaining shorter PR review times:

“As part of a larger process, pull requests should really be optimised to be completed within 24 hours. We should (be) optimising each part of the development process including the review time to be optimal in terms of flow. You don’t want to end up with work queued, effectively bottlenecked and waiting to be completed.”

- Jonathon Holloway, Analyse Your Pull Request Review Time
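One way to act on the 24-hour guideline quoted above is to flag pull requests that took longer than that to merge. This is a sketch only: the tuple layout, PR numbers, and timestamps are hypothetical, and real data would come from your Git hosting platform.

```python
from datetime import datetime, timedelta


def slow_reviews(
    prs: list[tuple[int, datetime, datetime]],
    limit: timedelta = timedelta(hours=24),
) -> list[int]:
    """Numbers of the pull requests whose open-to-merge time exceeded `limit`."""
    return [number for number, opened, merged in prs if merged - opened > limit]


# Hypothetical (number, opened_at, merged_at) records.
prs = [
    (101, datetime(2024, 5, 6, 9), datetime(2024, 5, 6, 16)),   # 7 hours
    (102, datetime(2024, 5, 6, 10), datetime(2024, 5, 8, 11)),  # 49 hours
    (103, datetime(2024, 5, 7, 14), datetime(2024, 5, 8, 9)),   # 19 hours
]
print(slow_reviews(prs))  # [102]
```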

10. Work in Progress (WIP)

WIP tracks how many tasks your team is juggling at once. Too much WIP can stretch your team thin and slow things down. The focus should be on keeping this metric manageable to minimize context switching so your teams can deliver on business goals effectively.
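A simple sketch of a WIP check, assuming tasks can be exported as (assignee, status) pairs. The limit of two items per person is an arbitrary example value, not a recommendation from this article.

```python
from collections import Counter


def over_wip_limit(tasks: list[tuple[str, str]], limit: int = 2) -> dict[str, int]:
    """People whose count of "In Progress" tasks exceeds `limit`."""
    wip = Counter(assignee for assignee, status in tasks if status == "In Progress")
    return {person: count for person, count in wip.items() if count > limit}


# Hypothetical board export.
tasks = [
    ("alice", "In Progress"),
    ("alice", "In Progress"),
    ("alice", "In Progress"),
    ("bob", "In Progress"),
    ("bob", "Done"),
]
print(over_wip_limit(tasks))  # {'alice': 3}
```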


11. Customer Satisfaction (CSAT)

CSAT reflects what really matters: how your users feel about your product. A boost in customer satisfaction scores tells you that your software engineering efforts are aligned with customer needs. It’s proof that the time you’re investing in quality software development standards and process improvements is making a real difference.
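CSAT is commonly reported as the percentage of survey responses that count as satisfied. The sketch below assumes a 1 to 5 rating scale where 4 and 5 count as satisfied, which is a widespread convention but not the only one; the ratings are invented for the example.

```python
def csat_score(ratings: list[int], satisfied_from: int = 4) -> float:
    """Percentage of ratings at or above `satisfied_from`."""
    if not ratings:
        raise ValueError("at least one rating is required")
    satisfied = sum(1 for rating in ratings if rating >= satisfied_from)
    return 100 * satisfied / len(ratings)


# Hypothetical survey responses on a 1-5 scale.
ratings = [5, 4, 3, 5, 2, 4, 5, 4]
print(csat_score(ratings))  # 75.0
```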

10 Best Software Engineering Practices To Improve Your Metrics

The following software engineering practices drive measurable improvements across your business metrics. We’ve tested them as well and know they work. Here’s what to consider:

1. Version Control and Branching Strategies

A solid version control system like Git, paired with trunk-based development, keeps your codebase organized and your workflow smooth. Instead of dealing with messy merge conflicts or hunting for errors in previous versions, your team can focus on what matters, i.e., writing clean, efficient code.

Besides saving development time, a clear branching strategy also makes sure everyone knows exactly where to commit their changes and how to collaborate effectively. When rollbacks are needed, they’re quick and painless, which keeps your momentum intact.

Metric impact: This approach leads to faster lead times as changes are integrated more frequently and without lengthy delays. It also results in smoother and faster deployments by ensuring incremental updates are stable, predictable, and less prone to errors.

2. Automated Testing

A strong software testing framework is your first defense against bugs and errors. With a mix of tests, including unit, integration, and end-to-end tests, your team can catch issues early and ensure the code is reliable before it moves further down the pipeline.

Automation takes this further by eliminating the need for repetitive manual testing. This gives your team more time to focus on building features rather than fixing last-minute surprises.

Metric impact: With this practice, you can reduce change failure rates by identifying potential problems before they hit production. Moreover, recovery times improve significantly since most issues are caught and resolved during development.
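To show what the smallest layer of that mix looks like, here is a sketch of a unit under test with two unit tests written as plain assert functions, a style that test runners such as pytest can discover. The `slugify` helper is a hypothetical example and does not come from any particular codebase.

```python
import re


def slugify(title: str) -> str:
    """Turn a title into a lowercase, hyphen-separated URL slug."""
    return re.sub(r"[^a-z0-9]+", "-", title.lower()).strip("-")


def test_slugify_replaces_spaces_and_punctuation():
    assert slugify("Hello, World!") == "hello-world"


def test_slugify_handles_empty_input():
    assert slugify("") == ""
```

Running tests like these on every commit is what lets a pipeline reject a faulty change before it reaches production.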

3. Continuous Integration/Continuous Deployment (CI/CD)

A well-implemented CI/CD pipeline keeps your development process efficient and predictable. The key is to automate steps to:

- Eliminate manual errors

- Speed up the flow of updates

- Ensure consistent releases

This setup lets your team focus on building high-quality, secure software rather than worrying about deployment challenges.

Tools like Axify simplify the process by providing actionable feedback that helps catch bottlenecks early in your process.

Metric impact: Consequently, your team can minimize manual mistakes, improve deployment frequency, and boost response times to system failures.

4. Code Reviews and Peer Collaboration

Peer review is a vital part of maintaining high-quality standards and provides an opportunity for your team to work better together. A clear and structured review process does more than fix bugs; it creates space for software developers to share knowledge, align with project goals, and strengthen the development lifecycle.

Metric impact: Pull requests move through faster, throughput improves, and collaboration feels more seamless. This keeps your workflow efficient and ensures your codebase stays consistent and reliable.

5. Documentation and Knowledge Sharing

Clear and up-to-date software design documentation keeps your team aligned and reduces time wasted on repetitive questions.

It serves as a single source of truth. That means new team members can onboard faster, developers can debug issues without unnecessary guesswork, and project managers can track progress more clearly.

Metric impact: Improved documentation increases team capacity, shortens lead times for changes, and ensures your team solves meaningful challenges rather than revisiting avoidable problems.

6. Infrastructure as Code (IaC)

Managing infrastructure can feel chaotic without a consistent approach, but treating it with the same precision as application code changes everything. Tools like Terraform, Ansible, and Pulumi let your team define infrastructure as code so that configurations remain consistent and repeatable.

Metric impact: This step leads to reduced MTTR, smoother scaling, and a more dependable system ready to handle whatever comes next.

7. Monitoring and Observability

Real-time monitoring and logging give your team the visibility they need to stay ahead of potential issues. Tools like Prometheus, Grafana, and ELK Stack make catching and fixing problems easier before they become larger disruptions.

Observability adds another layer of confidence by allowing your team to monitor, measure, and understand the internal states of a software system based on its external outputs.

Pro tip: Axify’s Value Stream Mapping tool shows bottlenecks in your workflow and, hence, where tools like these would have the most impact.

Metric impact: This approach reduces change failure rates and MTTR by providing teams with clear visibility into system performance and enabling quick detection of issues. Improved uptime is another benefit that ensures user expectations are met consistently.

8. Feature Flags and Progressive Delivery

Rolling out features doesn’t have to be all-or-nothing. Incremental releases provide a safer and smarter way to introduce updates without risking system stability. Feature flags make this possible by letting teams separate deployments from releases.

This means new features can be tested with smaller user groups first, which gives you valuable feedback before rolling them out widely.

Metric impact: Deployment frequency improves as updates are rolled out seamlessly. It also reduces risks during releases and lowers change failure rates. Furthermore, this process allows teams to validate features with real users before a full rollout.
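A percentage-based rollout is one common way to expose a feature to a smaller user group first. The sketch below hashes the flag name and user ID into a stable bucket from 0 to 99, so the same user always gets the same answer and raising the percentage only adds users. The function, flag name, and user IDs are hypothetical; dedicated feature flag services offer far more than this.

```python
import hashlib


def is_enabled(flag: str, user_id: str, rollout_percent: int) -> bool:
    """Return True when this user falls inside the flag's rollout percentage."""
    digest = hashlib.sha256(f"{flag}:{user_id}".encode()).hexdigest()
    bucket = int(digest, 16) % 100  # stable bucket in the range 0-99
    return bucket < rollout_percent


# Hypothetical flag rolled out to roughly 20% of 1,000 users.
users = [f"user-{i}" for i in range(1000)]
enabled = [user for user in users if is_enabled("new-checkout", user, 20)]
print(f"{len(enabled)} of {len(users)} users see the new feature")
```

Because the code is already deployed for everyone, turning the feature off is a matter of setting the percentage back to zero rather than rolling back a release.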

9. Refactoring and Technical Debt Management

Technical debt slows progress and creates unnecessary risks. The key is to address it regularly through refactoring, improving the internal structure of code without changing its external behavior.

This ensures your codebase remains clean, maintainable, and ready for future updates. Moreover, when technical debt is under control, the software systems engineering processes stay efficient, and software development projects move forward without interruptions.

Metric impact: Lead times for changes decrease, defect escape rates improve, and the entire software architecture becomes more resilient and scalable.

10. Team Retrospectives and Feedback Loops

Team retrospectives are a great way to step back and look at what’s working and what isn’t. They allow your team to reflect on workflows, tackle bottlenecks, and figure out how to work smarter, not harder.

These sessions are about creating a continuous improvement culture that keeps everyone moving in the same direction.

Metric impact: You’ll see smoother workflows, better team alignment, and noticeable throughput and deployment frequency improvements.

Aligning Best Practices with Metrics at Each Stage of Continuous Delivery

One effective strategy to get the most out of software engineering best practices is to align the metrics step-wise with continuous delivery.

Let’s see how:

1. Initial Stage

This stage focuses on establishing version control systems like Git so developers can work collaboratively without conflicts. In addition, pairing this with a simple CI/CD pipeline ensures a consistent workflow and prepares the team for rapid iterations.

2. Improvement Stage

Once the foundation is set, the improvement stage emphasizes automation to improve speed, reliability, and quality. Automating unit tests, integration tests, and deployments helps catch errors early. Pairing automation with test-driven development also reduces friction, so releases are frequent and stable.

3. Optimization Stage

At the optimization stage, metrics highlight inefficiencies in pull request approvals, testing cycles, or deployment pipelines. This part involves refining workflows, resolving bottlenecks, and improving resource allocation to keep everything running smoothly.

4. Continuous Stage

The foundation is strong, automation is in place, and bottlenecks have been addressed, but the work doesn’t stop there. Maintaining agility means continuously evaluating your processes to ensure they adapt to new challenges without compromising efficiency.

Note: While these stages provide a helpful framework for understanding the journey of continuous delivery, Agile methodologies often tackle them simultaneously.

How to Measure Progress

To measure progress, follow these tips:

- Track bottlenecks: Identifying delays is critical to improving the software development life cycle. Tools like Axify help your team detect where workflows are slowing down. You must address these bottlenecks for a smoother process and effective software project management.

- Measure success: Actionable metrics like DORA clarify what’s working and what needs attention. They give your teams a clear picture of the health of software development processes so they can identify opportunities for improvement.

- Iterate continuously: Progress isn’t about perfection but constant refinement. Regular retrospectives help teams reflect on their workflows and address areas where metrics have stagnated.

Tips to Keep Teams Focused on Metrics-Driven Best Practices

Some expert tips to keep your teams focused on metrics-driven best practices include:

1. Make Metrics Visible

Dashboards provide a clear view of team performance and keep everyone aligned. When crucial metrics are visible in an easy-to-understand way, teams can track progress and stay focused on improving the software development process.

2. Start Small

Improving everything at once isn’t realistic. Start with one metric, like reducing lead times or improving change failure rates, and build from there. Small wins create momentum and encourage your team to stay committed.

3. Create a Metrics-Driven Culture

Tie team goals directly to measurable outcomes. When development teams see how their work impacts metrics, it builds a sense of accountability and purpose.

4. Encourage Experimentation

Give teams the freedom to test new tools and refine workflows. Experimenting with solutions like automated testing or real-time monitoring helps uncover better ways to optimize the SDLC.

Scale Your Software Engineering Best Practices with Axify

Software engineering best practices go beyond ensuring clean and maintainable code. They provide the foundation for improving key metrics and achieving seamless, continuous delivery.

Whether through effective code reviews, real-time monitoring, or refining workflows during retrospectives, these practices keep your development team aligned with business objectives and committed to delivering high-quality software.

Ready to take the next step? Start tracking, analyzing, and improving your metrics with Axify. Book a demo now!
