Every year, I see more teams chase faster delivery, tighter AI integration, and higher performance metrics. Yet, speed typically hides fragility.

I’m Alexandre Walsh, VP of Engineering at Axify, and I’ve learned that automation doesn’t fix broken systems. Instead, it simply amplifies what’s already there. When your foundation is solid, AI helps you scale. When it’s not, the cracks widen fast.

In this article, you’ll explore the key shifts in the 2025 DORA data and see how AI reshapes delivery maturity. You’ll also learn how to align those insights with real performance improvement.

First, let’s clarify what the State of DevOps Report actually represents and why it still matters.

What Is the State of DevOps Report?

The State of DevOps report is DORA's annual research study that analyzes how engineering teams strengthen software delivery performance and organizational outcomes. Each year, it identifies the practices that drive measurable improvement in stability, throughput, and culture.

It also examines the current state of DevOps adoption, future trends, what top-performing teams are doing differently, and which bottlenecks still slow organizations down.

I also see it as a good lens through which to understand your engineering maturity.

You’ll find plenty of ideas to test how well your systems, feedback loops, and leadership choices hold up under pressure.

And, I’m not the only one who sees it this way. Others in the industry share a similar view on how DevOps must evolve:

“The annual State of DevOps Report reinforces the importance of learning how to deliver technology differently in today’s world - from how you utilize open source to implementing cloud to best practices around testing, change management, and security.”

- Bill Briggs, CTO at Deloitte Consulting LLP

Since 2024, the focus has shifted toward AI-assisted software development. This year’s report looks closely at how artificial intelligence changes delivery speed, reliability, and developer well-being.

This latest edition is named the "2025 DORA State of AI-Assisted Software Development Report" and it connects AI capabilities directly to long-term system health.

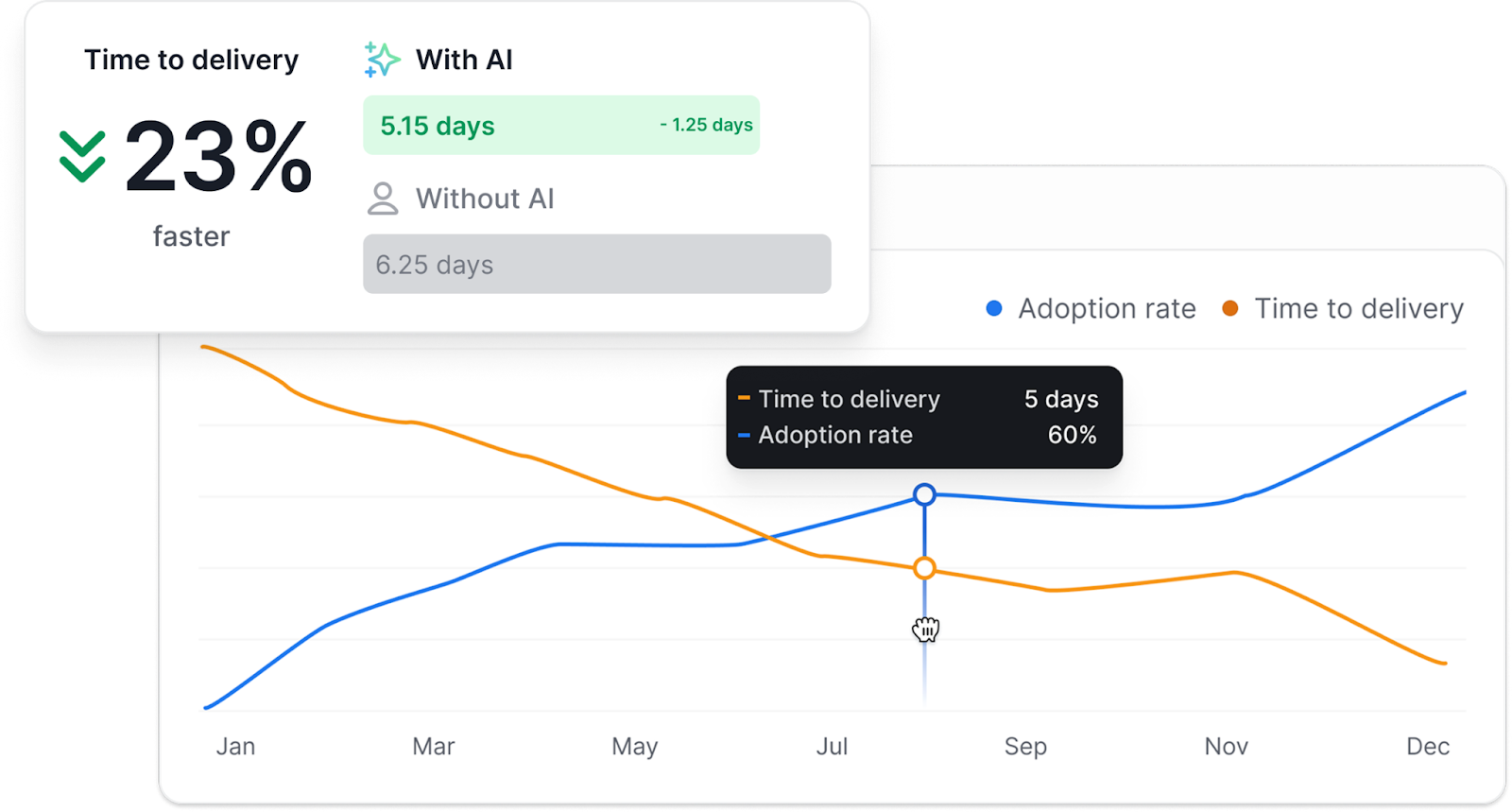

Pro tip: Try the Axify AI Impact feature to compare the effects of AI directly before and after implementation. It will help you see whether your adoption curve translates into lasting, system-level improvement.

Now that we’ve set the context, let’s look at who produces this research and why that matters for you.

Who Produces the State of DevOps Report?

The State of DevOps report is produced by DevOps Research & Assessment (DORA), now part of Google Cloud. Each year, it gathers insights from engineering teams worldwide to track how delivery practices evolve and influence organizational performance.

By the time of the 2024 State of DevOps report, the research program had captured feedback from more than 39,000 professionals over its history. The 2025 DORA report draws on nearly 5,000 new survey responses across industries and regions.

That scale gives the data rare credibility, because it reflects how delivery health shifts across real environments instead of theory.

Next, let’s see how the data is gathered and analyzed.

Methodology of the Report

The 2025 DORA Report draws on thousands of responses and over 100 hours of interviews to uncover how teams apply AI tools and automation in real software development environments. Like past editions, it combines global survey data with in-depth interviews, building on over a decade of cumulative research.

What’s new is DORA’s expanded focus on AI-assisted development and its use of cluster analysis to identify seven team archetypes. These new profiles replace the previous four-tier performance model. They reveal patterns between delivery practices and system outcomes.

With the foundation clear, we can now focus on what the 2025 findings reveal about performance shifts and system maturity.

Key Insights from the State of DevOps 2025

The 2025 DORA report marks a turning point for engineering teams. AI now plays a larger role across software delivery, but its impact depends on system maturity and cultural readiness.

As in all previous years, I appreciate that the current data doesn’t just describe change. It explains why some teams accelerate while others stall, by defining performance models and offering actionable insights.

For us at Axify, this year’s report validates how measurement and visibility remain at the heart of sustainable performance.

Here are the main insights that matter most to you.

AI Adoption is Near-Universal but Uneven in Maturity

Almost 90% of teams now use AI in their daily work, which is a 14% rise from last year. 65% report moderate to high reliance, mainly for code generation, debugging, test creation, and documentation.

In short, AI has become a normal part of development. But here’s the thing: experience matters. Teams that have used AI tools for over a year report more consistent software delivery throughput and less disruption.

By contrast, new adopters usually see instability because their feedback and validation systems lag behind the speed of automation. Because of that, roughly 30% of developers still show little or no trust in AI output.

This tells me maturity depends less on how much you adopt. It’s about how well you control and contextualize it within your system.

For another interpretation of the same findings, the AI Papers Podcast has published a summary of the report.

The Impact of AI Adoption (Gains and Tradeoffs)

DORA’s 2025 data supports a truth I’ve witnessed firsthand: if applied wisely in mature organizations, AI can produce measurable improvements. In high-performing organizations, it enhances throughput through faster deployment frequency and shorter lead time. But in weak systems, AI magnifies friction, inconsistency, and delivery risk.

That contrast is striking.

Teams with mature pipelines and automated testing achieve measurable gains in both speed and stability. Meanwhile, others see an uptick in rework, incident response delays, and cognitive overload.

The data also reveals a clear trade-off. AI improves throughput, yet it also raises the change failure rate where feedback loops can’t keep pace.

And there’s a human cost as well.

Burnout, friction, and context switching rise when adoption is rushed. In the end, sustainable improvement happens only when AI deployment follows strong platform engineering foundations.

Customization and Developer Experience

One insight that stands out is how adapting AI to existing developer environments drives a better developer experience. The report notes that AI must meet developers where they already work (inside IDEs, repositories, and workflows).

Teams that tailor AI to their existing internal platforms experience less friction and higher satisfaction.

So, simple configuration changes, such as prompt tuning or repository filtering, can lower your team’s cognitive load and improve focus. When AI setups are rigid or uniform across teams, they only accelerate old bottlenecks.

I’ve seen this play out frequently. Teams without workflow-specific configurations spend more time correcting AI’s mistakes than focusing on valuable work.

The best AI should feel almost invisible. It should be supportive, context-aware, and embedded seamlessly into your daily flow.

The DORA AI Capabilities Model

To make sense of these variations, DORA introduced the AI Capabilities Model, which outlines seven foundational practices that shape AI success.

These include a clear and communicated AI stance, a healthy data ecosystem, a user-centric focus, and quality internal platforms that keep systems stable as automation scales. The remaining capabilities (AI-accessible internal data, strong version control practices, and working in small batches) help determine whether AI becomes an asset or a liability.

The takeaway from this is simple.

AI adoption isn’t a tool-related problem but a systemic challenge. The strongest outcomes occur when:

- Technical and cultural systems align.

- Governance reinforces velocity.

- Platform maturity supports safe experimentation.

In my view, this model gives you a measurable framework for aligning automation with your organizational goals. It helps you focus on long-term performance instead of chasing short-term output gains.

Expanded Performance Measures

You move beyond the traditional four DORA metrics only when you start connecting delivery performance to team health and product outcomes, and that’s the real shift of 2025.

The latest State of AI-Assisted Software Development report highlights that while DORA remains essential, it no longer tells the whole story in an AI-driven workflow. To understand how AI truly impacts teams and users, organizations are now tracking seven complementary signals:

- Team performance – how effectively teams collaborate and adapt to AI-augmented workflows.

- Product performance – whether accelerated delivery translates into meaningful user value.

- Valuable work – the share of effort devoted to high-impact, mission-critical initiatives.

- Friction – bottlenecks introduced by tools, process complexity, or unclear AI integration.

- Burnout – the human cost of faster cycles and continuous AI interaction.

- Delivery instability – volatility in throughput or quality as AI scales.

- Individual effectiveness – the developer’s ability to harness AI responsibly and productively.

These signals reveal whether speed actually translates into resilience and value across the software delivery lifecycle. When you look closer, low friction paired with high valuable work often predicts cleaner handoffs, steadier delivery, and fewer priority resets. Those are the hallmarks of a mature, AI-empowered engineering culture.

This dynamic leads to faster releases and, as a result, brings value to users sooner.

But the opposite is also true.

Rising burnout paired with quick wins can signal inefficient processes or technical debt piling up behind the scenes. Similarly, delivery instability may expose weak control loops even when dashboards look green.

Put plainly, this extended metric set provides a balanced read on system stability, helping leaders anchor objectives, guide staffing decisions, and time platform investments with confidence.

Seven Team Profiles

In previous DORA reports, teams were categorized into four performance levels: Elite, High, Medium, and Low. This classification was based on the four DORA delivery metrics: deployment frequency, lead time for changes, change failure rate, and time to restore service.

This framework was useful for benchmarking DevOps maturity across the industry, but over time it began to oversimplify how modern teams operate. Many organizations found they didn’t fit neatly into a single tier, and “chasing elite status” sometimes led to superficial comparisons rather than meaningful improvement.

The 2025 DORA report shifts focus with seven new team profiles. This new framework assesses delivery performance, as well as cultural and human signals.

The point is to gain clarity on where you stand, so you can improve one pattern at a time. That’s why the new model emphasizes progress and context over competition.

The new seven profiles are:

- Foundational challenges

- Legacy bottleneck

- Constrained by process

- High impact–low cadence

- Stable and methodical

- Pragmatic performers

- Harmonious high-achievers

What matters is the mix of traits rather than the performance label.

For example, High Impact–Low Cadence teams show strong outcomes but uneven operations performance, a pattern that often hides thin on-call coverage or slow approval cycles.

Constrained by Process teams, by contrast, wrestle with excess coordination cost and brittle cultural practices, which erode their ability to adapt or scale AI practices effectively.

At the other end of the spectrum, Harmonious High-Achievers pair steady throughput with low burnout, balancing speed and sustainability through tight feedback loops and shared ownership.

And you can check out the other four teams in the 2025 DORA report – I won’t spoil the surprise for you.

So, once you map your teams, compare gaps across stability, friction, and valuable work. That context will help you target the smallest change with the biggest compound effect.

Value Stream Management as the Missing Link

AI may speed local work, but value is likely to stall if you lack a system-wide view. That’s why I advise using value stream management to connect throughput to outcomes.

You can start by mapping the idea-to-production process, including CI/CD pipelines, approvals, and release policies. Then correlate flow metrics (cycle time, throughput, WIP, etc.) against customer signals such as defect escape rate or adoption.
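As a rough illustration of that correlation step, here is a minimal Python sketch. The weekly figures and variable names are invented for the example, and Pearson correlation is only one possible way to relate a flow metric to a customer signal. A strong correlation over a handful of weeks suggests where to look; it does not prove cause and effect.

```python
from math import sqrt


def pearson(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation coefficient between two equally long series."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("series must have the same length (at least 2)")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant series")
    return cov / sqrt(var_x * var_y)


# Illustrative weekly figures only (assumed, not real data).
weekly_cycle_time_days = [6.0, 5.5, 5.0, 4.0, 3.5, 3.0]
weekly_defect_escape_rate = [0.04, 0.05, 0.05, 0.07, 0.08, 0.09]

r = pearson(weekly_cycle_time_days, weekly_defect_escape_rate)
print(f"cycle time vs. defect escape rate: r = {r:.2f}")
```

In this made-up series, cycle time falls while the defect escape rate climbs, so the coefficient comes out strongly negative. That is the kind of pattern worth investigating before celebrating the faster flow.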

Here’s why that’s important.

Let’s say your local coding speed increases but cycle time stays flat. That usually points to a constraint downstream, in testing, change control, or the release process. VSM makes those delays explicit and shows where automation practices pay off versus where human coordination remains the real throttle.
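To make that scenario concrete, here is a small Python sketch that compares per-stage time before and after an AI rollout. The stage names, the hours, and the helper functions are hypothetical, and the sketch assumes every work item records time for every stage.

```python
from statistics import median


def stage_medians(items: list[dict[str, float]]) -> dict[str, float]:
    """Median hours per stage; assumes every item reports every stage."""
    stages = items[0].keys()
    return {stage: median(item[stage] for item in items) for stage in stages}


def largest_slowdown(before: list[dict[str, float]],
                     after: list[dict[str, float]]) -> tuple[str, float]:
    """Return the stage whose median time grew the most, with the increase."""
    b, a = stage_medians(before), stage_medians(after)
    deltas = {stage: a[stage] - b[stage] for stage in b}
    stage = max(deltas, key=deltas.get)
    return stage, deltas[stage]


# Hypothetical hours per stage for three work items in each period.
before = [
    {"coding": 10, "review": 8, "testing": 12, "release": 4},
    {"coding": 12, "review": 6, "testing": 10, "release": 6},
    {"coding": 14, "review": 10, "testing": 14, "release": 2},
]
after = [
    {"coding": 5, "review": 9, "testing": 18, "release": 4},
    {"coding": 6, "review": 8, "testing": 20, "release": 5},
    {"coding": 4, "review": 10, "testing": 16, "release": 3},
]

stage, extra_hours = largest_slowdown(before, after)
print(f"largest slowdown: {stage} (+{extra_hours} h median)")
```

In this invented data, median coding time drops sharply while the sum of the stage medians stays the same, because testing absorbed the gain. That stage is where the next investment should go.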

In the end, you can use that picture to implement AI where it’s most needed: where queues form rather than where activity already flows. That’s how you turn local acceleration into business impact.

Platform Engineering as the Foundation

If you want AI to scale safely, you should invest in platform maturity first. From what I’ve seen, the pattern is consistent: self-service environments, paved paths, and strong guardrails stabilize outcomes under load.

In other words, when the platform is stable, AI becomes an accelerant rather than a risk multiplier.

To get there, treat your platform as a product with SLAs for environment creation, safe defaults, and golden paths for infrastructure as code. Then, bake in policy, secrets management, and runtime controls so teams can move as soon as changes are validated.

At the same time, you can pair this with baked-in observability and fast rollback to protect system stability as generative AI increases change volume.

And here’s where the real payoff shows: quieter incident queues, cleaner upgrades, and fewer late-night interventions.

So, track it. Measure how standardized pipelines shift failure modes from production rollbacks to earlier detection. That’s the difference between isolated wins and durable acceleration.
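One simple way to track that shift is to record where each failure was caught and compare the share caught before production across two periods. The sketch below is illustrative only: the stage labels, the sample data, and the choice of which stages count as "early" are all assumptions.

```python
from collections import Counter

# Assumption: these are the stages that count as catching a failure early.
EARLY_STAGES = {"ci", "staging"}


def early_detection_share(failure_stages: list[str]) -> float:
    """Fraction of failures caught in an early stage rather than production."""
    if not failure_stages:
        raise ValueError("need at least one recorded failure")
    counts = Counter(failure_stages)
    early = sum(counts[stage] for stage in EARLY_STAGES)
    return early / len(failure_stages)


# Hypothetical records: the stage where each failure was detected.
before_platform = ["production", "production", "ci", "staging", "production"]
after_platform = ["ci", "ci", "staging", "production", "ci"]

share_before = early_detection_share(before_platform)
share_after = early_detection_share(after_platform)
print(f"caught early: {share_before:.0%} -> {share_after:.0%}")
```

If the share caught early rises while the total number of failures holds steady or falls, the standardized pipeline is doing its job.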

How to Apply the Report + Axify’s Role

The 2025 findings are only valuable if you can operationalize them. At Axify, we’ve seen that data without context doesn’t drive change; it stalls it. Here’s how I suggest you turn the report’s insights into action that improves delivery stability, team health, and measurable business outcomes.

1. Benchmark Your Teams Against the New Measures

Start by moving beyond the four DORA metrics. They still help you assess delivery speed and quality, but they don’t capture the human and systemic dimensions of delivery.

In the 2025 DORA model, friction, burnout, and valuable work are equally important indicators of sustainable performance.

That’s where Axify helps.

We benchmark these expanded signals automatically by helping you track workflow stability, team satisfaction, and delivery flow across your repositories, issue trackers, and CI pipelines.

The advantage is perspective.

When you can visualize where friction builds up, or which teams are nearing burnout, you can act before it turns into churn or quality loss. This approach aligns with DORA’s seven archetypes.

Instead of chasing “elite” status, you understand where your teams fit and which small, measurable improvements matter most. Now, data becomes diagnostic rather than decorative.

2. Interpret AI Adoption Critically

AI adoption is, indeed, widespread, but the real differentiator is how you deploy it. I often remind teams that AI can scale both strength and dysfunction. That’s because rolling it out without strong governance or reliable data pipelines creates instability faster than it creates efficiency.

At Axify, we’ve learned this firsthand.

We’ve seen the best results when AI adoption happens inside a well-governed ecosystem, meaning one where validation, review, and observability are already in place. In those setups, the system absorbs change instead of increasing risk.

Our dashboards make that balance visible. You can see when throughput increases, but so does time spent on bug fixes or reopened work. Also, you can track whether faster releases correlate with more failed deployments or longer recovery times.

Our failed deployment recovery time view (a DORA metric) can help you see that clearly.

Correlating AI-driven output with quality, flow, and burnout allows you to see whether the technology improves real productivity or just accelerates chaos. Ultimately, the goal is to sustain velocity without eroding trust or reliability.
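The same check can be sketched outside any dashboard. The Python example below flags sprints where throughput and rework rate rose together compared with the previous sprint. The `Sprint` record, its field names, and the numbers are hypothetical, and "reopened items divided by completed items" is just one possible definition of rework.

```python
from dataclasses import dataclass


@dataclass
class Sprint:
    # Hypothetical record shape; adapt it to your issue tracker export.
    name: str
    items_done: int
    items_reopened: int


def rework_rate(sprint: Sprint) -> float:
    """Reopened items as a fraction of completed items."""
    return sprint.items_reopened / sprint.items_done if sprint.items_done else 0.0


def fragile_gains(sprints: list[Sprint]) -> list[str]:
    """Names of sprints where throughput AND rework rate both rose."""
    flagged = []
    for prev, cur in zip(sprints, sprints[1:]):
        faster = cur.items_done > prev.items_done
        more_rework = rework_rate(cur) > rework_rate(prev)
        if faster and more_rework:
            flagged.append(cur.name)
    return flagged


# Illustrative numbers only.
sprints = [
    Sprint("S1", items_done=20, items_reopened=2),
    Sprint("S2", items_done=26, items_reopened=5),
    Sprint("S3", items_done=30, items_reopened=4),
    Sprint("S4", items_done=28, items_reopened=6),
]

print(fragile_gains(sprints))
```

A flagged sprint is not proof of a problem. It is a prompt to check whether the extra output came with weaker review or validation.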

3. Customize for Context

The report is clear that general AI strategies don’t work for everyone. Every team has different workflows, toolchains, and maturity levels.

That’s why I’ve learned that forcing uniform adoption usually increases frustration rather than results. Instead, the smarter approach is to help your teams configure AI tools in their environment. IDE integrations, repository configurations, and on-demand workflows should all reflect how they already work.

When AI fits the team’s rhythm, it reduces friction and strengthens flow. But when it’s imposed, it magnifies bottlenecks. So, context is everything, and customization is how you protect both speed and sustainability.

4. Build Continuous Feedback Loops

Every improvement system we’ve built starts with a cycle: measure → experiment → iterate. That’s the discipline DORA supports, and it’s where Axify helps teams create a habit. Because without that structure, improvement stays reactive rather than intentional.

To make this process tangible, we visualize the loop through dashboards that track flow, stability, and human signals like culture and collaboration. With that visibility, you start seeing cause and effect after every change.

I typically suggest starting small, like a workflow tweak, a new deployment policy, or a shift in review cadence. The key is consistency, so you'll need to measure outcomes instead of intentions.

And once something works, amplify it. When it doesn’t, learn and pivot.
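To show what "measure outcomes" can look like for a single experiment, here is a minimal Python sketch that compares median cycle time before and after a change. The sample values are invented, and the choice of cycle time and median as the outcome is an assumption. In practice you would pair it with a stability metric so that a faster number does not hide more failures.

```python
from statistics import median


def relative_change(before: list[float], after: list[float]) -> float:
    """Relative change in the median, e.g. -0.2 means 20% lower."""
    if not before or not after:
        raise ValueError("both periods need at least one observation")
    baseline = median(before)
    if baseline == 0:
        raise ValueError("baseline median is zero; relative change is undefined")
    return (median(after) - baseline) / baseline


# Hypothetical cycle times in days, before and after a review-cadence change.
cycle_before = [5.0, 6.0, 4.0, 7.0, 5.0]
cycle_after = [4.0, 3.0, 5.0, 4.0, 4.0]

change = relative_change(cycle_before, cycle_after)
print(f"median cycle time changed by {change:+.0%}")
```

With samples this small, treat the result as a signal to keep watching rather than as a conclusion.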

5. Tie Performance to Business Outcomes

It’s easy to celebrate AI productivity gains such as shorter cycle times and faster commits. But unless they translate into delivering value faster to your users, they’re just motion without progress.

What you really need is traceability between engineering performance and business outcomes. This is where value stream management becomes important again.

At Axify, we help teams connect software delivery data with outcomes that matter to them. Our value stream mapping dashboard visualizes flow and performance trends. This shows where increased speed drives progress and where it may add friction. For instance, faster deployments can sometimes expose weak quality gates or delayed feedback loops.

Also, you can combine Axify’s delivery metrics with your own business KPIs or team productivity data to understand how process changes translate into real impact. That’s how you tell whether acceleration is improving outcomes or just shifting the bottleneck elsewhere.

The organizations that outperform focus on sustained, measurable improvement in the outcomes that matter most.

6. Invest in Platform Engineering as a Backbone

AI and DevOps scale only when the foundation supports them. Without a stable, governed, and self-service infrastructure, you’re just multiplying risk.

Platform engineering creates that foundation. It standardizes environments, enforces compliance, and gives developers the autonomy to build without bottlenecks.

I’ve seen teams reduce cognitive load and incident frequency just by introducing a mature internal platform that manages automation, access, and observability. Axify integrates seamlessly into this ecosystem by collecting data from your CI/CD pipelines, repositories, and issue trackers to visualize delivery performance in real time.

This helps you see how platform improvements translate into greater delivery predictability and stability. When your systems provide consistent data and fast feedback, AI initiatives have the structure they need to scale without adding risk.

Treat the platform as a product by investing in it like you would in a customer-facing service. Because that’s the layer on which every other improvement depends.

DORA State of DevOps 2025 vs. State of DevOps 2024

The 2025 DORA findings mark a turning point. While 2024 focused on efficiency and throughput, with some AI insights, this year’s model focuses more on AI. As I explained above, it also expands the definition of performance to include the human and systemic conditions that sustain it.

Here’s how the research evolved and what those changes mean for how you interpret your own engineering data.

Shift in Performance Models

In 2024, DORA measured success through a linear hierarchy: Elite, High, Medium, and Low performers. Each group was defined by the four core metrics: lead time for changes, deployment frequency, change failure rate, and recovery time.

It was a simple model where faster and more stable teams ranked higher. But it captured only one part of the story.

In 2025, DORA moved away from ranking teams altogether. The new framework introduces seven team archetypes that blend delivery performance with human factors such as burnout, friction, and perceived value. The goal is to describe patterns of system health, not to assign status.

I think this change matters because it reframes improvement: the focus moves from chasing “elite” status to addressing the specific constraints that limit progress in your context.

Expanded Measures of Performance

The 2024 report hinted at human outcomes (like burnout and satisfaction) but treated them as external to the four performance tiers.

The 2025 edition integrates them directly into the current seven team archetypes. Teams are now evaluated across technical and human dimensions. This includes team effectiveness, product outcomes, valuable work, friction, and stability.

This shift recognizes that throughput gains mean little if they come at the expense of sustainable performance and the people in your team.

As I see it, this is where modern engineering management is heading. You can’t separate system design from human experience anymore.

The AI Angle: From Chaos to Amplification

In 2024, AI was treated as a productivity lever, but the data showed some unintended consequences.

For example, teams using AI generated code faster, but also had more errors. The conclusion we reached was that AI created chaos in immature systems.

However, the 2025 results tell a more refined story.

In foundational or legacy-constrained clusters, where instability, burnout, and friction dominate, AI tends to amplify chaos because it speeds up unplanned work without improving value or reliability.

Teams constrained by process experience a different trap: their systems are stable, but bureaucratic; AI accelerates tasks, yet the surrounding inertia cancels its benefits.

By contrast, high-impact and pragmatic performers use AI as leverage, applying it to reduce toil and enhance flow across stable pipelines.

Stable and methodical teams integrate AI deliberately, treating it as a quality and consistency multiplier, while harmonious high-achievers show what real synergy looks like. These teams are using AI to support creativity, automation, and sustainable delivery without increasing burnout.

The insight is clear: AI doesn’t divide teams by technology access but by system health and cultural coherence.

Scale Continuous Improvement with Axify

AI is the new baseline, but the value it creates depends entirely on the systems that support it. In my experience, that’s where many teams get caught off guard. I’ve seen groups accelerate delivery with AI, only to uncover new bottlenecks in testing, coordination, or platform maturity.

That’s exactly where Axify comes in.

Axify acts as the continuous improvement layer that connects delivery data to organizational outcomes. With value stream mapping built into our platform, you gain visibility into how work actually flows through your system and where AI is making a measurable difference.

More importantly, we help you track both throughput and stability in line with the latest DORA frameworks. This ensures that speed doesn’t come at the expense of stability.

Axify also surfaces early signals of bottlenecks before they escalate into costly slowdowns. Lasting improvement depends on proactive, sustainable systems, not reactive fixes.

Book a demo to see how Axify helps you convert DORA insights into faster flow, stronger stability, and real business impact.

FAQ

What are the four DORA metrics?

The four DORA metrics are lead time for changes, deployment frequency, change failure rate, and time to restore service (now known as failed deployment recovery time). Together, they measure how efficiently and reliably your teams deliver software.
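For readers who want to see the arithmetic, here is a minimal Python sketch that computes the four metrics from a list of deployment records. The `Deployment` shape, its field names, and the use of medians are assumptions made for this example; they are not DORA’s official calculation or the way any particular tool implements it.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median
from typing import Optional


@dataclass
class Deployment:
    # Hypothetical record shape; adapt it to what your CI/CD tool exports.
    commit_time: datetime                      # when the change was committed
    deploy_time: datetime                      # when it reached production
    failed: bool = False                       # did it cause a failure?
    restored_time: Optional[datetime] = None   # when service was restored


def dora_metrics(deployments: list[Deployment], period_days: int) -> dict:
    """Compute the four delivery metrics over one reporting period."""
    if not deployments:
        raise ValueError("need at least one deployment")
    lead_times = [d.deploy_time - d.commit_time for d in deployments]
    failures = [d for d in deployments if d.failed]
    restores = [d.restored_time - d.deploy_time
                for d in failures if d.restored_time is not None]
    return {
        "deployment_frequency_per_day": len(deployments) / period_days,
        "median_lead_time_hours":
            median(lt.total_seconds() for lt in lead_times) / 3600,
        "change_failure_rate": len(failures) / len(deployments),
        "median_time_to_restore_hours":
            median(r.total_seconds() for r in restores) / 3600
            if restores else None,
    }


# Illustrative data: four deployments over two days, one of which failed.
t0 = datetime(2025, 1, 1, 9, 0)
sample_deployments = [
    Deployment(t0, t0 + timedelta(hours=2)),
    Deployment(t0, t0 + timedelta(hours=4), failed=True,
               restored_time=t0 + timedelta(hours=5)),
    Deployment(t0, t0 + timedelta(hours=6)),
    Deployment(t0, t0 + timedelta(hours=8)),
]
metrics = dora_metrics(sample_deployments, period_days=2)
print(metrics)
```

The first two values describe throughput and the last two describe stability, which is why they are best read together rather than one at a time.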

What is the State of DevOps Report?

The State of DevOps report is DORA’s annual research study on software delivery performance. It combines global survey data with statistical analysis to identify what drives high-performing engineering organizations.

Is DevOps still relevant in 2025?

Yes, but its meaning has evolved. DevOps today extends beyond pipelines and automation to systems thinking. This includes how teams, platforms, and AI interact to improve flow and value delivery. The fundamentals remain the same, but the scale and complexity have expanded.

What’s new in DevOps 2025?

The 2025 shift is about integration. DORA replaced the old elite-to-low model with seven archetypes that combine technical and human performance factors. It also introduces the AI Capabilities Model. It shows how data, platforms, and governance determine whether AI accelerates or destabilizes your delivery process.

How to measure DevOps performance?

You measure DevOps performance by combining delivery metrics with human indicators. In Axify, we bring these together by tracking DORA metrics alongside team health insights and flow metrics. This lets you see how fast your teams move and how sustainably they perform over a longer period.
