AI adoption can show up in your day-to-day engineering work long before user-facing or business results do. Tooling decisions surface first in cycle time, review load, and delivery confidence.

Early tool rollouts can increase throughput while PR queues grow, security reviews slow, and rollback risk becomes harder to judge. That gap between activity and outcomes is where trust erodes and budgets get questioned.

This article focuses on AI adoption as it actually happens in delivery teams. It explains how to recognize and measure real adoption, not just intent or strategy.

What Is AI Adoption?

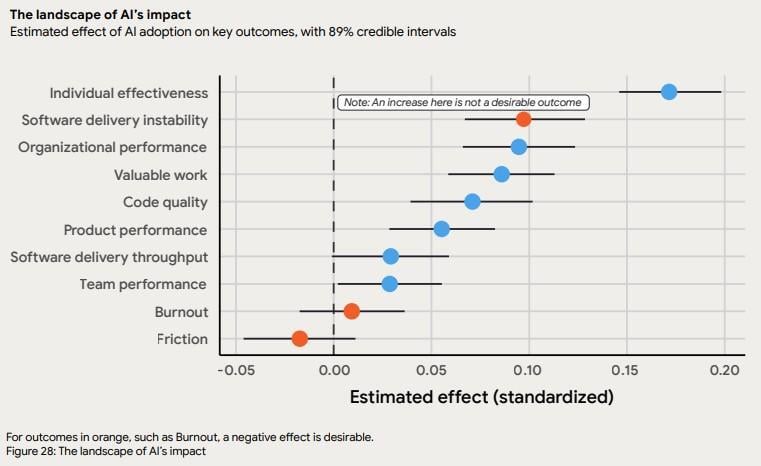

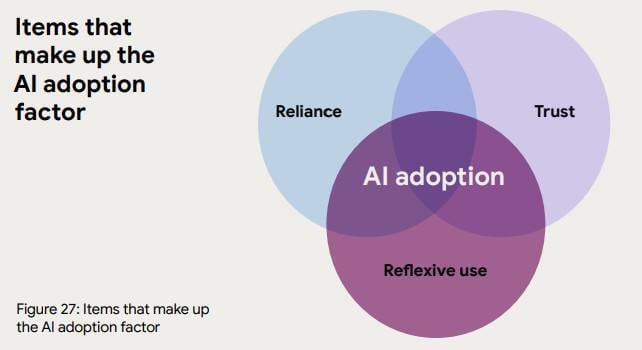

AI adoption describes the degree to which AI is integrated into day-to-day delivery workflows and decision-making. The State of AI-assisted Software Development 2025 report measures AI adoption as a composite of three indicators: reflexive use, reliance, and trust.

- Trust: Measures whether engineers consider AI outputs reliable and useful enough for real delivery work.

- Reflexive use: Tracks whether engineers reach for AI by default in their workflow.

- Reliance: Measures the degree to which your team depends on AI to do their work effectively. For example, it shows whether work slows down, quality or performance drop, or certain tasks are less feasible when AI is unavailable.
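As a rough illustration of how a composite of these three indicators could be computed, the sketch below averages three survey scores. The 1-to-5 scale, the equal weighting, and the names `AdoptionSurvey` and `composite_adoption_score` are assumptions made for this example; the report's actual scoring method is not reproduced here.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class AdoptionSurvey:
    """One engineer's self-reported scores on an assumed 1-5 scale."""
    reflexive_use: float
    reliance: float
    trust: float


def composite_adoption_score(responses: list[AdoptionSurvey]) -> float:
    """Average the three indicators per respondent, then across the team.

    Equal weighting is an assumption, not the report's method.
    """
    if not responses:
        raise ValueError("at least one survey response is required")
    per_person = [mean([r.reflexive_use, r.reliance, r.trust]) for r in responses]
    return round(mean(per_person), 2)


# Hypothetical responses from a two-person team.
team = [
    AdoptionSurvey(reflexive_use=4, reliance=3, trust=2),
    AdoptionSurvey(reflexive_use=5, reliance=4, trust=3),
]
print(composite_adoption_score(team))
```

A single number like this hides which indicator is lagging, so it is usually worth reporting the three scores side by side as well.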

At an organizational level, adoption deepens as generative AI tools move from ad hoc use into repeatable workflow steps. Once AI becomes part of repeatable delivery, clear ownership, policy coverage, and rollback paths have to be defined explicitly.

The experimentation stage looks different. Individual engineers try tools, usage spikes for a week or two, but results are likely to stay uneven. At scale, well-executed adoption changes how work moves through the delivery pipeline:

- PRs include AI-assisted diffs that reviewers expect.

- Tests generated by models run in CI as soon as validation passes.

- Security reviews know where prompts and code flow, and incidents have clear audit trails.

This transition from ad hoc use to predictable workflow integration is the AI adoption process leaders actually manage. It affects review expectations, failure modes, and how confidently teams ship changes.

Here, you can see how AI impacts an engineer's work:

Source: State of AI-assisted Software Development 2025


Next, let's see what adoption improves.

Benefits of AI Adoption

AI is an amplifier, according to the DORA 2025 report.

“AI’s primary role in software development is that of an amplifier. It magnifies the strengths of high performing organizations and the dysfunctions of struggling ones.”

(DORA, State of AI-assisted Software Development, 2025)

In other words, AI adoption supports how work already flows through different stages. Strong review habits, clear ownership, and stable pipelines tend to improve. Weak processes tend to fail faster.

Here are the tangible benefits leaders see when AI is properly embedded into daily engineering work on top of strong, well-established workflows:

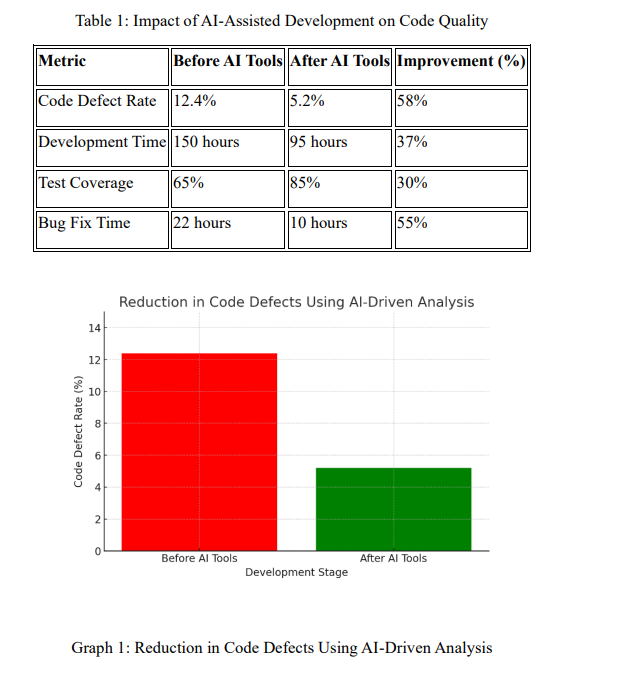

- Improved customer experience: Delivery improvements lead to customer-facing gains. When teams shorten feedback loops and reduce defects reaching production, product quality improves. Indeed, one empirical study in JoCAAA found defect rates dropped from 12.4% to 5.2% (a 58% reduction) after AI-assisted development tools were introduced, thus improving reliability before release.

- Getting ahead of competitors: Organizations tend to see stronger returns when AI adoption is integrated into established delivery practices rather than layered on top of unstable workflows. Research shows that teams with higher AI adoption maturity report significantly higher returns from data and AI initiatives than teams still experimenting. In practice, the difference usually comes from how well AI is embedded into existing delivery standards, review processes, and release discipline.

- Profitability: Margin impact follows when engineering time isn’t spent on repeat corrections and manual handoffs. Reduced rework, fewer incidents, and shorter recovery windows create cost control that finance teams can trace back to delivery changes.

- Agility and scalability: Teams gain flexibility when routine steps no longer block releases. The same study in JoCAAA quoted above shows that average project completion time decreased from 150 hours to 95 hours (≈37% improvement) after introducing AI-assisted coding and debugging. Don’t cut corners on testing and reviews to improve throughput, though. AI-driven speed only counts as agility if it doesn’t come at the expense of quality, reliability, or recovery.

- Improved business operations: When engineering output becomes more predictable, other teams can plan their work more easily. Product, support, and operations don’t have to deal with constant rework or last-minute changes.

- Workers become more efficient: Routine AI usage can increase efficiency in teams with solid workflows and Agile practices. The State of Enterprise AI reports time savings of roughly 40-60 minutes per day, with heavy users exceeding 10 hours per week. That time usually shifts into reviews, mentoring, and backlog cleanup.
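The percentage figures quoted from the JoCAAA study in the list above can be checked with a short calculation. This is only a sketch of the arithmetic; the helper name `relative_reduction` is made up for this example.

```python
def relative_reduction(before: float, after: float) -> float:
    """Return the relative reduction from `before` to `after` as a percentage."""
    if before <= 0:
        raise ValueError("`before` must be positive")
    return (before - after) / before * 100


defect_drop = relative_reduction(12.4, 5.2)  # defect rate, in percent
time_drop = relative_reduction(150, 95)      # project completion time, in hours

print(f"defect rate reduction: {defect_drop:.0f}%")
print(f"completion time reduction: {time_drop:.0f}%")
```

Both results round to the values cited in the text: 58% for defect rate and 37% for completion time.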

Moving on, let's see how adoption evolves across organizations.

AI Adoption Stages in Engineering Organizations

From our experience, AI adoption rarely moves in a straight line across teams. Adoption usually spreads unevenly, shaped by delivery pressure, ownership gaps, and tooling choices.

That brings us to the first stage.

Awareness and Experimentation

Early activity starts with individual engineers testing tools on their own.

According to Stack Overflow’s 2025 Developer Survey, 84% of developers report using or planning to use AI tools in their development workflow, and 51% use these tools daily. Sometimes, this decision to adopt AI isn’t made at an organizational level, but rather at the developer level.

In this case, local scripts, editors, and prompts appear in isolation, usually outside shared standards.

Without common rules, usage varies by repository and role. Review load increases as output differs across contributors, and security teams lack visibility into where generated code or prompts move through the system.

Pilot Programs and Tool Sprawl

Pilots typically follow once leadership introduces structure. Multiple tools are rolled out in parallel to cover different use cases, but comparisons remain shallow. Metrics often focus on usage volume rather than changes in delivery behavior.

As a result, teams struggle to distinguish tools that reduce rework from those that introduce friction. Tool sprawl becomes harder to unwind as licenses, workflows, and habits spread.

Operational Adoption

Operational adoption begins once AI is integrated into daily delivery workflows. Pull requests assume AI-assisted changes, and tests generated by models run in CI once validation completes.

Usage patterns stabilize, making exceptions easier to spot. Security controls, ownership, and rollback paths start aligning with how work actually moves through the pipeline.

Scaled Adoption

Scaled adoption reflects deliberate AI readiness and adoption across teams. Standards stay consistent, behavior gets tracked over time, and decisions rely on observed workflow changes.

At this stage, AI usage is no longer ad hoc. Expectations are clear, impact is measurable, and teams are accountable for how AI affects delivery outcomes.

Up next, we'll go over what actually drives engineer adoption.

AI Tool Adoption by Engineers: What Actually Drives Usage

AI tool adoption increases when tools make day-to-day delivery work easier to execute. Usage rises when, after a tool is introduced:

- Pull request reviews require less back-and-forth.

- CI behavior remains predictable.

- Engineers spend less time context switching during active delivery.

Friction appears just as quickly when tools introduce additional prompts, dashboards, or approval steps that slow down merges or increase review effort.

Remember: This is why AI tool adoption by engineers tends to correlate more strongly with workflow fit than with feature depth. Capabilities matter less than whether a tool integrates cleanly into existing delivery paths.

Leadership intent still plays a role, but intent alone does not change behavior.

Usage stabilizes when:

- Standards align with how teams already work, and

- Expectations remain consistent across repositories and roles

Trust develops when:

- AI-generated output behaves predictably under deadline pressure.

- Common failure modes are visible before they result in incidents.

Transparency and reversibility complete the feedback loop.

Adoption declines when teams lack visibility into where generated code flows, how prompts are stored, or what security issues surface during reviews and audits.

Confidence increases when rollback paths are clearly defined and training focuses on review quality rather than tooling mechanics.

For a practical example, Matt McClernan’s short talk shows what AI adoption looks like inside engineering teams:

Next, where coding tools help and where they hurt.

AI Coding Tools Adoption: Opportunities and Risks

AI coding tools tend to be adopted fastest where delivery pressure is highest, because teams look for ways to reduce manual effort under tight deadlines. Let’s say you adopt an AI agent, and code moves more quickly through reviews and validation without increasing rollback or incident risk. In this case, you are more likely to increase its usage (and the opposite is also true).

That being said, as adoption spreads across teams, leaders tend to see a consistent set of opportunities and risks.

Common AI Coding Tool Use Cases

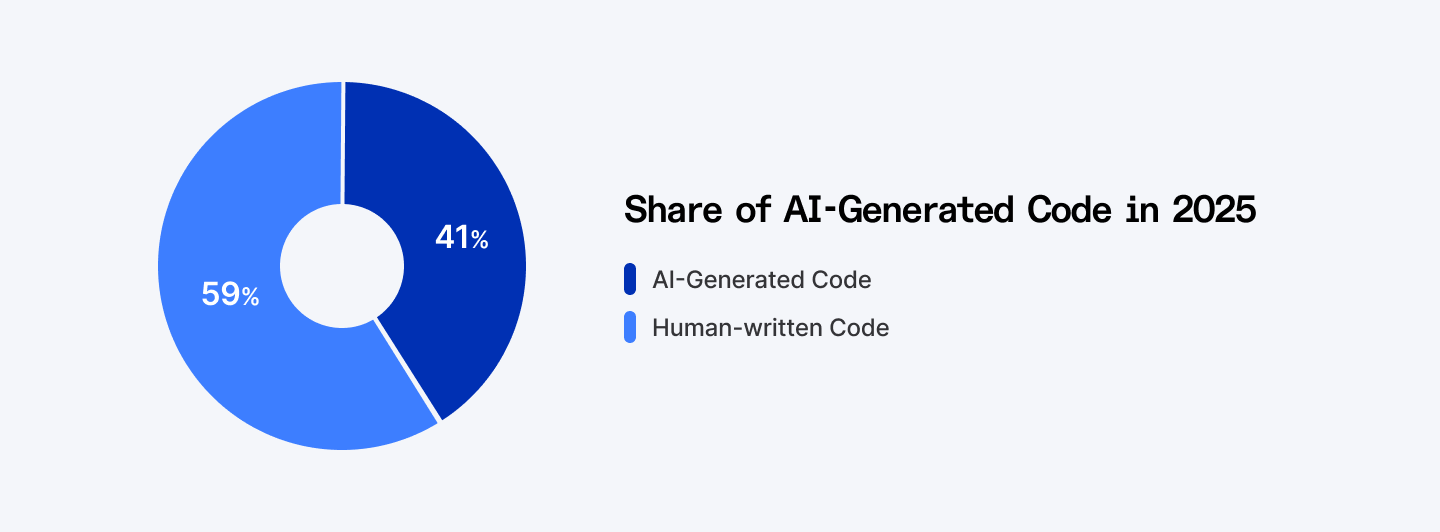

Companies are most likely to adopt AI coding tools for code generation and refactoring because they remove manual steps directly from the development workflow. This trend is already visible in data: surveys indicate that about 41% of code flowing through development workflows is now AI-generated.

That level of AI-generated code moves review work earlier in the development lifecycle, focusing on validating intent, correctness, and risk before merge rather than fixing issues after the fact.

As a result, teams tighten expectations around diff quality, test relevance, and clear ownership of AI-assisted changes.

AI agents for test generation tend to be adopted early, too, for the same reasons.

Teams use AI to reduce the manual effort of writing tests, which can increase coverage more quickly. However, failures still require human judgment to determine which tests protect intended behavior and which introduce noise.

Lastly, documentation and onboarding support reduce ramp-up time, so AI tools can fit well here. Natural language processing systems can summarize repositories, pull requests, and decisions so new engineers can review context without having to reconstruct it from scattered commits, PR threads, and tickets.

Risks Specific to Engineering Teams

First, risk grows when unjustified over-reliance on AI tools leads to skill atrophy. This happens when engineers stop reasoning through changes before merge.

A second risk is that some teams may lean heavily on AI tools while others avoid them, leading to inconsistent usage patterns. This complicates cross-team reviews and shared libraries.

Lastly, hidden quality and security issues present another sharp edge.

One data point makes this clear. According to Veracode, roughly 45% of AI-generated code contains security flaws.

As such, risk can be pushed into review queues and incident response, which shapes the real-world impact of artificial intelligence on delivery reliability.

Criteria Companies Use to Evaluate and Adopt AI Tools

Tool evaluation fails when decisions focus on feature lists instead of how work actually moves through PRs, CI pipelines, and reviews. For that reason, the criteria companies use to evaluate and adopt AI tools tend to cluster around technical fit, workflow impact, and risk exposure.

These criteria map directly to how changes move through pull requests, validation pipelines, and production reviews.

Technical Criteria

Technical fit determines whether a tool holds up under real production constraints. At this level, the focus stays narrow and practical. Here are the core technical checks leaders rely on:

- Integration with existing dev tooling: Compatibility with repositories, CI pipelines, and review systems matters because fragmented integrations introduce manual steps. Gaps here increase merge delays and operational overhead.

- Model transparency and data handling: Engineering leaders need to know where generated code comes from, whether prompts are stored, and how long logs are retained. During a production issue, teams must be able to trace whether an AI-generated change contributed to the defect. If the tool does not expose that information, root-cause analysis becomes slower and policy enforcement becomes inconsistent.

- Performance and reliability: Latency, failure modes, and fallback behavior matter during peak load. Unclear limits surface as instability during reviews and deployments.

Workflow and Adoption Criteria

Even technically sound tools fail when they introduce friction into daily delivery work. For that reason, adoption criteria should focus on how tools behave inside day-to-day engineering workflows, such as:

- Fit with real engineering workflows: Tools must align with how code is written, reviewed, tested, and released. Misalignment shifts effort into coordination work (extra reviews, handoffs, and follow-ups) instead of delivery.

- Cognitive load and context switching: Extra prompts, dashboards, or manual checks slow engineers mid-task. Those interruptions compound across teams and sprints, increasing cycle time and review load.

- Ease of onboarding and rollback: Simple enablement and clean rollback paths reduce resistance. Difficult exits turn pilots into long-term operational risk.

Governance and Risk Criteria

Good governance determines whether AI adoption remains under control as usage scales. Here are the risk checks that guide long-term decisions:

- Security and IP protection: Control over data flow and access boundaries limits exposure during incidents.

- Auditability and policy enforcement: Enforcement matters because AI tools are often adopted locally by teams before logging, access controls, and policy checks are consistently applied across the organization. AI governance data reports that 97% of AI-related breach victims lacked proper access controls, highlighting how governance gaps turn localized usage into organizational risk.

- Vendor lock-in risks: When tools become deeply embedded in workflows and data formats, switching later becomes costly and reduces leverage in pricing and contract negotiations.

AI Adoption Metrics: How Leaders Know If AI Is Actually Used

AI adoption is not measured by usage alone. Instead, it is inferred by observing how delivery behavior changes under comparable conditions. Rather than counting prompts or tool activations, effective adoption metrics compare work completed with AI assistance to similar work completed without it. Of course, you need to do it across the same teams, pipelines, and review standards.

These comparisons help leaders determine whether AI affects KPIs like cycle time, review effort, defect rates, or delivery reliability.
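The comparison described above can be sketched as a grouped median: cycle time for AI-assisted pull requests versus non-assisted ones, kept within the same team so review standards stay comparable. The records, team names, and function names below are hypothetical, and the median is just one reasonable summary statistic.

```python
from collections import defaultdict
from statistics import median

# Hypothetical records: (team, ai_assisted, cycle_time_hours)
pull_requests = [
    ("payments", True, 20.0),
    ("payments", True, 26.0),
    ("payments", False, 30.0),
    ("payments", False, 34.0),
    ("search", True, 18.0),
    ("search", False, 16.0),
    ("search", False, 20.0),
]


def median_cycle_time_by_group(prs):
    """Group cycle times by (team, ai_assisted) and return the median of each."""
    groups = defaultdict(list)
    for team, ai_assisted, hours in prs:
        groups[(team, ai_assisted)].append(hours)
    return {key: median(values) for key, values in groups.items()}


def cycle_time_delta(prs):
    """Per team: median with AI minus median without AI (negative means faster)."""
    medians = median_cycle_time_by_group(prs)
    teams = {team for team, _ in medians}
    return {
        team: medians[(team, True)] - medians[(team, False)]
        for team in sorted(teams)
        # Only compare teams that have both kinds of work.
        if (team, True) in medians and (team, False) in medians
    }


print(cycle_time_delta(pull_requests))
```

With the sample data this prints `{'payments': -9.0, 'search': 0.0}`: one team ships AI-assisted work nine hours faster at the median, while the other shows no difference. A real analysis would also need enough pull requests per group for the medians to be meaningful.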

AI Adoption Dimensions

Adoption shows up as a pattern of behavior. The State of AI-assisted Software Development 2025 report describes three tightly linked dimensions that explain whether AI is truly embedded in daily work:

- Reliance: Reliance is high when work depends on AI to progress. For example, pull requests take longer when AI assistance is unavailable, test coverage drops, and refactoring work stalls without that support.

- Trust: Trust is high when engineers accept AI-assisted changes frequently enough that review time remains predictable and rollback rates do not increase after release.

- Reflexive use: Reflexive use is present when AI becomes the default response to a task. Engineers reach for AI without prompts or mandates because it fits naturally into coding, testing, or review workflows.

Together, these signals show whether AI sits inside the workflow or remains optional.

Source: State of AI-assisted Software Development 2025


Output Metrics vs. Adoption Metrics

Output metrics capture activity volume.

Commit counts rise, code diffs grow, and cycle time may appear shorter. However, these signals lose meaning when review queues lengthen, defects surface later in the release cycle, or senior engineers spend more time correcting AI-generated changes. In those cases, higher output reflects more activity, not more effective delivery.

Adoption metrics focus on behavior change inside the delivery pipeline.

Rather than counting usage events, they measure how work actually progresses from branch to merge to release. Feature access indicates availability, while adoption metrics reflect whether AI is relied on, trusted, and consistently embedded within real delivery steps.

Core AI Adoption Metrics for Engineering Teams

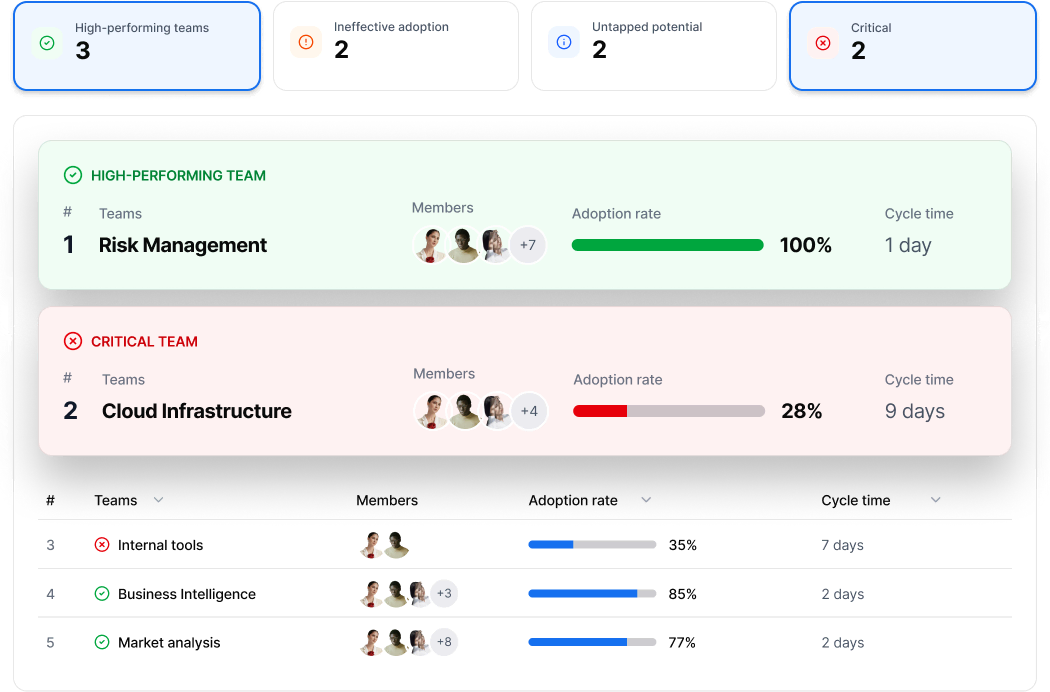

To evaluate adoption in practice, leaders track a small set of workflow-level signals that reflect how AI is used inside real delivery work:

- Usage consistency across teams: Consistency shows how uniformly AI is used across repositories, roles, and services. Large gaps signal uneven standards and rising coordination costs when shared libraries or services cross team boundaries.

- Adoption depth: Depth indicates where AI appears in the delivery workflow. Usage limited to code generation affects development speed differently than usage that extends into test creation, review preparation, or release documentation. Deeper adoption typically shifts review effort and validation expectations earlier in the lifecycle.

- Retention of AI-assisted workflows: Retention measures whether AI use persists beyond initial rollout. Continued use weeks after introduction signals that AI fits existing workflows. Drop-off after early enthusiasm points to friction, trust gaps, or unclear value during reviews.

Taken together, these signals show whether AI is changing how work moves through development and release or simply increasing activity without improving delivery.
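One way to turn the three signals above into numbers is sketched below. The input data, the stage names, and the specific formulas (standard deviation for consistency, stage fraction for depth, latest-versus-baseline ratio for retention) are illustrative assumptions, not a standard definition.

```python
from statistics import pstdev

# Hypothetical inputs; field names and values are illustrative only.
ai_pr_share_by_team = {"payments": 0.60, "search": 0.50, "platform": 0.10}
stages_with_ai = {"code_generation", "test_creation"}
all_stages = [
    "code_generation",
    "test_creation",
    "review_preparation",
    "release_documentation",
]
weekly_active_users = [12, 14, 11, 10, 9, 9]  # weeks 1..6 after rollout


def usage_consistency(shares: dict[str, float]) -> float:
    """Spread of AI-assisted PR share across teams (0 means perfectly uniform)."""
    return round(pstdev(list(shares.values())), 3)


def adoption_depth(used: set[str], stages: list[str]) -> float:
    """Fraction of delivery stages where AI use was observed."""
    return len(used & set(stages)) / len(stages)


def retention(weekly_users: list[int], baseline_weeks: int = 2) -> float:
    """Latest weekly active users relative to the average of the first weeks."""
    baseline = sum(weekly_users[:baseline_weeks]) / baseline_weeks
    return round(weekly_users[-1] / baseline, 2)


print(usage_consistency(ai_pr_share_by_team))
print(adoption_depth(stages_with_ai, all_stages))
print(retention(weekly_active_users))
```

In this sample, the wide spread between teams and the drop in weekly users after the first two weeks would both be prompts to look for friction rather than conclusions on their own.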

How to Track Adoption Metrics of AI Tools

Tracking AI adoption works when signals reflect observable delivery behavior. Beyond that, we advise you to consider:

- Tool usage telemetry vs workflow-level signals: Telemetry shows which assistants run and how often suggestions are generated. Workflow-level signals show whether pull requests move faster, review depth changes, or rework decreases when AI is involved. The latter indicates whether AI is changing delivery behavior or simply increasing activity.

- Measuring adoption without micromanagement: Adoption stays stable when measurement avoids individual scoring. Team-level patterns across repositories, services, and roles give you usable insight without encouraging engineers to optimize for the metrics instead of delivery quality.

- Tracking trends over time instead of snapshots: Short-term spikes usually follow rollout announcements. Durable adoption appears when AI-assisted workflows persist across sprints, releases, and staffing changes, and when usage does not collapse after initial enthusiasm fades.
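The last two points can be combined in a small sketch: aggregate at team level only, and bucket by week so trends are visible instead of snapshots. The record layout and the function name `weekly_ai_share_by_team` are hypothetical; the author field is included only to show that it is deliberately dropped.

```python
from collections import defaultdict
from datetime import date

# Hypothetical merged-PR records: (merge_date, team, author, ai_assisted)
merged_prs = [
    (date(2025, 3, 3), "payments", "dev-a", True),
    (date(2025, 3, 5), "payments", "dev-b", False),
    (date(2025, 3, 10), "payments", "dev-a", True),
    (date(2025, 3, 12), "payments", "dev-b", True),
]


def weekly_ai_share_by_team(prs):
    """Return {(team, iso_year, iso_week): share of AI-assisted PRs}.

    The author field is ignored on purpose so results stay at team level.
    """
    counts = defaultdict(lambda: [0, 0])  # [ai_assisted, total]
    for merged_on, team, _author, ai_assisted in prs:
        iso_year, iso_week, _weekday = merged_on.isocalendar()
        bucket = counts[(team, iso_year, iso_week)]
        bucket[0] += int(ai_assisted)
        bucket[1] += 1
    return {key: ai / total for key, (ai, total) in sorted(counts.items())}


for key, share in weekly_ai_share_by_team(merged_prs).items():
    print(key, f"{share:.0%}")
```

Plotting these weekly shares over several sprints shows whether usage persists after the rollout spike, without producing any per-engineer score.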

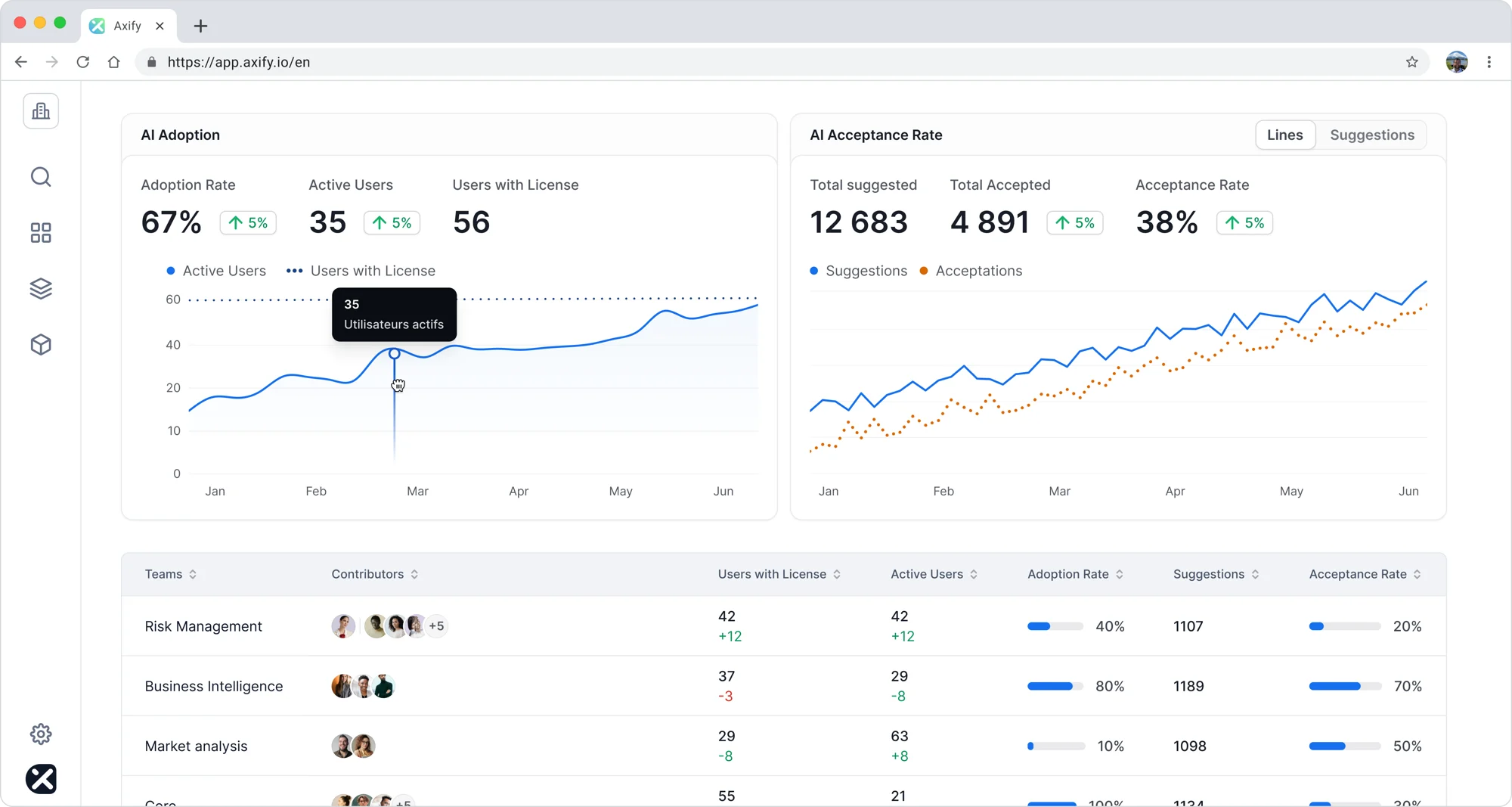

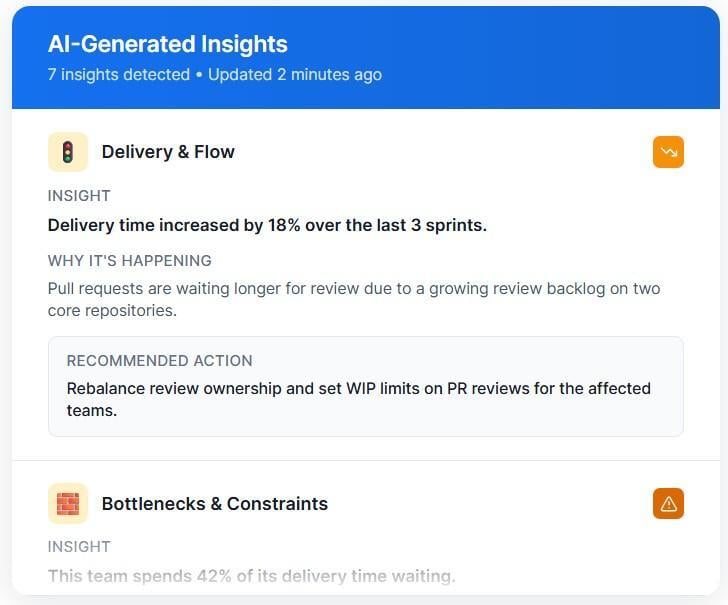

This is where Axify’s AI adoption and impact feature fits directly into your delivery system. Axify connects AI usage data with PR timelines, CI results, throughput, and quality signals. Then, it compares work completed before AI implementation with work completed after AI adoption.

That comparison makes adoption visible through your delivery KPIs. With that view, AI becomes a measurable change inside your engineering workflow.

Common AI Adoption Challenges in Engineering Teams

AI adoption inside engineering teams rarely fails because of tooling alone. More often, problems appear when changes enter live workflows without clear checks on quality, risk, or decision ownership.

These are the challenges you usually face once experimentation turns into daily use.

Risk of Accelerating Process Steps

Delivery pressure frequently pushes teams to shorten steps in PR review, testing, or validation before understanding the downstream effect. Faster code generation can shift review load to senior engineers, increase rework, or raise rollback frequency if guardrails stay unchanged.

Remember: We believe that AI tools amplify weaknesses as well as strengths. In practice, weak reviews, unclear ownership, or thin test coverage become more costly as output increases. Faster generation pushes effort into reviews, fixes, and rollbacks when validation cannot keep pace.

That effect becomes visible when certain delivery KPIs improve without corresponding gains in stability or quality.

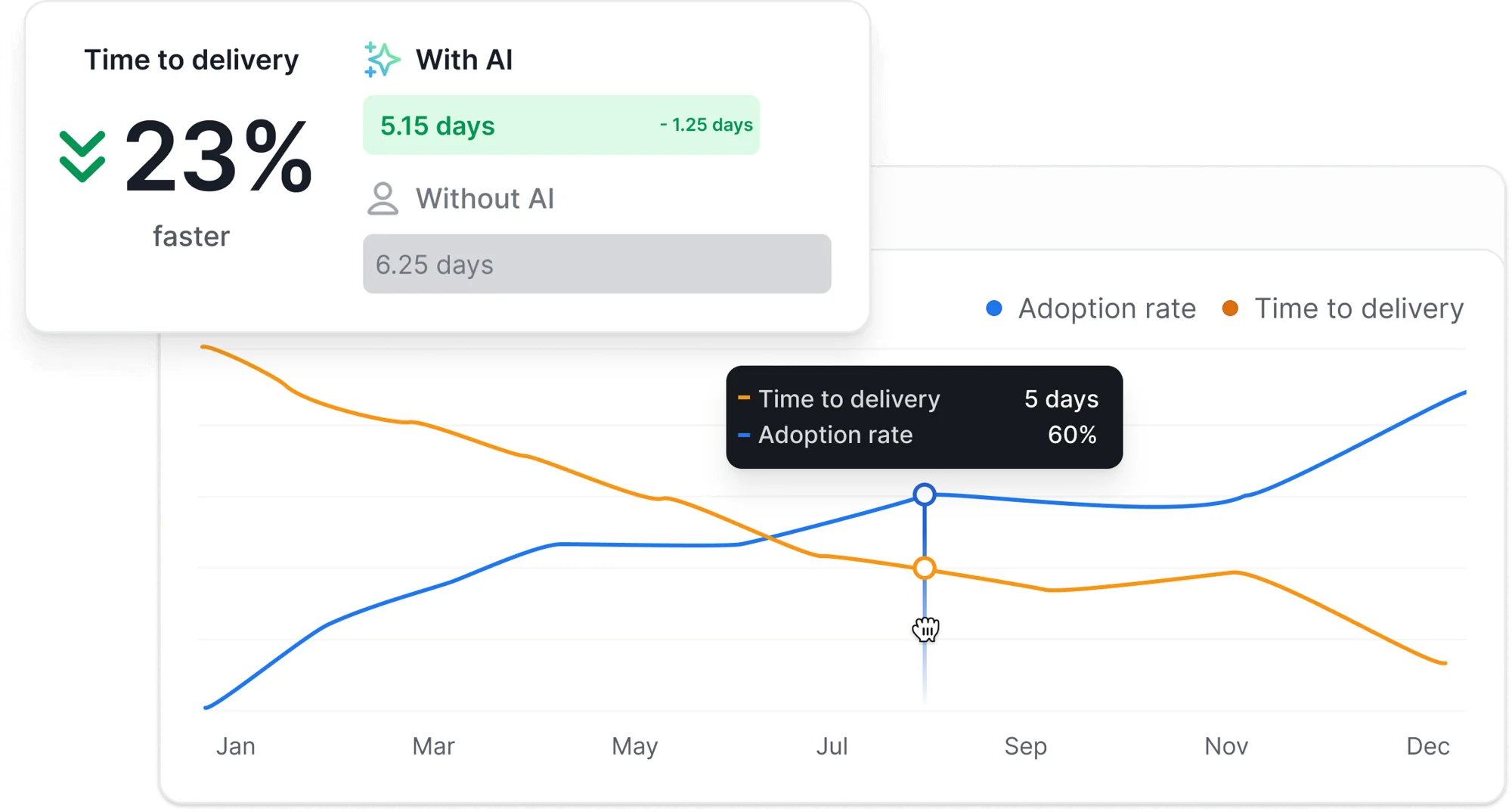

To measure whether acceleration improves or degrades delivery, Axify’s AI Impact feature compares work completed with and without AI under similar conditions. This allows changes in cycle time, rework, or rollback rate to be tied to actual behavior.

Data and Context Limitations

AI performance depends on context. When repository history, dependency structure, or workflow state fall outside the model’s context window, recommendations become incomplete or misleading.

Similarly, without visibility into test coverage gaps, ownership boundaries, or recent architectural changes, AI-generated suggestions lose precision and reliability.

Skills and Trust Gaps

Trust erodes when AI output cannot be explained or verified within normal workflows. As a result, engineers hesitate to rely on suggestions that bypass linting rules, violate patterns, or introduce unclear logic into shared code paths.

Resistance also increases when metrics feel punitive, such as tracking individual usage instead of team-level patterns tied to delivery behavior.

ROI and Value Attribution

Linking AI usage to delivery impact remains difficult without controlled comparisons. License counts or the number of AI suggestions you approve do not explain whether fewer defects ship or whether lead time improves. This gap matters even more when you consider that 42% of organizations already struggle with inadequate financial justification (according to the Ingenuity of Generative AI report).

The impact of AI on software development only becomes clear when you compare similar work with and without AI across PRs, pipelines, and releases. Axify supports this analysis by isolating AI-assisted work and connecting it to delivery KPIs that reflect code quality, stability, and throughput rather than output alone.

A Practical AI Adoption Framework for Engineering Leaders

AI adoption stands the test of time when you make decisions based on clear delivery signals. With that in mind, here is a practical framework you can use to introduce, measure, and scale AI inside real engineering workflows without guessing or overcorrecting.

Step 1: Start with Workflow Friction

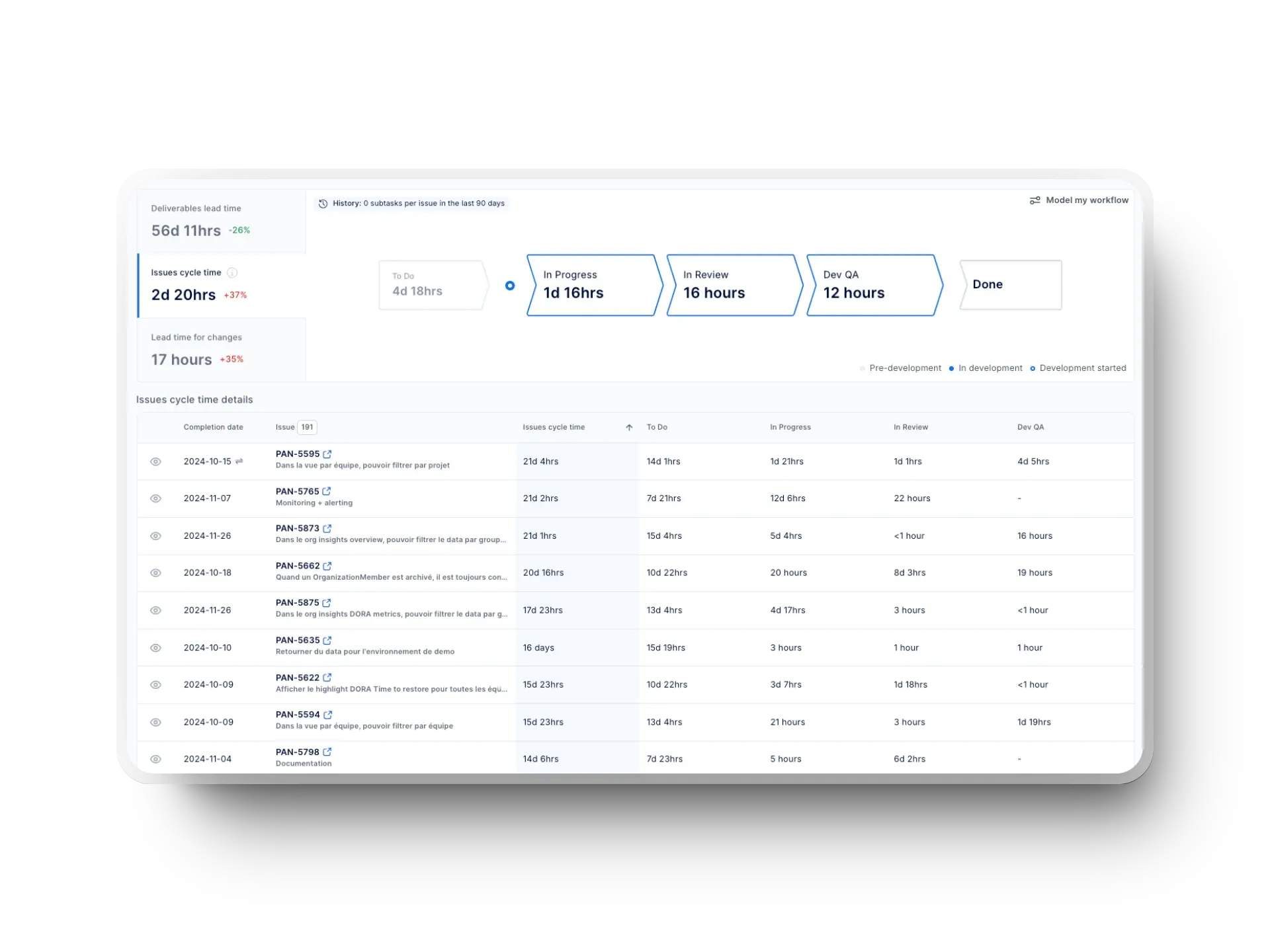

Every adoption effort should begin with where work already slows down. Bottlenecks usually show up in review queues, CI wait time, flaky tests, or unclear ownership during handoffs. Mapping those steps first gives you a baseline for comparison.

When friction is visible, AI has a defined role. Without that baseline, faster code generation typically shifts work downstream and increases rework rather than shortening delivery time.

Axify’s Value Stream Mapping tool can help you spot those bottlenecks faster and see where AI tools make the most sense in your SDLC.

Step 2: Pilot with Clear Adoption Signals

Pilots fail when success remains undefined. Before rollout, adoption needs an operational definition tied to behavior.

That definition should answer questions like whether AI-assisted pull requests move through review faster, whether defect rates change after release, or whether rollback frequency stays stable. Clear signals also set boundaries, such as which repos, teams, or stages are in scope.

With those guardrails in place, experimentation stays controlled instead of spreading unevenly across the organization.
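An operational definition like this can be written down before the pilot starts and checked afterwards. The sketch below assumes three example signals with made-up thresholds; the class names, field names, and values are all hypothetical and would be replaced by whatever your team agrees on.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PilotDefinition:
    """Scope and success thresholds agreed before rollout (values hypothetical)."""
    repos_in_scope: frozenset[str]
    max_review_hours: float        # median review time for AI-assisted PRs
    max_rollback_rate: float       # rollbacks divided by deployments
    max_post_release_defects: int  # defects traced to in-scope changes


@dataclass(frozen=True)
class PilotObservation:
    """What was actually measured during the pilot window."""
    median_review_hours: float
    rollback_rate: float
    post_release_defects: int


def evaluate_pilot(definition: PilotDefinition, observed: PilotObservation) -> dict[str, bool]:
    """Check each observed signal against its pre-agreed threshold."""
    return {
        "review_time_ok": observed.median_review_hours <= definition.max_review_hours,
        "rollback_rate_ok": observed.rollback_rate <= definition.max_rollback_rate,
        "defects_ok": observed.post_release_defects <= definition.max_post_release_defects,
    }


pilot = PilotDefinition(
    repos_in_scope=frozenset({"checkout-service"}),
    max_review_hours=8.0,
    max_rollback_rate=0.05,
    max_post_release_defects=2,
)
observed = PilotObservation(median_review_hours=6.5, rollback_rate=0.08, post_release_defects=1)
print(evaluate_pilot(pilot, observed))
```

Freezing the definition makes it harder to move the goalposts once results come in, which is the point of defining success up front.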

Step 3: Measure Adoption and Impact

Once a pilot starts, pick metrics that capture actual long-term reliance rather than the early usage spikes that curiosity tends to cause.

As such, you will need to evaluate consistency across teams, depth inside the workflow, and durability over time.

- Consistency shows whether AI usage spreads evenly or concentrates in a few teams.

- Depth shows whether AI stops at code generation or extends into testing, review preparation, or documentation tied to releases.

- Durability shows whether usage holds after the initial rollout window closes.

Equally important, delivery impact should be measured alongside adoption. Comparing work completed with AI against comparable work completed without it inside the same pipelines shows whether cycle time, review effort, or rework actually change.

Pro tip: Check out our guide on the 17 best AI coding assistants to learn which tools can help you with this matter.

Step 4: Translate Metrics into Decisions

Visibility into metrics is only the first step; acting on them correctly matters just as much. When numbers move, the next question should always be why, and what opportunities that brings.

In other words, you must connect changes in delivery KPIs to specific initiatives, tools, or process shifts. For example:

- A rise in cycle time may trace back to larger diffs entering review.

- Increased rework may be linked to shallow test generation.

- Stable throughput with a higher review load may signal uneven adoption across teams.
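The three examples above can be expressed as simple rules that turn KPI movements into hypotheses worth investigating. This is a minimal sketch: the metric names, the 10% and 5% thresholds, and the function `diagnose` are assumptions for illustration, and the output is a list of leads to check, not a root-cause finding.

```python
def diagnose(changes: dict[str, float]) -> list[str]:
    """Map relative KPI changes (0.15 means +15%) to hypotheses to investigate.

    Thresholds and metric names are illustrative assumptions.
    """
    hypotheses = []
    if changes.get("cycle_time", 0) > 0.10 and changes.get("diff_size", 0) > 0.10:
        hypotheses.append("Larger diffs may be slowing review")
    if changes.get("rework_rate", 0) > 0.10:
        hypotheses.append("Generated tests may be too shallow")
    if abs(changes.get("throughput", 0)) <= 0.05 and changes.get("review_load", 0) > 0.10:
        hypotheses.append("Adoption may be uneven across teams")
    return hypotheses


# Hypothetical quarter-over-quarter changes for one team.
observed_changes = {
    "cycle_time": 0.18,
    "diff_size": 0.25,
    "rework_rate": 0.02,
    "throughput": 0.01,
    "review_load": 0.20,
}
for hypothesis in diagnose(observed_changes):
    print(hypothesis)
```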

Axify’s AI-powered insights feature supports this step by analyzing delivery trends continuously and surfacing root causes. The system shows where time accumulates across code, review, test, and deployment steps, then links those shifts to changes in behavior.

Recommendations focus on what to adjust next, such as tightening review policies, limiting AI usage to certain stages, or standardizing tooling across repos. This approach helps you make better decisions based on metrics; other software engineering tools don’t offer you this option.

Step 5: Scale What Works, Remove What Doesn’t

Scaling should follow evidence, not subjective factors like enthusiasm or industry benchmarks. When adoption signals remain consistent and delivery impact holds across multiple cycles, expansion becomes a low-risk step.

At the same time, tools that fail to change behavior should be removed quickly. Treating AI tools as experiments rather than mandates keeps trust intact and prevents long-term drag on delivery systems.

With this framework, AI adoption stays grounded in workflow reality, delivery signals guide decisions, and scaling happens only after measurable proof.

Future of AI Adoption in Engineering Teams

AI adoption inside engineering teams is entering a more constrained phase. Early expansion focused on access and experimentation, while the next phase centers on control, comparability, and long-term reliability. With that shift in mind, several patterns are already taking shape.

Shift From Tool Proliferation to Consolidation

First, adoption is moving from tool proliferation to consolidation. Engineering organizations now face overlapping assistants, fragmented policies, and inconsistent review standards. As a result, consolidation has become an operational priority.

This shift shows up clearly in broader IT investment behavior. According to a Quickbase survey, 56% of respondents report spending more time and resources on IT consolidation. At the same time, 73% expect software investment to keep growing.

This means consolidation does not signal less tooling. Instead, it reflects a tighter selection and fewer parallel systems competing inside the same workflow.

Governance Moving Closer to the Workflow and AI as Infrastructure

Governance is also moving closer to the workflow itself. Policies defined outside the delivery path tend to arrive late and get bypassed under pressure. When guardrails sit directly inside PR checks, CI validation, and access controls, compliance becomes embedded in your current work.
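To make the idea of a guardrail inside a PR check concrete, here is a minimal sketch of a policy function that a CI step could call. The `ai-assisted` label, the `tests/` path convention, and the two rules themselves are hypothetical choices for this example; a real check would read the same information from your review system.

```python
from dataclasses import dataclass, field


@dataclass
class PullRequest:
    """Hypothetical PR metadata; a real check would load this from the review system."""
    labels: set[str] = field(default_factory=set)
    changed_files: list[str] = field(default_factory=list)
    human_approvals: int = 0


def guardrail_violations(pr: PullRequest) -> list[str]:
    """Return policy violations for AI-assisted PRs; an empty list means pass."""
    if "ai-assisted" not in pr.labels:
        return []  # this illustrative policy only covers labeled AI-assisted changes
    violations = []
    if pr.human_approvals < 1:
        violations.append("needs at least one human approval")
    if not any(path.startswith("tests/") for path in pr.changed_files):
        violations.append("no test changes accompany the AI-assisted diff")
    return violations


pr = PullRequest(labels={"ai-assisted"}, changed_files=["src/billing.py"], human_approvals=0)
for violation in guardrail_violations(pr):
    print(violation)
```

Because the check runs where the merge decision is made, it is harder to bypass under deadline pressure than a policy document that lives outside the delivery path.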

“Embedding governance into the workflow from the start rather than adding it afterward allows teams to innovate confidently.”

Over time, this shift pushes AI toward infrastructure status. Instead of being treated as an optional productivity layer, AI becomes something teams depend on in predictable ways. That dependence increases scrutiny around failure modes, rollback paths, and auditability, especially as AI-assisted changes reach production more frequently.

From Axify’s perspective, we see this transition as a necessary correction.

As we said above, we see AI as an amplifier rather than a fix. Let’s remember that AI increases throughput where collaboration, review discipline, and architecture already hold up. At the same time, it exposes weak ownership models, shallow testing, and overloaded reviewers faster than before.

How AI Changes Delivery Outcomes Over Time

AI can make certain work stages faster, but that localized speed does not automatically improve global delivery speed. Code creation usually accelerates first, while code editing, review, and validation typically take longer as volume increases. This pattern shows up clearly in our guide about the impact of AI on software development, where faster generation shifts effort downstream instead of removing it.

That shift contributes to what many teams experience as a productivity illusion. Activity increases, but delivery efficiency does not improve at the same rate.

Our article on AI coding assistants shows how these tools shift where time is spent across the workflow. The analysis explains why time saved during code creation often reappears in review and rework.

AI also acts as a double-edged tool inside engineering systems. This tension is described directly in our guide on AI and developer productivity, which notes:

“One value proposition for adopting AI is that it will help people spend more time doing valuable work. That is, by automating the manual, repetitive, tedious tasks, we expect respondents will be free to use their time on “something better.” However, our data suggest that increased AI adoption may have the opposite effect—reducing reported time spent doing valuable work—while time spent on tedious work appears to be unaffected.”

These outcomes explain why training becomes a differentiator over time. Engineers who learn how to guide AI output, validate suggestions, and apply results within existing review and testing standards continue to improve.

Others plateau once initial speed gains taper off.

For that reason, Axify encourages upskilling tied to review quality, test coverage, and ownership discipline rather than prompt volume.

Finally, we encourage tracking AI adoption metrics and running controlled performance comparisons before and after AI implementation. Comparing work completed with AI against comparable work completed without it keeps decisions grounded in delivery behavior.

That approach supports deliberate adoption that remains reversible and aligned with how software actually ships.

Enable Sustainable AI Adoption with Engineering Visibility

AI adoption breaks down when changes in delivery behavior stay invisible to you. Without clear signals, activity looks high while review load, rework, or pipeline delays quietly increase. Because of that gap, visibility into real workflows becomes the control point.

With the right instrumentation, workflow data shows where AI shortens PR cycles, where it adds review overhead, and where it gets ignored after rollout. Those signals appear inside commits, pull requests, test runs, and deployments rather than in license counts.

Axify supports this approach by showing how work actually flows before and after AI tools enter the stack. Impact is measured across assistants like GitHub Copilot, Cursor, Claude Code, and others, with breakdowns by tool, team, and line of business.

For example, if you are testing Amazon cursor AI tool adoption, Axify lets you compare delivery behavior before and after rollout, without relying on anecdotes or surveys.

If sustained adoption matters to you, then contact Axify to see delivery behavior clearly.

FAQs

How long does AI adoption typically take in engineering teams?

AI adoption typically takes 3-9 months before it shows up in delivery behavior you can rely on. Early usage appears within weeks, but consistent use inside PRs, reviews, and pipelines takes longer. Sustained adoption only settles once teams trust the output and stop treating tools as optional.

What causes AI adoption to stall after pilot programs?

AI adoption stalls when pilots measure activity instead of delivery behavior. Usage spikes during trials, but fades once review load increases or quality issues surface. Without clear success criteria tied to cycle time, rework, or defects, pilots never turn into standards.

How do engineering leaders prevent AI tool sprawl?

AI tool sprawl is prevented by limiting tools to approved workflows with shared ownership and exit paths. Central visibility into where tools are used across repos and teams keeps comparisons grounded. Without that visibility, teams drift into parallel setups that cannot be evaluated side by side.

Should AI adoption be driven top-down or bottom-up in engineering organizations?

AI adoption works best when direction is set top-down and usage is shaped bottom-up. Leadership defines guardrails, metrics, and rollback rules, while teams validate fit inside real workflows. Either approach alone leads to resistance or fragmentation.

What are the early warning signs of failed AI adoption?

Early warning signs include uneven usage across teams, longer PR reviews, and rising rework despite faster code creation. Another signal appears when senior engineers spend more time correcting output than before. Those patterns indicate activity without behavior change.
