AI is changing how work moves through DevOps, but tools alone do not shift delivery outcomes. What matters is where these tools intervene in your DevOps pipelines, and whether those interventions shorten review queues, reduce deployment delays, or stabilize incident recovery.

This guide focuses on the top AI tools for DevOps in 2026 and the metrics that reveal whether they actually improve performance. You'll find concrete use cases, measurable impact, and validation methods.

By the end, you'll know which tool to use next and where to apply it best.

Let’s begin.

What Are AI Tools for DevOps?

AI tools for DevOps are systems that apply artificial intelligence and machine learning at specific points in your software delivery lifecycle to influence how work moves. Their purpose is narrow and practical. They assist with code-level decisions, reduce manual handoffs, and expose signals you can validate through delivery and stability metrics.

According to the 2025 DORA Report, 90% of software professionals use AI tools at work, yet only 8% report relying on them a great deal. That gap is a reason to separate usage from impact before you scale.

You can check out this short video to learn how AI impacts the future of DevOps:

Next, let's see the practical use cases.

AI Tools for DevOps Use Cases

AI helps teams only when it changes how work flows through the pipeline. In practice, that means specific interventions at known friction points. These are the core use cases where AI DevOps tools tend to show measurable effects, and where validation is possible.

Remember: We believe that AI typically amplifies existing strengths and weaknesses rather than correcting them by itself. As such, the benefits below tend to appear in mature teams with effective workflows.

Code Assistance & Review

Code assistance and review sit early in the software development lifecycle, where delays compound quickly. Ideally, AI at this stage reduces waiting time and limits avoidable rework before changes move downstream. These are the main applications you see in practice:

- Code generation: AI supports routine coding and scaffolding tasks tied to well-defined patterns. When used carefully, AI coding assistants may shorten the time between intent and first working change. But we believe that validation still depends on review speed and defect rates rather than output volume.

- Automated reviews, risk scoring: AI scans pull requests to flag patterns linked to higher failure risk, such as oversized diffs or missing tests. The 2025 DORA Report shows that 56% of users apply AI to code reviews. That usage only translates into value when review wait time drops without raising rework costs.

- AI-enabled refactoring: AI proposes structural changes that reduce long-term maintenance risk. The signal to watch is whether cycle time stabilizes after refactoring. We don’t advise focusing on throughput metrics like the number of merged changes, since they don’t reflect long-term maintainability or future delivery speed.
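The risk-scoring idea above can be illustrated with a plain heuristic before any model is involved. This is a minimal Python sketch, not how any specific tool scores pull requests: the `PullRequest` fields, the 400-line threshold, the test-file naming convention, and the weights are all illustrative assumptions.

```python
from dataclasses import dataclass

# Hypothetical shape of a pull request; a real tool would read this from the Git host.
@dataclass
class PullRequest:
    lines_changed: int
    files_changed: list[str]

LARGE_DIFF_LINES = 400  # assumed threshold for an "oversized" diff

def is_test_file(path: str) -> bool:
    # Naive convention-based check; adjust to your repository layout.
    name = path.rsplit("/", 1)[-1]
    return name.startswith("test_") or name.endswith("_test.py") or "/tests/" in f"/{path}"

def risk_score(pr: PullRequest) -> tuple[int, list[str]]:
    """Return a 0-100 score plus the reasons that contributed to it."""
    score, reasons = 0, []
    if pr.lines_changed > LARGE_DIFF_LINES:
        score += 50
        reasons.append("oversized diff")
    touches_code = any(not is_test_file(f) for f in pr.files_changed)
    touches_tests = any(is_test_file(f) for f in pr.files_changed)
    if touches_code and not touches_tests:
        score += 50
        reasons.append("code changed without tests")
    return score, reasons
```

For example, `risk_score(PullRequest(650, ["app/billing.py"]))` flags both reasons. The score only ranks where review attention should go; whether it helps still shows up in review wait time and rework, not in the score itself.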

Automation & Runbook Execution

You can automate work that follows the same path repeatedly. AI may reduce manual handling while keeping decision boundaries clear. These are the common patterns:

- Generating runbooks: AI can draft response steps from past incidents and documented fixes. The payoff appears when recovery steps become consistent and faster to execute.

- Auto-resolving repetitive tasks: AI agents handle predictable actions such as restarts or configuration updates defined through infrastructure as code. The trend is accelerating: 64% of organizations apply AI agents to automate repetitive business workflows. The real check is whether human escalation drops without increasing failure rates.

- Intelligent routing: AI routes work or alerts to the right owner based on context, which reduces handoff delays during incidents.
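Context-based routing usually starts from explicit ownership data. The sketch below is a rule-based stand-in for the routing described above, not a machine-learning model: the `OWNERS` map, the team names, the fallback queue, and the idea of passing in past resolvers of similar incidents are hypothetical.

```python
from collections import Counter

# Hypothetical ownership map: service name -> owning team.
OWNERS = {
    "checkout-api": "payments-team",
    "search": "discovery-team",
}
FALLBACK = "platform-oncall"  # assumed default queue when no owner is known

def route(alert: dict, past_resolvers: list[str]) -> str:
    """Route by declared ownership first, then by who most often resolved similar incidents."""
    service = alert.get("service")
    if service in OWNERS:
        return OWNERS[service]
    if past_resolvers:
        # Historical context: the team that resolved similar incidents most often.
        return Counter(past_resolvers).most_common(1)[0][0]
    return FALLBACK
```

An AI-based router would replace the history lookup with a learned model, but the check is the same: handoff delays during incidents should drop.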

Monitoring, Alerts & Observability

Monitoring is about signal quality and not volume. AI focuses on narrowing attention to what affects delivery and stability. You can use:

- AI for anomaly detection: It compares live behavior against historical observability data to surface deviations tied to real impact.

- Alert fatigue reduction: Grouping related signals helps limit unnecessary interruptions during on-call rotations.

- Predictive incident prevention: Patterns that precede incidents are flagged early. This shifts effort from reaction to preparation in incident management.
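Comparing live behavior against historical data can be approximated with a rolling z-score. This is a minimal statistical sketch of anomaly detection, not the model any particular vendor uses; the window size and the 3-standard-deviation threshold are assumptions you would tune.

```python
from statistics import mean, stdev

def anomalies(series: list[float], window: int = 10, threshold: float = 3.0) -> list[int]:
    """Return indexes whose value deviates more than `threshold` standard
    deviations from the mean of the preceding `window` points."""
    flagged = []
    for i in range(window, len(series)):
        baseline = series[i - window:i]
        mu, sigma = mean(baseline), stdev(baseline)
        if sigma == 0:
            # Flat baseline: any change at all counts as a deviation.
            if series[i] != mu:
                flagged.append(i)
        elif abs(series[i] - mu) / sigma > threshold:
            flagged.append(i)
    return flagged
```

With latency samples such as `[100, 102, 98, 101, 99, 100, 103, 97, 100, 101, 250, 100]`, only the spike at index 10 is flagged. Production systems also account for seasonality and trends, which this sketch ignores.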

CI/CD Optimization

CI/CD optimization targets the time lost while changes wait to move forward. Here, AI is applied sparingly to avoid masking bottlenecks. Typical applications include:

- Faster builds: AI may prioritize jobs and cache dependencies to reduce queue time during continuous integration.

- Intelligent pipeline tuning: AI can adjust pipeline paths based on change type. This can help critical fixes reach production sooner.
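Change-type-aware pipeline paths can be expressed as a small decision function. The sketch below uses path rules instead of a trained model; the stage names, the `hotfix/` branch prefix, and the docs-only rule are hypothetical conventions, not features of a specific CI product.

```python
FULL = ["lint", "unit", "integration", "e2e", "deploy"]

def plan_pipeline(branch: str, changed_files: list[str]) -> list[str]:
    """Choose pipeline stages from the kind of change being made."""
    if changed_files and all(f.endswith(".md") or f.startswith("docs/") for f in changed_files):
        # Documentation-only change: nothing to build or deploy.
        return ["lint"]
    if branch.startswith("hotfix/"):
        # Critical fix: keep unit and integration checks, skip the slow e2e suite
        # (assumed to run after deployment instead).
        return ["lint", "unit", "integration", "deploy"]
    return list(FULL)
```

An AI-driven version would learn these paths from history, which is exactly why the skipped stages need monitoring: a shortcut that hides a slow stage is a masked bottleneck, not an optimization.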

With that context, let's go over the top 10 AI tools for DevOps.

10 Best AI Tools for DevOps

The best AI tools for DevOps include Axify, GitHub Copilot, Tabnine, Snyk Code, and others that affect delivery flow and risk. Each tool targets a different constraint, from AI-powered IDE support to system-level engineering performance tracking.

These are the tools that you should evaluate today.

1. Axify: Best for Measuring the Real Impact of AI in DevOps

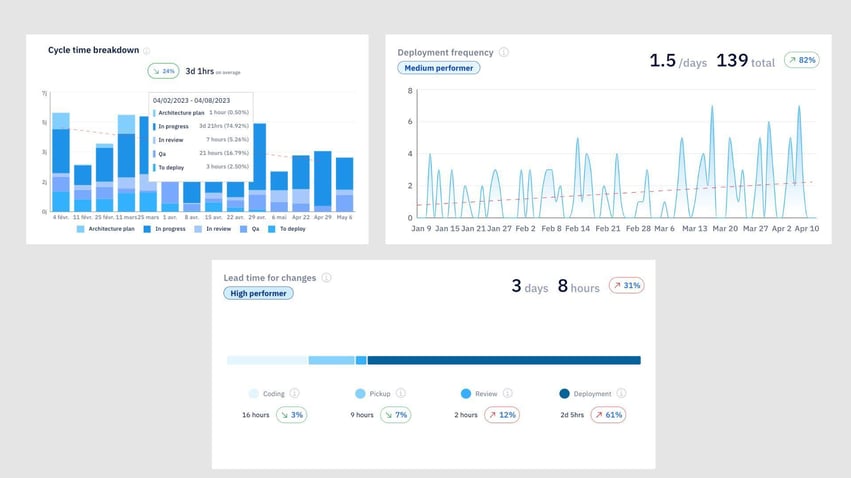

Axify gives you a way to evaluate how AI usage impacts actual delivery outcomes. Instead of assuming AI always helps, you measure your performance before and after AI implementation to see its actual effects.

Axify can compare delivery time, cycle time, review speed, DORA metrics, and adoption signals before and after AI, so changes stay visible across the full workflow.

This matters once tools like AI coding agents enter daily work, because they can shift bottlenecks rather than remove them.
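The core before-and-after idea is easy to reason about in code. This Python sketch is not Axify's implementation or API; it assumes you have completed work items with start and finish timestamps and a single, hypothetical AI rollout date, and it compares median cycle time on either side of that date.

```python
from datetime import datetime
from statistics import median

def cycle_days(started: datetime, finished: datetime) -> float:
    return (finished - started).total_seconds() / 86400

def before_after(items: list[tuple[datetime, datetime]], rollout: datetime) -> dict[str, float]:
    """Median cycle time (days) for work started before vs. after the AI rollout.
    Both periods must contain at least one item, or median() raises an error."""
    before = [cycle_days(s, f) for s, f in items if s < rollout]
    after = [cycle_days(s, f) for s, f in items if s >= rollout]
    return {"before": median(before), "after": median(after)}
```

A lower "after" median is only a first signal. Review speed, DORA metrics, and per-stage durations show whether the bottleneck moved somewhere else.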

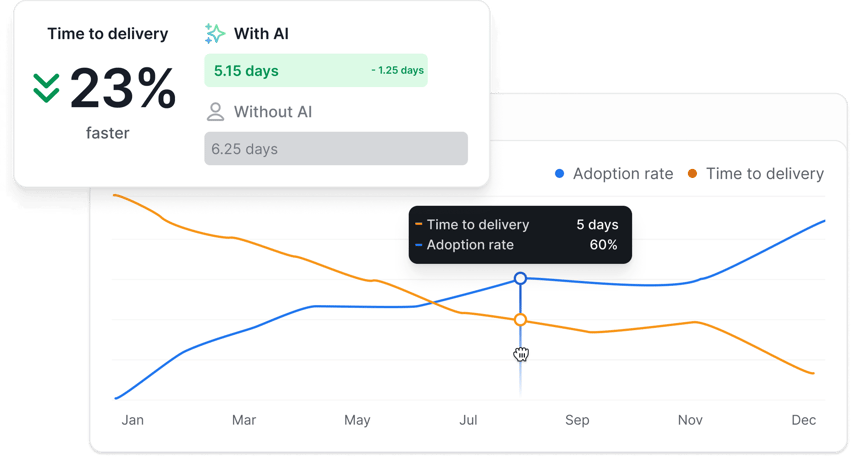

Axify’s AI Performance Comparison feature shows you exactly how your delivery changes as AI adoption rises. Here's how that looks:

In addition, Axify’s Value Stream Mapping tool identifies bottlenecks that AI can potentially address, as well as the SDLC phases where AI implementation makes the most sense.

We encourage all our clients to start by understanding their current performance levels, the goals they want to reach, and the bottlenecks that hinder those goals.

For example, we worked with two teams at the Development Bank of Canada, who used Axify to diagnose where work stalled. As a result, delivery became up to 2X faster, pre-development time dropped by 74%, and QA time fell by 81%. Also, capacity increased by 24%, which translated into roughly $700,000 in annual productivity gains within three months.

Those outcomes tie directly to continuous inspection and data standardization. Use our metrics, AI Performance Comparison, and VSM to make scaling decisions defensible from both engineering and cost-optimization perspectives.

Pros:

- Clear before-and-after views of AI impact on delivery and flow.

- Broad coverage of DORA and flow metrics in one place.

- Direct linkage between AI adoption and measurable outcomes.

- Easy-to-use Value Stream Mapping tool to identify bottlenecks and opportunities.

Cons:

- Focused on measurement rather than automation.

Pricing: Free option available. Paid plans are now modular, with each module priced at $19 per month. The bundle package is $32 per contributor per month.

2. GitHub Copilot: Best for AI Pair Programming

GitHub Copilot works as an AI assistant inside your IDE, where it suggests code based on the files and context already open. The tool focuses on accelerating routine coding and development tasks, such as scaffolding functions, writing configuration snippets, or drafting test code.

In practice, it affects how quickly code changes move from idea to first implementation rather than how they perform after review or release. That distinction shows up in large enterprise usage. Accenture reports that many thousands of developers rely on Copilot, with most saying coding feels more engaging and a large share using it daily.

That level of adoption signals habit formation, but it does not explain whether downstream review time, defect rates, or rework shift as a result.

Pros:

- Inline code suggestions reduce time spent on repetitive edits.

- Tight IDE integration keeps work inside the editor.

- Natural language prompts support faster drafting.

Cons:

- Suggested code still requires full review for correctness.

- Output quality varies based on context and prompt clarity.

- No visibility into delivery or stability outcomes.

Pricing: A free option is available, but the pro plan starts at $10 per month.

3. Tabnine: Best for On-Premises AI Coding

Tabnine focuses on AI-assisted coding in environments where code must stay inside your infrastructure. It runs as an AI code assistant inside common IDEs and supports on-premises or private deployments, which matters when data control affects software development decisions.

The tool centers on code completion and suggestion quality. That scope makes it relevant when policy or compliance limits external services, but it leaves validation of downstream impact outside the tool.

Pros:

- On-premises deployment supports strict data control needs.

- Model training on internal repositories reflects team conventions.

- IDE coverage keeps usage consistent across teams.

Cons:

- Suggestions vary in quality without ongoing tuning.

- Setup adds operational effort for self-hosted use.

- No visibility into delivery or release effects.

Pricing: It starts at about $59 per user per month (paid annually).

4. Snyk Code: Best for AI-Accelerated Security Scanning

Snyk Code provides static analysis that runs early in the software lifecycle, where security gaps are cheaper to address. It applies machine learning to scan source code and suggest fixes inside developer workflows to keep checks close to where changes are made.

In one case study, Flo Health integrated Snyk across a large set of repositories and containers. This shifted remediation from long backlogs to near-immediate action and increased the share of commits passing security scans without delaying software releases.
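Keeping checks close to where changes are made usually means a CI gate on scan results. The sketch below assumes the findings were exported in the SARIF 2.1.0 format (Snyk's CLI can emit SARIF; check its documentation for the exact flags) and lists the findings that should block a merge. Blocking only on `error` level is an assumed policy, not a Snyk default.

```python
import json

def blocking_findings(sarif_text: str, blocking_levels=("error",)) -> list[str]:
    """Return rule IDs of SARIF results whose level should block the merge."""
    report = json.loads(sarif_text)
    found = []
    for run in report.get("runs", []):
        for result in run.get("results", []):
            # SARIF treats a result with no explicit level as "warning".
            if result.get("level", "warning") in blocking_levels:
                found.append(result.get("ruleId", "unknown-rule"))
    return found
```

In a pipeline step, exiting non-zero when `blocking_findings(...)` is non-empty fails the build. Whether it delays releases is exactly what the share of commits passing scans should reveal.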

Pros:

- Static analysis runs close to commit time.

- Fix suggestions are attached to detected issues.

- Integrates with common CI paths and editors.

Cons:

- Scan results can require tuning to reduce noise.

- Multiple Snyk modules may overlap in findings.

- The Enterprise plan is very expensive.

Pricing: A free option is available, but the team plan starts at $25 per developer per month.

5. PagerDuty AIOps: Best for Alert Correlation & Incident Routing

PagerDuty AIOps sits in the incident response layer, where alert volume slows decisions and increases handoffs. Its system groups related signals, suppresses duplicates, and routes incidents based on prior context to reduce manual triage.

The first outcome to evaluate is whether responders focus on the right work without real issues being hidden. The second is whether AI-driven insights shorten time to action rather than merely reshuffling alerts.

IAG Loyalty applied PagerDuty AIOps to reduce alert noise across teams. The change surfaced context from past incidents, sped up resolution, and reduced time spent on manual sorting. This supported a measurable reduction of manual work during on-call periods.
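Grouping related signals and suppressing duplicates can be illustrated with time-window correlation. This is a simplified sketch, not PagerDuty's algorithm: it assumes each alert carries a `service` field and a `ts` timestamp in seconds, and it folds alerts for the same service into one incident when they arrive within five minutes of the group's previous alert.

```python
def group_alerts(alerts: list[dict], window_s: int = 300) -> list[list[dict]]:
    """Merge alerts for the same service that arrive within `window_s` seconds
    of the previous alert in that service's current incident."""
    incidents: list[list[dict]] = []
    open_incident: dict[str, list[dict]] = {}  # service -> its current incident
    for alert in sorted(alerts, key=lambda a: a["ts"]):
        current = open_incident.get(alert["service"])
        if current and alert["ts"] - current[-1]["ts"] <= window_s:
            current.append(alert)  # correlated: fold into the existing incident
        else:
            current = [alert]
            incidents.append(current)
            open_incident[alert["service"]] = current
    return incidents
```

The window size is the tuning knob behind the "over-grouping" risk listed below: too wide and unrelated failures merge, too narrow and duplicates keep paging.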

Pros:

- Alert correlation groups related events into a single incident.

- Routing uses historical context to assign ownership.

- Noise suppression reduces repetitive paging.

Cons:

- Add-on pricing scales with event volume.

- Initial tuning is required to avoid over-grouping.

- Value depends on consistent ingestion across tools.

Pricing: The AIOps add-on starts around $799 per month.

6. Datadog AIOps: Best for Predictive Monitoring

Datadog AIOps applies machine learning to telemetry to surface anomalies before they escalate. The focus stays on patterns across metrics, traces, and logs, with correlations that point to likely sources of failure. This setup matters most when early signals inform resource scaling decisions or expose cloud-spend risk, rather than producing isolated alerts.

In practice, Compass expanded its use of Datadog monitoring to shorten recovery from severe incidents. Resolution shifted from hours to minutes, which changed how teams staffed on-call work and prioritized fixes.
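Linking a symptom to likely causes often starts with ranking other metrics by how closely they move with it. This sketch uses plain Pearson correlation over aligned samples; it illustrates the idea rather than Datadog's correlation engine, and the metric names are hypothetical. Correlation only suggests where to look; it does not prove causation.

```python
from math import sqrt

def pearson(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation of two equally long series (0.0 if either is constant)."""
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sqrt(sum((x - mx) ** 2 for x in xs))
    sy = sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy) if sx and sy else 0.0

def likely_causes(symptom: list[float], candidates: dict[str, list[float]]) -> list[str]:
    """Rank candidate metrics by absolute correlation with the symptom."""
    return sorted(candidates, key=lambda name: abs(pearson(symptom, candidates[name])), reverse=True)
```

For instance, a latency series that rises with database connections ranks `db.connections` above a flat CPU metric. The "manual follow-up" listed below is the step that confirms or rejects that lead.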

Pros:

- Anomaly detection adapts to changing baselines.

- Correlation links symptoms to likely causes.

- Signals appear early enough to act.

Cons:

- Alerts can still require validation in dynamic systems.

- Costs rise with higher telemetry volume.

- Explanations may need manual follow-up.

Pricing: A free option is available, but infrastructure monitoring starts at about $15 per host per month.

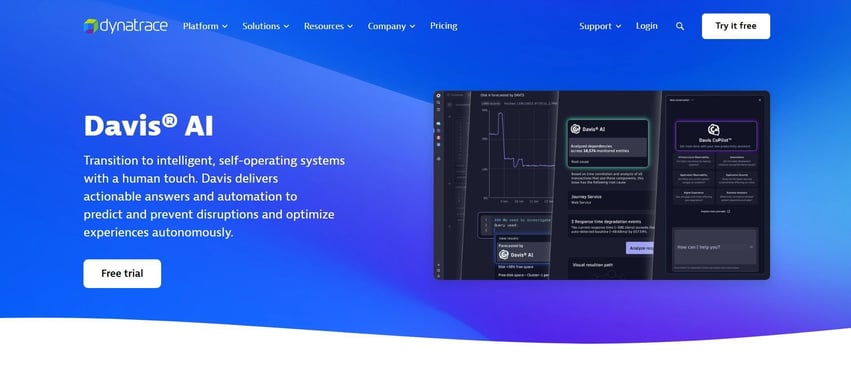

7. Dynatrace Davis AI: Best for Automated Root Cause Analysis

Dynatrace Davis AI identifies root causes across complex systems without manual correlation. The platform analyzes dependencies across services and infrastructure to open a single problem record with causal context.

This approach affects how incidents are handled during release orchestration and how teams decide what to fix first inside AI-powered pipelines.
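Dependency-aware root cause analysis can be illustrated with a small graph check. This is a deliberately simplified sketch, not how Davis AI works internally: given a hypothetical map of which services each service calls and a set of unhealthy services, it reports the unhealthy services whose own dependencies are all healthy, since those are the likeliest origin of a cascade.

```python
def root_causes(depends_on: dict[str, list[str]], unhealthy: set[str]) -> list[str]:
    """Unhealthy services with no unhealthy dependency are candidate root causes;
    the others are treated as symptoms propagating to their callers."""
    return sorted(
        svc for svc in unhealthy
        if not any(dep in unhealthy for dep in depends_on.get(svc, []))
    )
```

If `frontend` calls `checkout`, which calls `payments-db`, and all three are unhealthy, only `payments-db` is reported. Real topologies include cycles and missing data, so treat the result as a starting point for investigation.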

BMO adopted Dynatrace to support a digital-first operating model. Teams reduced the time spent finding root causes, lowered manual log analysis effort, and redirected time from ad-hoc problem remediation to planned work aligned with Agile practices.

Pros:

- Automated causal analysis groups related symptoms.

- Business impact signals help prioritize incidents.

- Full-stack context reduces manual correlation.

Cons:

- Platform depth creates a learning curve.

- Licensing and data units add cost complexity.

- Agent deployment increases operational footprint.

Pricing: Infrastructure monitoring is $29 per host per month.

8. Harness AI: Best for AI-Enhanced CI/CD Pipelines

Harness AI focuses on how work moves through CI/CD once code is ready to be validated and released. The platform applies machine learning to test selection and deployment verification, so pipeline decisions depend on the change context rather than static rules.

That approach affects testing prep and how release risk is evaluated before changes reach users. The scope stays inside the Harness platform, which means AI acts on pipeline data already under its control.
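Deployment verification generally compares the new version's runtime signals against the current baseline before promotion. The sketch below shows that check as a simple error-rate comparison; it is not Harness's verification logic, and the 1.5x tolerance, the 0.1% floor, and the 500-request minimum are assumptions.

```python
def verify_canary(baseline_errors: int, baseline_total: int,
                  canary_errors: int, canary_total: int,
                  max_ratio: float = 1.5, min_requests: int = 500) -> str:
    """Return 'promote', 'rollback', or 'wait' for a canary deployment.
    baseline_total is assumed to be greater than zero."""
    if canary_total < min_requests:
        return "wait"  # not enough traffic yet to judge
    baseline_rate = baseline_errors / baseline_total
    canary_rate = canary_errors / canary_total
    # Small absolute floor so a near-zero baseline doesn't make any error fatal.
    allowed = max(baseline_rate * max_ratio, 0.001)
    return "promote" if canary_rate <= allowed else "rollback"
```

An ML-based verifier weighs many signals at once, but the decision it feeds is the same three-way choice shown here.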

At Citi, Harness was used to restructure delivery across a large engineering organization. Release execution shifted from long, staged rollouts to rapid, automated paths aligned with defined acceptance criteria, while keeping control points in place.

Pros:

- Test selection adapts to change context.

- Deployment verification checks runtime signals.

- Pipeline behavior stays consistent across teams.

Cons:

- AI features depend on historical pipeline data.

- Adoption requires standardizing on the Harness tooling.

- Advanced capabilities increase configuration effort.

Pricing: A free option is available, but you need to contact sales for the paid plans.

9. Ansible Lightspeed: Best AI Tool for DevOps Automation

Ansible Lightspeed applies generative AI to infrastructure automation. It focuses on how playbooks are written rather than how systems behave at runtime. The tool translates intent into Ansible syntax through dynamic prompts, which changes how automation code is created and reviewed.

That makes it relevant when standardization and speed matter more than deep delivery analysis. The scope stays limited to Ansible content, so the impact depends on how widely automation is already used.
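Because generated playbooks still need review before execution, one option is an automated pre-check in front of the human review. This sketch assumes the generated tasks have already been parsed from YAML into Python dictionaries; the set of modules treated as risky and the rules themselves are illustrative assumptions, not part of Ansible Lightspeed.

```python
RISKY_MODULES = {"ansible.builtin.shell", "ansible.builtin.command", "ansible.builtin.raw",
                 "shell", "command", "raw"}

def review_flags(tasks: list[dict]) -> list[str]:
    """Return issues that should block auto-approval of generated Ansible tasks."""
    flags = []
    for i, task in enumerate(tasks):
        label = task.get("name") or f"task #{i + 1}"
        if "name" not in task:
            flags.append(f"{label}: missing 'name'")
        # Free-form command modules bypass the idempotent behavior of purpose-built modules.
        for module in sorted(task.keys() & RISKY_MODULES):
            flags.append(f"{label}: uses '{module}' module")
    return flags
```

A non-empty result routes the playbook to a reviewer; an empty one still gets a human look, just a faster one.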

Pros:

- Natural language input generates Ansible tasks and roles.

- Suggestions follow common Ansible patterns.

- Embedded directly in existing Ansible workflows.

Cons:

- Requires Ansible Automation Platform licensing.

- Cloud-based prompts may conflict with strict policies.

- Output still needs a full review before execution.

Pricing: Custom quote.

10. CircleCI AI: Best for Pipeline Optimization

CircleCI AI reduces friction in build and test pipelines. The system assists with pipeline configuration, test distribution, and failure analysis based on historical execution data.

That changes how quickly feedback reaches engineers and how reliably pipelines behave under parallel load. The effect depends on data quality and how consistently pipelines run.
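Timing-based test splitting balances parallel jobs by handing the slowest remaining test to whichever job currently has the least work. This is a minimal greedy sketch of that idea (the longest-processing-time heuristic), not CircleCI's implementation; the test names and durations are hypothetical.

```python
import heapq

def split_by_timing(durations: dict[str, float], jobs: int) -> list[list[str]]:
    """Assign tests to `jobs` parallel buckets, longest test first,
    always to the bucket with the smallest total runtime so far."""
    heap = [(0.0, j) for j in range(jobs)]  # (total seconds, job index)
    buckets: list[list[str]] = [[] for _ in range(jobs)]
    for test, secs in sorted(durations.items(), key=lambda kv: kv[1], reverse=True):
        total, j = heapq.heappop(heap)
        buckets[j].append(test)
        heapq.heappush(heap, (total + secs, j))
    return buckets
```

With tests of 120, 90, 60, and 30 seconds on two jobs, each job ends up with 150 seconds of work. The split is only as good as the historical timings, which matches the data-quality dependency noted above.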

At Maze, CircleCI supported a shift from infrequent releases to rapid, repeatable delivery. Lead time dropped sharply as pipelines became more predictable and less constrained by slow tests.

Pros:

- Test splitting balances execution across parallel jobs.

- Pipeline guidance reduces configuration errors.

- Failure analysis points to recurring slow steps.

Cons:

- AI features have limited availability across accounts.

- Results depend on sufficient historical pipeline data.

- Faster pipelines can increase credit consumption.

Pricing: A free option is available, but the performance plan starts at $15 per month.

What to Measure When Using AI Tools for DevOps Engineers

When using AI tools for DevOps engineers, you need to measure delivery, stability, efficiency, and adoption metrics. These categories tell you whether AI changes flow, reduces risk, or simply shifts effort.

These are the metrics that matter most.

Delivery Metrics

Delivery metrics show whether work moves faster without creating downstream friction. These are the signals to track:

- Cycle time: It reflects how long work takes from start to completion inside the pipeline. The top 25% of successful engineering teams achieve a cycle time of 1.8 days.

- Lead time for changes: This measures how long it takes for a code change to move from commit to running in the production environment. According to the DORA 2025 report, 9.4% of teams achieve lead time for changes in less than one hour.

- Deployment frequency: The metric captures how frequently validated changes reach production. Here, the DORA 2025 report reveals that 16.2% of teams deploy on demand.
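Lead time for changes and deployment frequency can both be computed from deployment records. The sketch assumes a hypothetical list of deployments, each holding the commit time of its change and the time it reached production. The DORA report's percentages come from survey responses, so use numbers like these to track your own trend rather than to rank against the report.

```python
from datetime import datetime, timedelta
from statistics import median

def lead_time_hours(deploys: list[tuple[datetime, datetime]]) -> float:
    """Median hours from commit to running in production."""
    return median([(deployed - committed) / timedelta(hours=1) for committed, deployed in deploys])

def deploys_per_week(deploys: list[tuple[datetime, datetime]], days: int) -> float:
    """Average production deployments per week over a `days`-long window."""
    return len(deploys) / (days / 7)
```

Using the median rather than the mean keeps one stuck change from distorting the picture.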

Stability Metrics

Stability metrics confirm whether speed holds up under failure conditions. These indicators guard against false confidence:

- MTTR (failed deployment recovery time): This measures how quickly service recovers after a failed deployment. The DORA 2025 report shows that 21.3% of teams recover in less than one hour.

- Change failure rate: This tracks the share of deployments that cause incidents or rollbacks. According to the DORA 2025 report, 8.5% of teams have failure rates between 0% and 2%.
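Both stability metrics can come from the same deployment log. This sketch assumes a hypothetical record per deployment noting whether it failed (caused an incident or rollback) and, if so, when service was restored.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median
from typing import Optional

@dataclass
class Deployment:
    deployed_at: datetime
    failed: bool = False
    restored_at: Optional[datetime] = None  # set only for failed deployments

def change_failure_rate(deploys: list[Deployment]) -> float:
    """Share of deployments that caused an incident or rollback."""
    return sum(d.failed for d in deploys) / len(deploys)

def recovery_time_minutes(deploys: list[Deployment]) -> float:
    """Median minutes from a failed deployment to restored service."""
    times = [(d.restored_at - d.deployed_at) / timedelta(minutes=1)
             for d in deploys if d.failed and d.restored_at]
    return median(times)
```

Reading these two next to the delivery metrics is what exposes speed gains that are being paid for in failures.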

Efficiency Metrics

Efficiency metrics surface where time is lost inside the workflow. You should track:

- Review speed: It shows how long changes wait before approval. This metric reveals whether AI reduces backlog or increases review load.

- Build time: The metric measures how long pipelines run before validation completes. Changes here indicate whether AI reduces queue pressure or masks slow stages.

- Automation coverage: Automation coverage tracks how much of the pipeline runs without manual steps. This helps you see whether AI removes effort or creates new oversight points.
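Review speed and automation coverage need only timestamps and a step inventory. The sketch assumes hypothetical pull-request records with opened and first-approval times, and a map of pipeline steps marked automated (`True`) or manual (`False`).

```python
from datetime import datetime, timedelta
from statistics import median

def review_wait_hours(prs: list[tuple[datetime, datetime]]) -> float:
    """Median hours a change waits between opening and first approval."""
    return median([(approved - opened) / timedelta(hours=1) for opened, approved in prs])

def automation_coverage(steps: dict[str, bool]) -> float:
    """Share of pipeline steps that run without a manual action."""
    return sum(steps.values()) / len(steps)
```

For example, three pull requests approved after 2, 24, and 6 hours give a median wait of 6 hours, and a pipeline with one manual approval out of four steps has 75% automation coverage.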

Adoption Metrics

Adoption metrics separate usage from impact. Here are the metrics you can track:

- Percentage of AI-assisted commits: This metric shows how frequently AI participates in code creation. It tells you how widespread usage is, not whether outcomes improved.

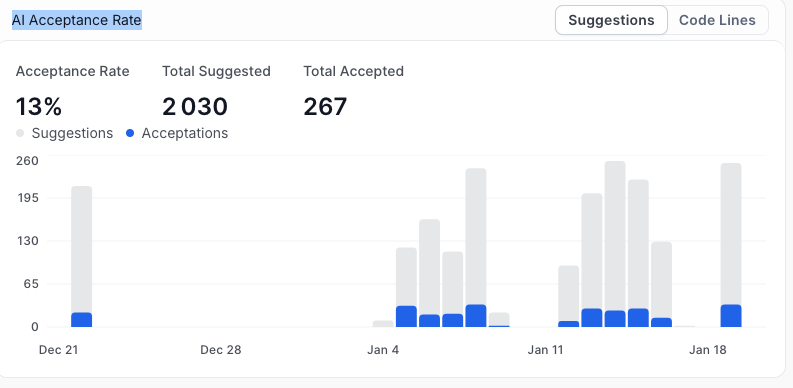

- AI acceptance rate: Acceptance rate measures how often AI-generated suggestions are actually used by developers. This helps distinguish passive exposure from real adoption and surfaces whether teams trust the output enough to integrate it into production work.

Pro tip: Axify visualizes this through its AI Acceptance Rate dashboard, which shows total suggestions versus accepted changes over time.

- AI adoption rate: The AI adoption rate measures active users versus licensed users. Axify tracks this directly, which lets you compare adoption trends against delivery and stability shifts.
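These adoption metrics are simple ratios, but defining the numerator and denominator explicitly keeps them comparable over time. The inputs below are hypothetical; in practice they would come from your AI assistant's usage data or a tool such as Axify, whose internal calculation may differ. The `ai_assisted` commit flag is an assumed convention (for example, set from a commit trailer).

```python
def acceptance_rate(suggested: int, accepted: int) -> float:
    """Share of AI suggestions that developers kept."""
    return accepted / suggested if suggested else 0.0

def adoption_rate(active_users: int, licensed_users: int) -> float:
    """Share of licensed seats actually in use."""
    return active_users / licensed_users if licensed_users else 0.0

def ai_assisted_commit_share(commits: list[dict]) -> float:
    """Share of commits flagged as AI-assisted (missing flag counts as not assisted)."""
    return sum(bool(c.get("ai_assisted")) for c in commits) / len(commits) if commits else 0.0
```

None of these ratios says anything about outcomes on its own; they become useful when plotted against the delivery and stability metrics above.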

Best Practices for Implementing AI Tools in DevOps

AI works when it fits cleanly into how delivery already operates. The goal here is controlled change, clear validation, and fast rollback if results move the wrong way.

Here are the DevOps best practices that consistently hold up in production environments:

- Start with low-risk workflows: Begin where failure has a limited blast radius, such as code suggestions or test selection. This lets behavior surface without affecting release stability or on-call load.

- Establish a performance baseline: Capture cycle time, review wait time, build duration, and recovery speed before AI enters the workflow. Without that baseline, later changes cannot be attributed with confidence.

- Validate all AI output: Treat AI-generated output as input rather than authority. Reviews, tests, and checks stay mandatory, especially where configuration or infrastructure changes are involved.

- Monitor regressions or new bottlenecks: Watch for shifts in queues, review depth, or failure patterns. In our experience, faster upstream work can create slower downstream handoffs if not tracked closely.

- Use Axify’s AI Performance Comparison feature before scaling: You can compare performance with and without AI across the same teams and timeframes. That comparison confirms whether flow actually improves before expanding usage across groups.

Turning AI Adoption Into Measurable Delivery Gains

AI tools change specific parts of the delivery pipeline, but only measured outcomes justify wider use. What matters is whether review queues shorten, deployments move sooner, incidents recover faster, and noise drops without hiding risk.

That keeps decisions grounded in evidence rather than tool claims. When adoption is paced, validated, and compared side by side, scaling becomes a controlled step rather than a leap of faith.

To verify impact before expanding usage, contact Axify and evaluate results across teams with clear, comparable data.
